Xinyan Velocity Yu

@xinyanvyu

#NLProc PhD @usc, bsms @uwcse | Previously @Meta @Microsoft @Pinterest | Doing random walks in Seattle

ID: 1288519465756315648

https://velocitycavalry.github.io 29-07-2020 16:59:25

117 Tweets

802 Followers

662 Following

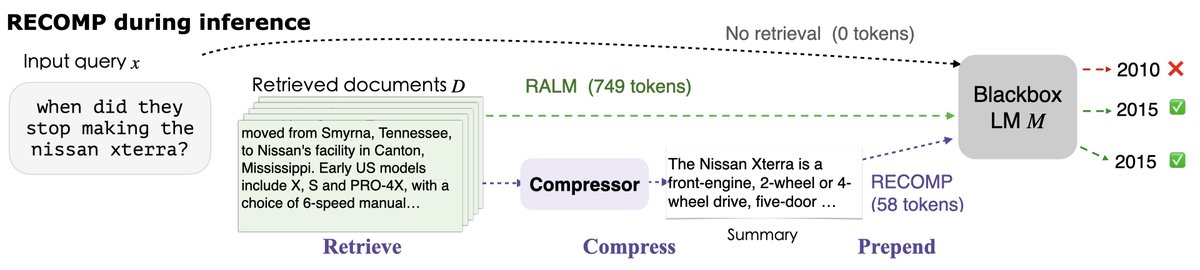

🔌Enhancing language models with retrieval boosts performance but demands more compute to encode the retrieved documents. Do we need all of the documents for the gains? We present 𝐑etrieve 𝐂ompress 𝐏repend (𝐑𝐄𝐂𝐎𝐌𝐏) arxiv.org/abs/2310.04408 (w/ Weijia Shi, Eunsol Choi)
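The retrieve → compress → prepend idea can be illustrated with a toy pipeline: instead of prepending every retrieved document, keep only the few sentences most relevant to the query. This is a minimal sketch, not the RECOMP implementation. The paper trains its compressors; the word-overlap scorer below is a hypothetical stand-in, and the helper names (`split_sentences`, `compress`, `prepend`) are illustrative.

```python
import re


def split_sentences(doc: str) -> list[str]:
    # Naive splitter: break after ".", "!" or "?" followed by whitespace.
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", doc) if s.strip()]


def words(text: str) -> set[str]:
    # Lowercased bag of word tokens.
    return set(re.findall(r"\w+", text.lower()))


def compress(docs: list[str], query: str, top_k: int = 1) -> list[str]:
    # Hypothetical stand-in scorer: number of words a sentence shares with the query.
    # (A learned compressor would replace this scoring step.)
    q = words(query)
    candidates = [s for d in docs for s in split_sentences(d)]
    ranked = sorted(candidates, key=lambda s: len(q & words(s)), reverse=True)
    return ranked[:top_k]


def prepend(summary: list[str], query: str) -> str:
    # Prepend only the compressed evidence, not the full retrieved documents.
    return " ".join(summary) + "\n\n" + query


docs = [
    "Paris is the capital of France. It has many museums.",
    "Berlin is in Germany. The Eiffel Tower is in Paris.",
]
query = "What is the capital of France?"
prompt = prepend(compress(docs, query), query)
print(prompt)
```

Here the LM would read one short sentence of evidence instead of both documents, which is where the encoding savings come from.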

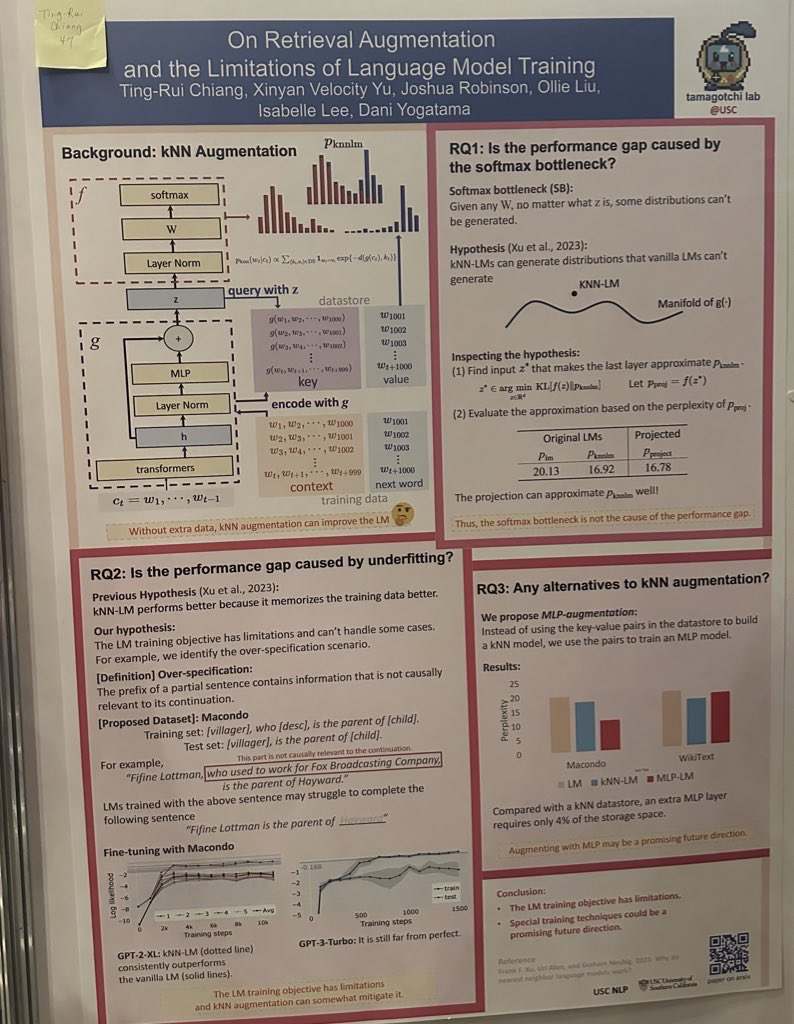

Venelin Kovatchev Ben Zhou 🌴Muhao Chen🌴 Awesome analysis of what KNN-LM says about training: Is the seeming "free lunch" of KNN-LM (replacing top LM layers with an embedding store and KNN lookup) due to a weakness of the LM objective? Seems not! Training a replacement MLP on the KNN does better! 🤔 aclanthology.org/2024.naacl-sho…
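For readers unfamiliar with KNN-LM, the usual recipe stores (context embedding, next token) pairs, retrieves the k nearest stored contexts for the current embedding, turns their distances into a distribution over next tokens, and interpolates that with the base LM's distribution. The sketch below follows that common formulation with toy 2-d embeddings; it is not code from the linked paper, and the datastore contents, `k`, `temperature`, and `lam` values are illustrative assumptions.

```python
import math
from collections import defaultdict

# Hypothetical datastore entry: (context embedding, token that followed that context).
Datastore = list[tuple[list[float], str]]


def knn_distribution(query: list[float], datastore: Datastore, k: int = 2,
                     temperature: float = 1.0) -> dict[str, float]:
    # Squared L2 distance from the query embedding to every stored key, nearest first.
    scored = sorted(
        (sum((q - x) ** 2 for q, x in zip(query, key)), token)
        for key, token in datastore
    )
    neighbors = scored[:k]
    # Softmax over negative distances of the k nearest neighbors.
    weights = [math.exp(-d / temperature) for d, _ in neighbors]
    total = sum(weights)
    probs = defaultdict(float)
    for w, (_, token) in zip(weights, neighbors):
        probs[token] += w / total  # neighbors with the same next token pool their mass
    return dict(probs)


def interpolate(p_lm: dict[str, float], p_knn: dict[str, float],
                lam: float = 0.25) -> dict[str, float]:
    # p(y) = lam * p_kNN(y) + (1 - lam) * p_LM(y)
    vocab = set(p_lm) | set(p_knn)
    return {t: lam * p_knn.get(t, 0.0) + (1 - lam) * p_lm.get(t, 0.0) for t in vocab}


datastore = [([0.0, 0.0], "cat"), ([0.1, 0.0], "cat"), ([5.0, 5.0], "dog")]
p_knn = knn_distribution([0.0, 0.0], datastore, k=2)
mixed = interpolate({"cat": 0.4, "dog": 0.6}, p_knn)
print(mixed)
```

Both nearest neighbors predicted "cat", so the lookup shifts probability toward "cat" (0.25 * 1.0 + 0.75 * 0.4 ≈ 0.55) even though the base LM preferred "dog".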

#NAACL2024 NAACL HLT 2024: Reasoning or Reciting? Exploring the Capabilities and Limitations of Language Models Through Counterfactual Tasks, Zhaofeng Wu arxiv.org/pdf/2307.02477