Miles Wang

@mileskwang

Research @OpenAI, prev @Harvard

ID: 1611796692017225728

07-01-2023 18:48:13

46 Tweets

322 Followers

898 Following

TL;DR: we are excited to release a powerful new open-weight language model with reasoning in the coming months, and we want to talk to devs about how to make it maximally useful: openai.com/open-model-fee… we are excited to make this a very, very good model! __ we are planning to

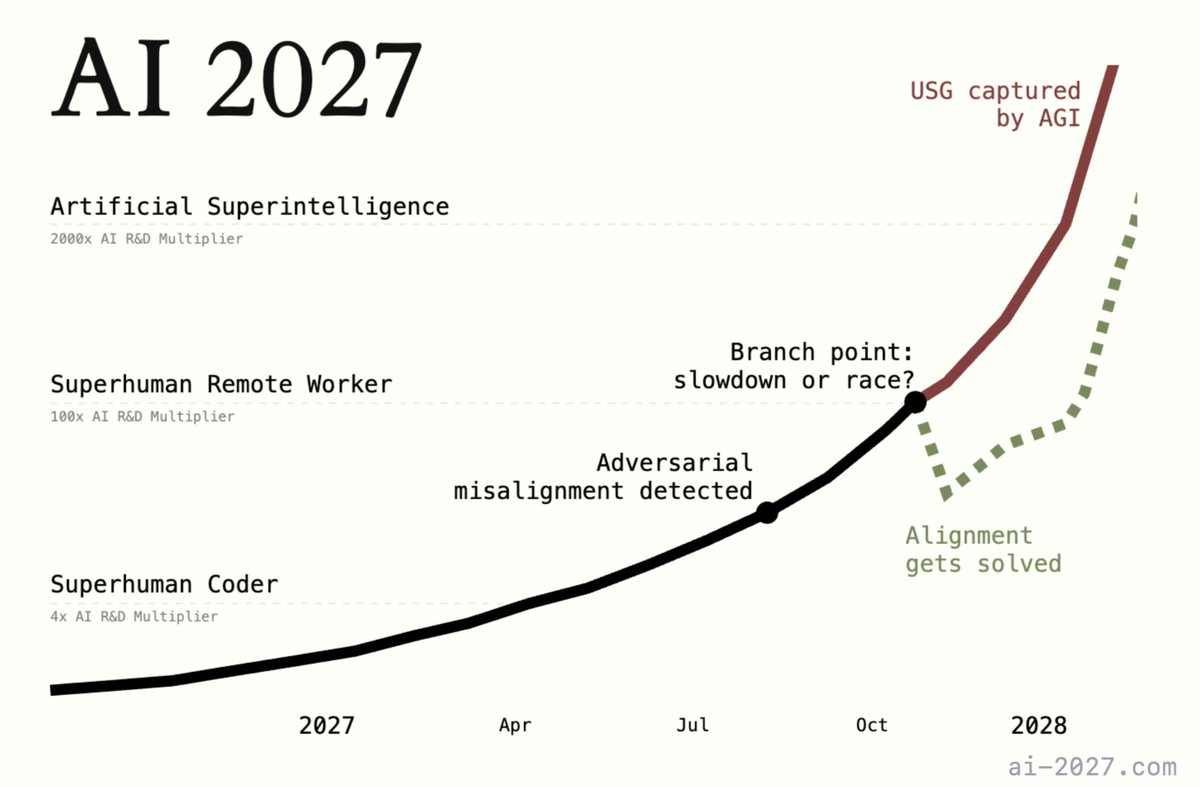

"How, exactly, could AI take over by 2027?" Introducing AI 2027: a deeply-researched scenario forecast I wrote alongside Scott Alexander, Eli Lifland, and Thomas Larsen

Wrote today in The New York Times about the dangers of blurring scholarship and activism.

Very proud to share #HealthBench with the world, alongside Karan Singhal, Jason Wei, Rebecca Soskin Hicks, MD, Joaquin Quiñonero Candela, and many others.