Instruction Workshop, NeurIPS 2023

@itif_workshop

The official account of the 1st Workshop on Instruction Tuning and Instruction Following (ITIF), co-located with NeurIPS in December 2023.

ID: 1689312241542430721

https://an-instructive-workshop.github.io/ 09-08-2023 16:27:08

162 Tweets

261 Followers

26 Following

📢 Check out Anthony Chen's and my invited talk at the USC ISI Natural Language Seminar: 📜 "The Data Provenance Initiative: A Large Scale Audit of Dataset Licensing & Attribution in AI" youtube.com/watch?v=np9HeJ… Thank you Justin Cho 조현동 for hosting!

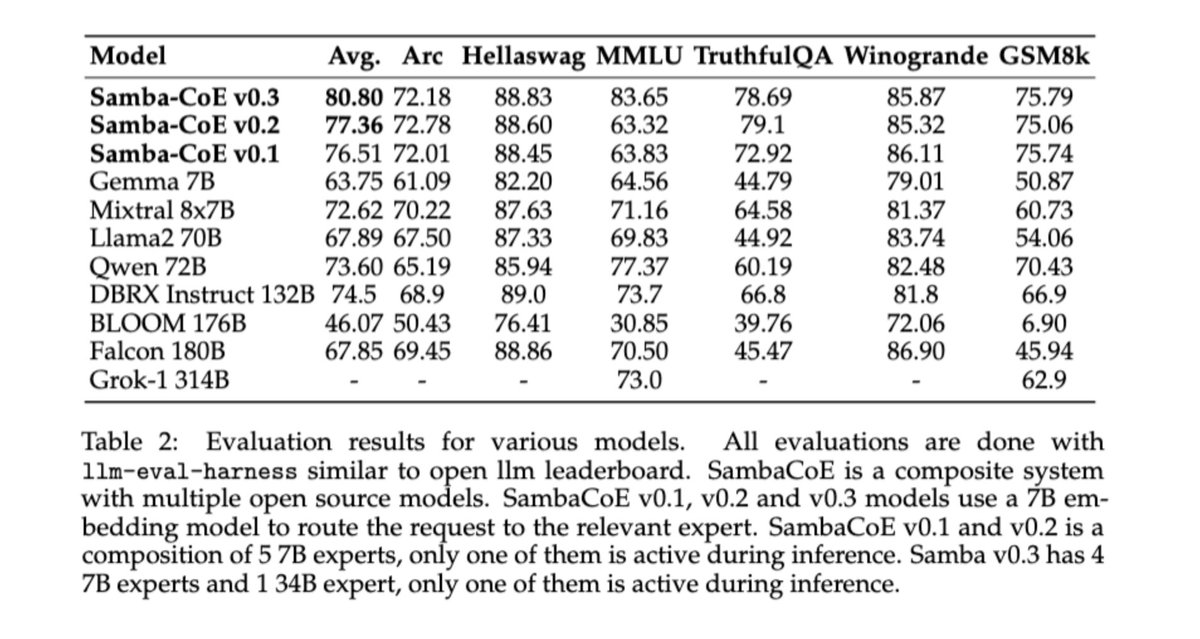

🚀🌟🚀Excited to announce Samba-CoE v0.2, which outperforms DBRX by Databricks Mosaic Research and Databricks, Mixtral-8x7B from Mistral AI, and Grok-1 by Grok at a breakneck speed of 330 tokens/s. These breakthrough speeds were achieved without sacrificing precision and only on 8 sockets,

📢 Want to automatically generate your bibtex for 1000s of Hugging Face text datasets? Minh Chien Vu just added this feature + data summaries for: ➡️ huge collections like Flan, P3, Aya... ➡️ popular OpenAI-generated datasets ➡️ ~2.5k+ datasets & growing 🔗:

Excited to see our 🍮Flan-Palm🌴 work finally published in the Journal of Machine Learning Research 2024! Looking back, I see this work as pushing hard on scaling: post-training data, models, prompting, & eval. We brought together the methods and findings of many awesome prior works, scaled them up, and

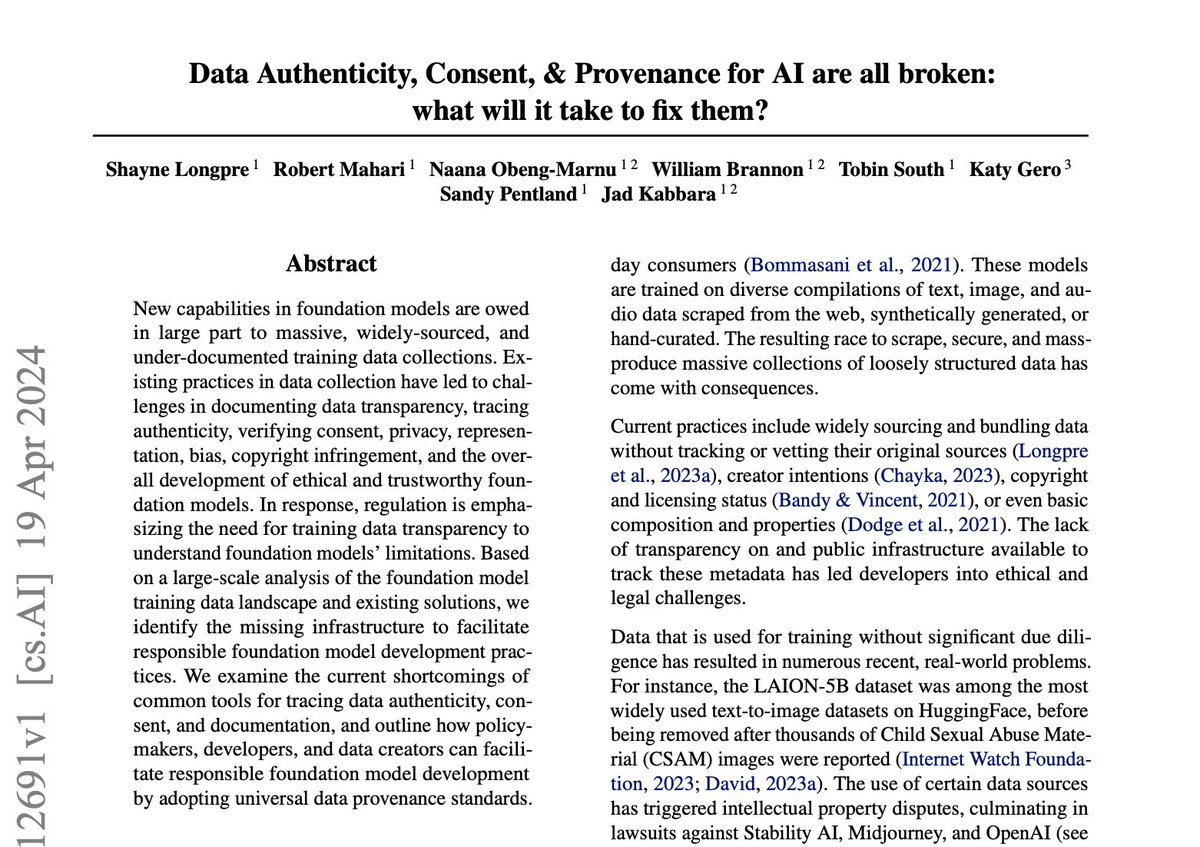

🔭 New perspective piece at #ICML2024 & Massachusetts Institute of Technology (MIT) AI Impact Award winner🎉 🌟Data Authenticity, Consent, and Provenance for AI Are All Broken: What Will It Take to Fix Them?🌟 w/ Robert Mahari Naana Obeng-Marnu Will Brannon Tobin South katy ilonka gero Alex Pentland Jad Kabbara 🔗:

✨Incredibly proud to share our new paper led by the MIT Media Lab showing a rapid decline in consenting data for AI, asymmetries in data access by company (🔻26% OpenAI, 🔻13% Anthropic), and inefficiencies in robots.txt preference protocols. dataprovenance.org/consent-in-cri…

The Data Provenance Initiative led by the MIT Media Lab is releasing a large-scale audit of 1800+ LLM training datasets! We found significant data access asymmetries by company (🔻26% OpenAI, 🔻13% Anthropic). See Shayne Longpre's thread for more ⬇️ x.com/ShayneRedford/…

Excellent breakdown by Kevin Roose in The New York Times of the recent shifts in web norms and in consent to use web data for AI. nytimes.com/2024/07/19/tec…

📢 AI is increasingly (mis)used in the context of autonomous weaponry. Fantastic to see this covered by Catherine Caruso in Harvard Medical School news. Also see the #ICML2024 Oral led by Riley Simmons-Edler @RyanBadman1 and Kanaka Rajan.