Aleksandr Dremov

@alexdremov_me

ML Engineer | Student at EPFL

ID: 817763527679062016

https://alexdremov.me

07-01-2017 16:03:13

36 Tweets

37 Followers

115 Following

New TMLR paper by Master (!) student @alexdremov_me: "Training Dynamics of the Cooldown Stage in Warmup-Stable-Decay Learning Rate Scheduler". We finally understand the negative square root (1-sqrt) cooldown. TL;DR: it gets you the best bias-variance tradeoff :) w/ Atli Kosson, M Jaggi

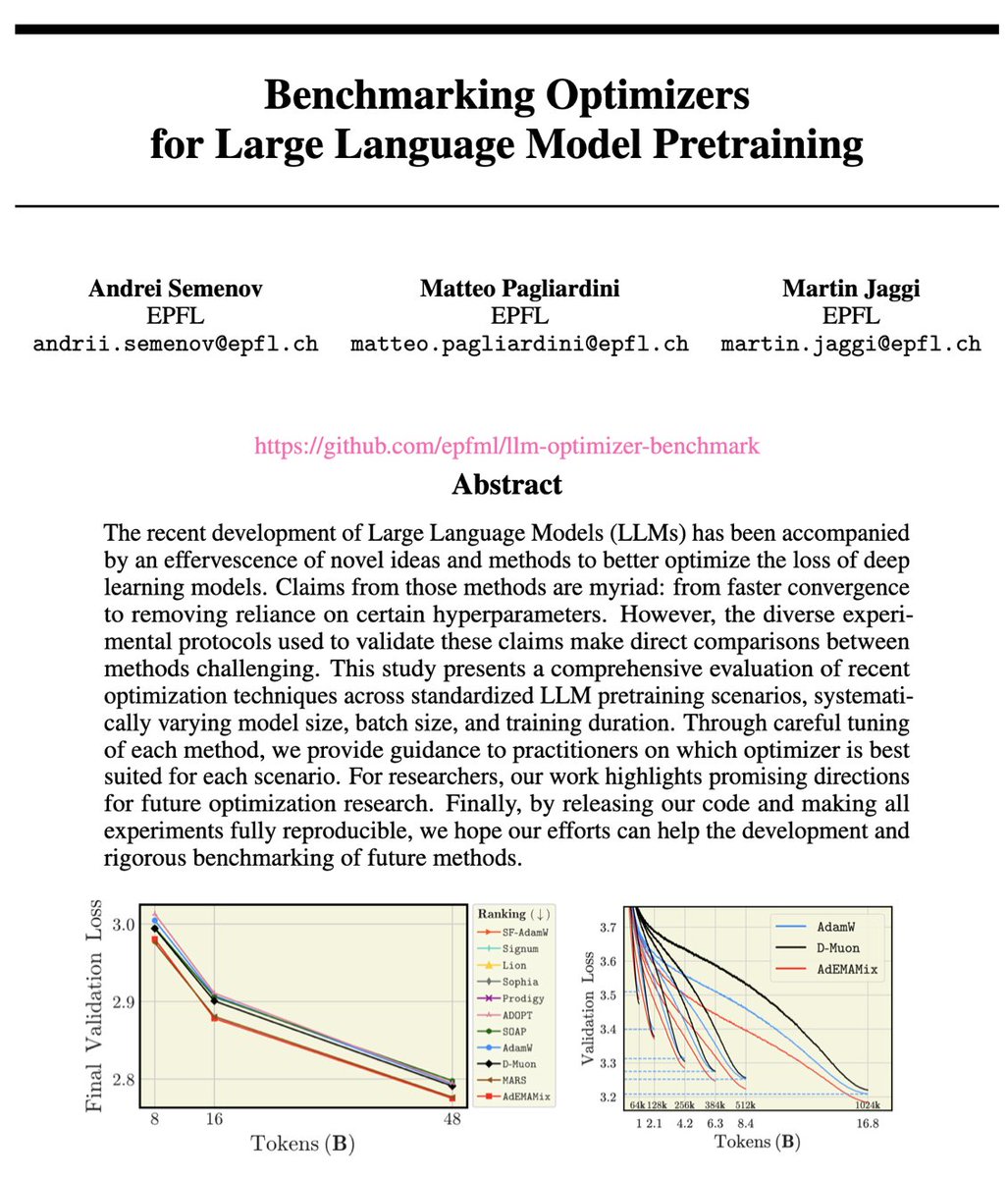
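For reference, here is a minimal Python sketch of a Warmup-Stable-Decay (WSD) learning rate schedule with a 1-sqrt cooldown. It assumes a linear warmup and the commonly used cooldown form `lr = peak_lr * (1 - sqrt(progress))`, where `progress` runs from 0 to 1 over the cooldown. The function `wsd_lr` and its parameters are hypothetical illustrations, not code from the paper.

```python
import math


def wsd_lr(step: int, total_steps: int, peak_lr: float,
           warmup_steps: int, cooldown_steps: int) -> float:
    """Hypothetical WSD schedule with a 1-sqrt cooldown (illustrative sketch)."""
    # Warmup: assumed linear ramp from peak_lr / warmup_steps up to peak_lr.
    if step < warmup_steps:
        return peak_lr * (step + 1) / warmup_steps

    # Stable phase: constant peak learning rate until the cooldown begins.
    cooldown_start = total_steps - cooldown_steps
    if step < cooldown_start:
        return peak_lr

    # Cooldown: progress goes from 0 to 1 over the final cooldown_steps,
    # and the learning rate follows 1 - sqrt(progress) down to zero.
    progress = min(1.0, (step - cooldown_start) / cooldown_steps)
    return peak_lr * (1.0 - math.sqrt(progress))


if __name__ == "__main__":
    for s in (0, 500, 800, 850, 1000):
        print(s, wsd_lr(s, total_steps=1000, peak_lr=1.0,
                        warmup_steps=100, cooldown_steps=200))
```

At 25% of the cooldown (step 850 above) this gives 0.5 × `peak_lr`, whereas a linear cooldown would still be at 0.75 × `peak_lr`: the 1-sqrt shape drops the learning rate faster early in the cooldown and more gently near the end.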

New work from our MLO lab at EPFL: benchmarking the many proposed LLM optimizers (Muon, AdEMAMix, ...) all in the same setting, tuned, with varying model size, batch size, and training duration! Huge sweep of experiments by Andrei Semenov, Matteo Pagliardini, M Jaggi