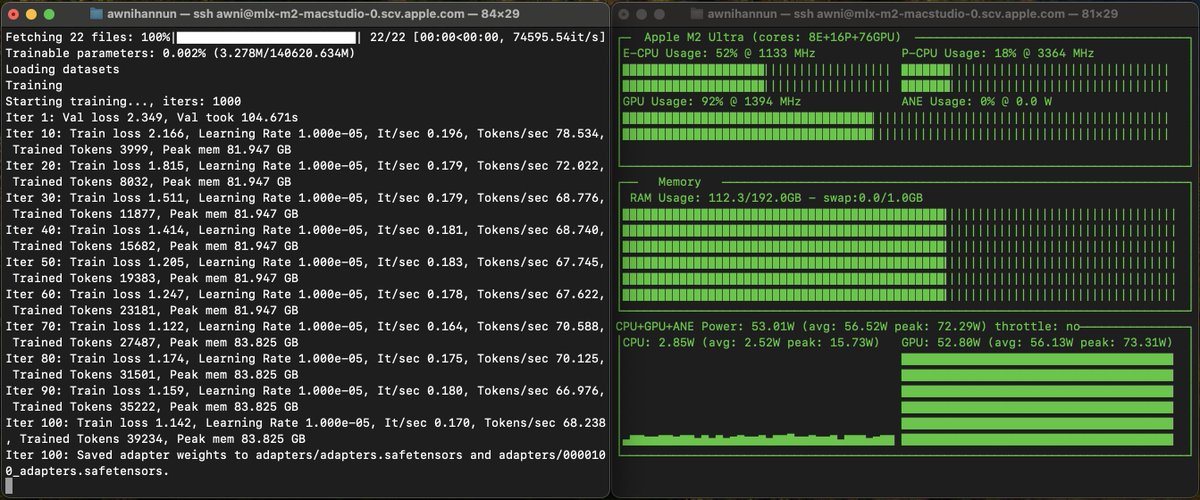

Awni Hannun

@awnihannun

Machine Learning Research @Apple

ID: 245262377

https://awnihannun.com/

31-01-2011 08:05:27

1.7K Tweets

16.2K Followers

245 Following

Seeing amazing AI communities like MLX thrive on Hugging Face is just the best! We can do more & better all together!

LangChain + MLX integration is OUT 🎉

You can now use all of LangChain's features with MLX.

Thank you to Awni Hannun (MLX), Jacob Lee, Erick Friis, Harrison Chase and the entire LangChain team for their hard work and for helping me contribute.👏🏾

github.com/langchain-ai/l…