Desmond Elliott

@delliott

No longer active here. Associate Professor at the University of Copenhagen working on multimodal machine learning.

ID: 8164582

https://elliottd.github.io/

13-08-2007 18:37:04

3.3K Tweets

2.2K Followers

420 Following

Very insightful remarks by (((ل()(ل() 'yoav))))👾. Looking back in history, what is going on is not new and probably not very different from the empirical revolution in the 90s or the shift to DL in NLP in ~2015 (of which LLMs are just a natural continuation).

Fun new paper led by Ingo Ziegler and Abdullatif Köksal showing how retrieval augmentation can be used to create high-quality supervised fine-tuning data. All you need to do is write a few examples that demonstrate the task.

I’m looking for great recent or soon-to-be MSc graduates in NLP, Speech, or Theoretical Deep Learning who are interested in a research assistant position with me lasting up to 6 months or 1 year. They would be hired by DTU Compute.

Can you imagine working at a company that not only supports you, but celebrates you? 😍 Feeling all kinds of gratitude for being able to work at Hugging Face.

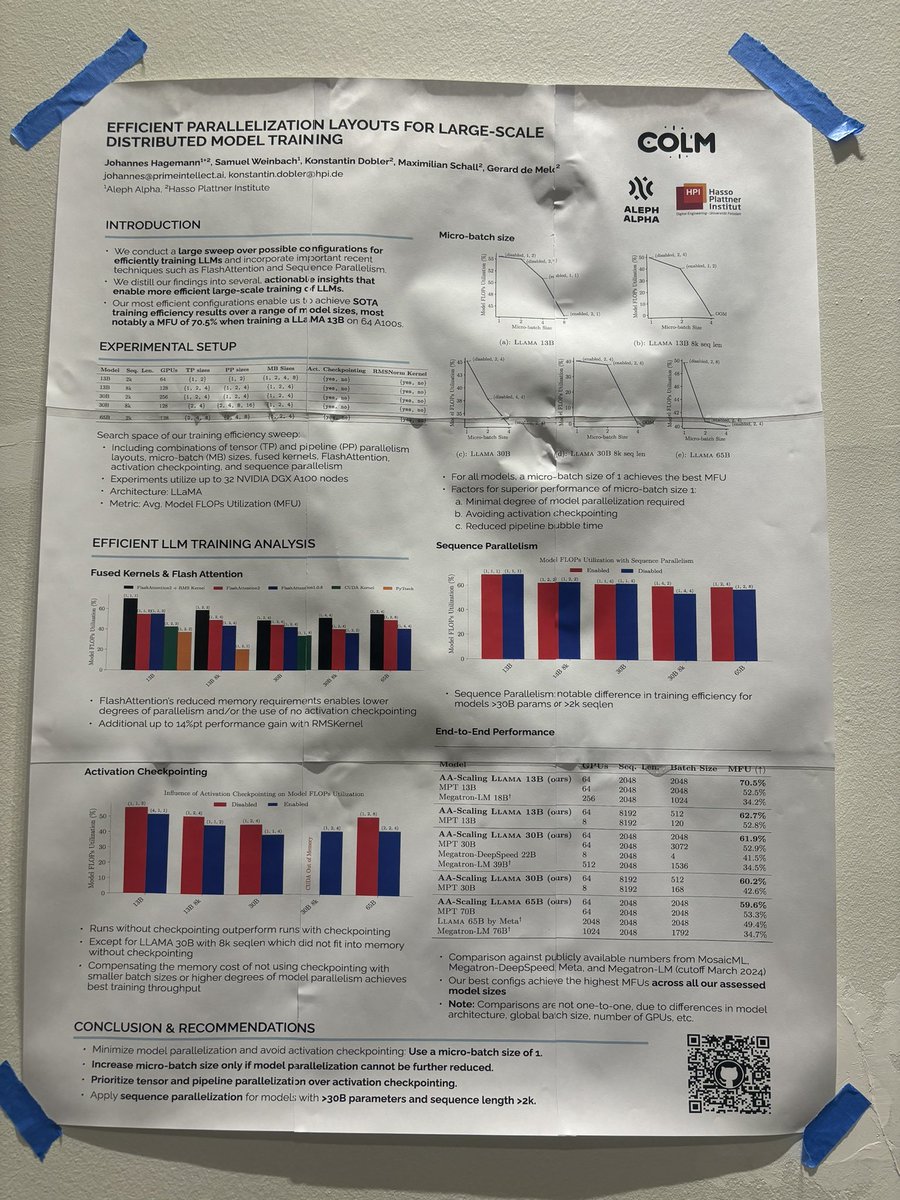

Presented our paper on compute-efficient large-scale distributed model training, using cost-efficient large-scale distributed poster printing, at the Conference on Language Modeling yesterday! Happy to chat about compute-efficient training and more today and tomorrow if you are at COLM!

How do multilingual models store and retrieve factual knowledge in different languages? And do these mechanisms vary across languages? 🗺️ In our newly released paper, we explore these questions! 📄 arxiv.org/abs/2410.14387 🤗 Negar Foroutan Desmond Elliott 🧵👇

Recent work led by Constanza Fierro and Negar Foroutan on understanding the mechanisms behind multilingual factual recall.