Brown NLP

@brown_nlp

Language Understanding and Representation Lab at Brown University. PI: Ellie Pavlick.

ID: 1321062394722922497

https://lunar.cs.brown.edu/ 27-10-2020 12:13:23

152 Tweets

3.3K Followers

143 Following

Job opportunity! At Brown University's Data Science Institute, I direct the (new) Center for Tech Responsibility (CNTR). I'm looking to hire a Program Manager who would work with me to help operationalize the vision for the Center. If you're interested, apply! brown.wd5.myworkdayjobs.com/staff-careers-…

Now hiring: Twelve (!) PhD students to start in fall 2025, for research on combining neural and symbolic/interpretable models of language, vision, and action. Work with world-class advisors at Universität Saarland, MPI Informatics, MPI-SWS, @CISPA, DFKI. Details: neuroexplicit.org/jobs

🚨 New paper at NeurIPS w/ Michael Lepori! Most work on interpreting vision models focuses on concrete visual features (edges, objects). But how do models represent abstract visual relations between objects? We adapt NLP interpretability techniques to ViTs to find out! 🔍

David Byrne won't be at NeurIPS, but we will be!

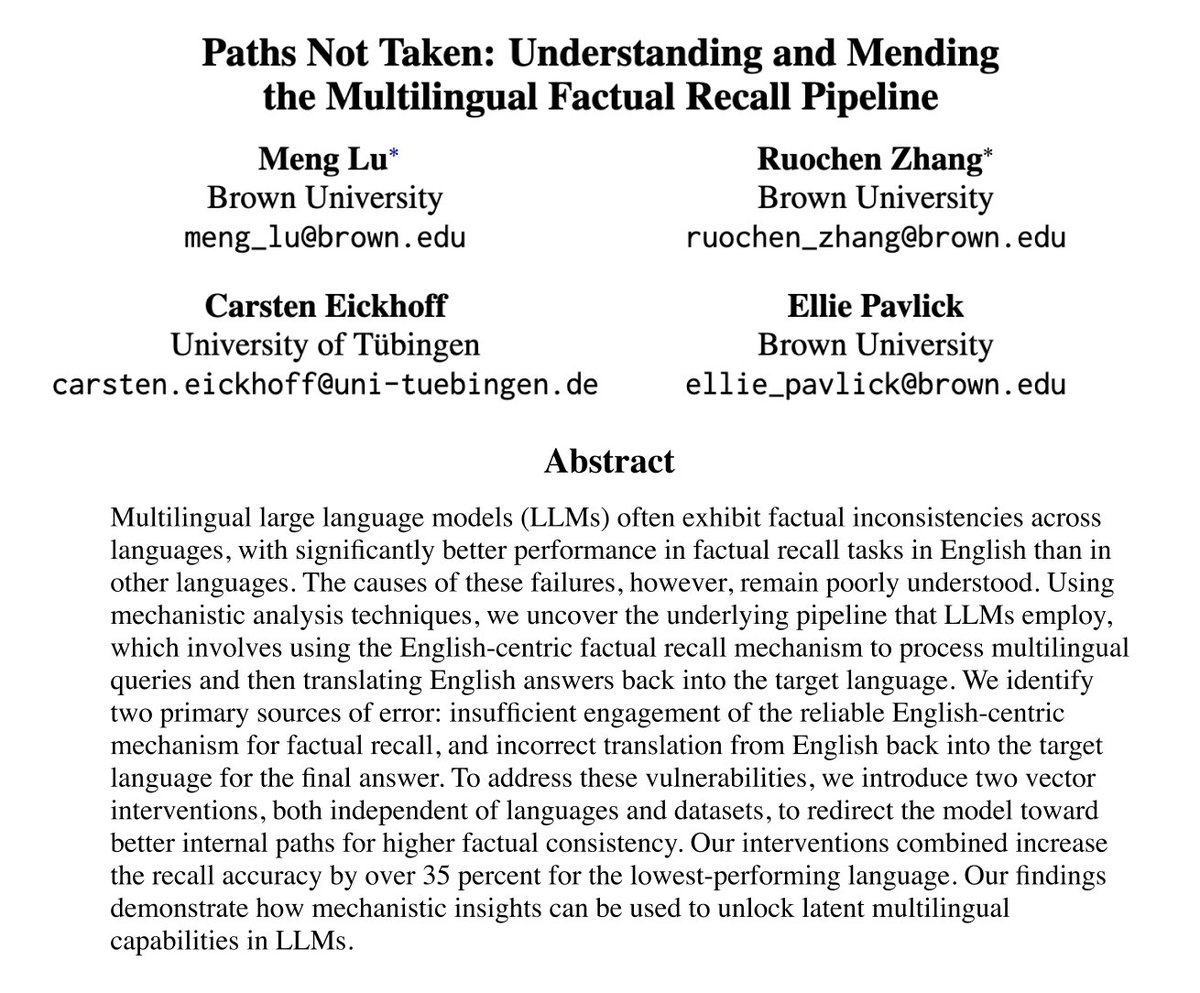

🥳 Our recent work has been accepted to the #EMNLP2025 main conference! In this paper, we leverage actionable interpretability insights to fix factual errors in multilingual LLMs 🔍 Huge shoutout to Meng Lu (Jennifer) for her incredible work on this! She's applying to PhD programs this cycle and you should