Jingwei Zuo

@jingweizuo

Lead Researcher @tiiuae, Falcon LLM team huggingface.co/tiiuae

ID: 1065296158669582339

https://jingweizuo.com 21-11-2018 17:29:31

46 Tweets

61 Followers

77 Following

Amazing work!! Prince Canuma 🚀

Very nice work, Dhia eddine Rhaiem! More options are now available for Falcon-H1 full fine-tuning and LoRA tuning with LLaMA-Factory.

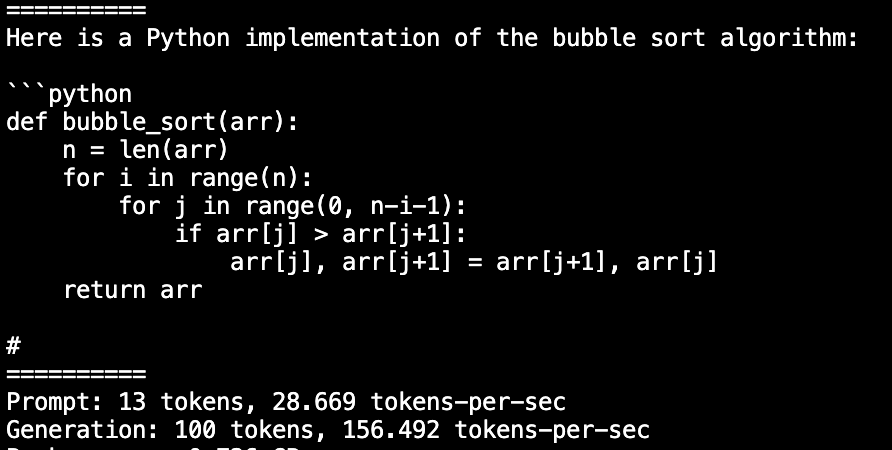

Excited to have contributed to the Falcon-E (BitNet) integration in mlx-lm with Prince Canuma and Awni Hannun. Falcon-E is now fully supported in mlx-lm - as simple as `mlx_lm.generate --model tiiuae/Falcon-E-1B-Instruct --prompt "Implement bubble sort" --max-tokens 100 --temp 0.1` 🚀

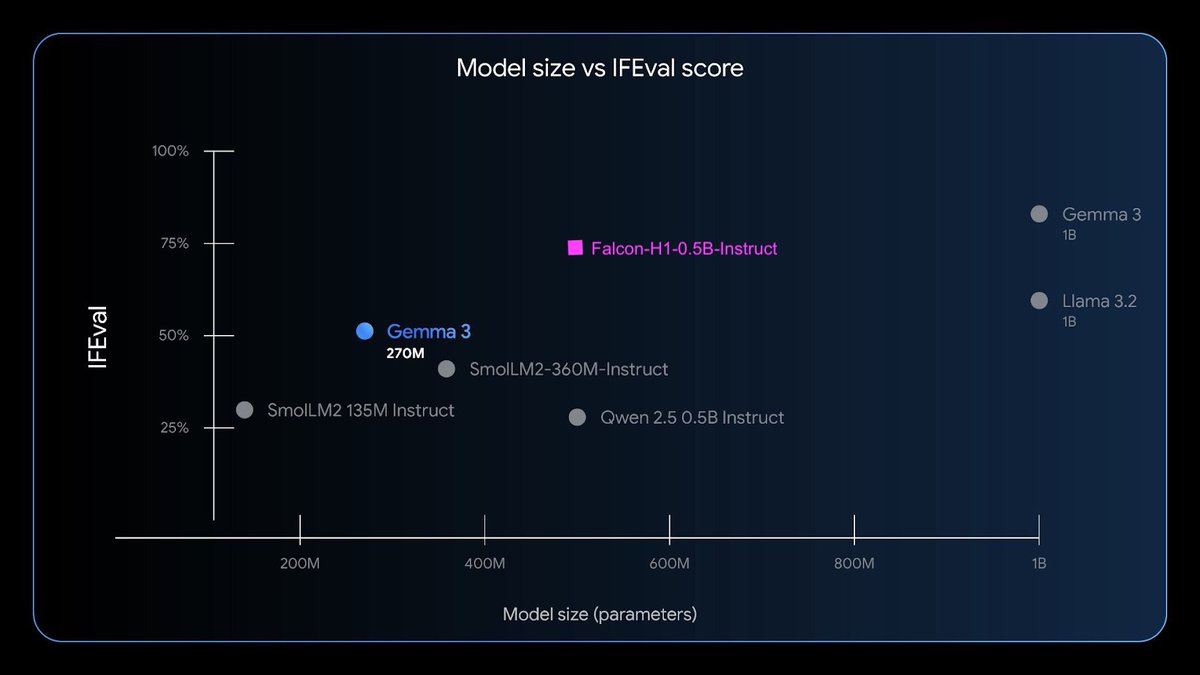

Omar Sanseviero, congrats on the release, but you forgot to include the SoTA in the chart: Falcon-H1-0.5B from Technology Innovation Institute: huggingface.co/tiiuae/Falcon-…