Ansh Radhakrishnan

@anshrad

Researcher @AnthropicAI

ID: 1494503784004800517

18-02-2022 02:49:44

44 Tweets

551 Followers

2.2K Following

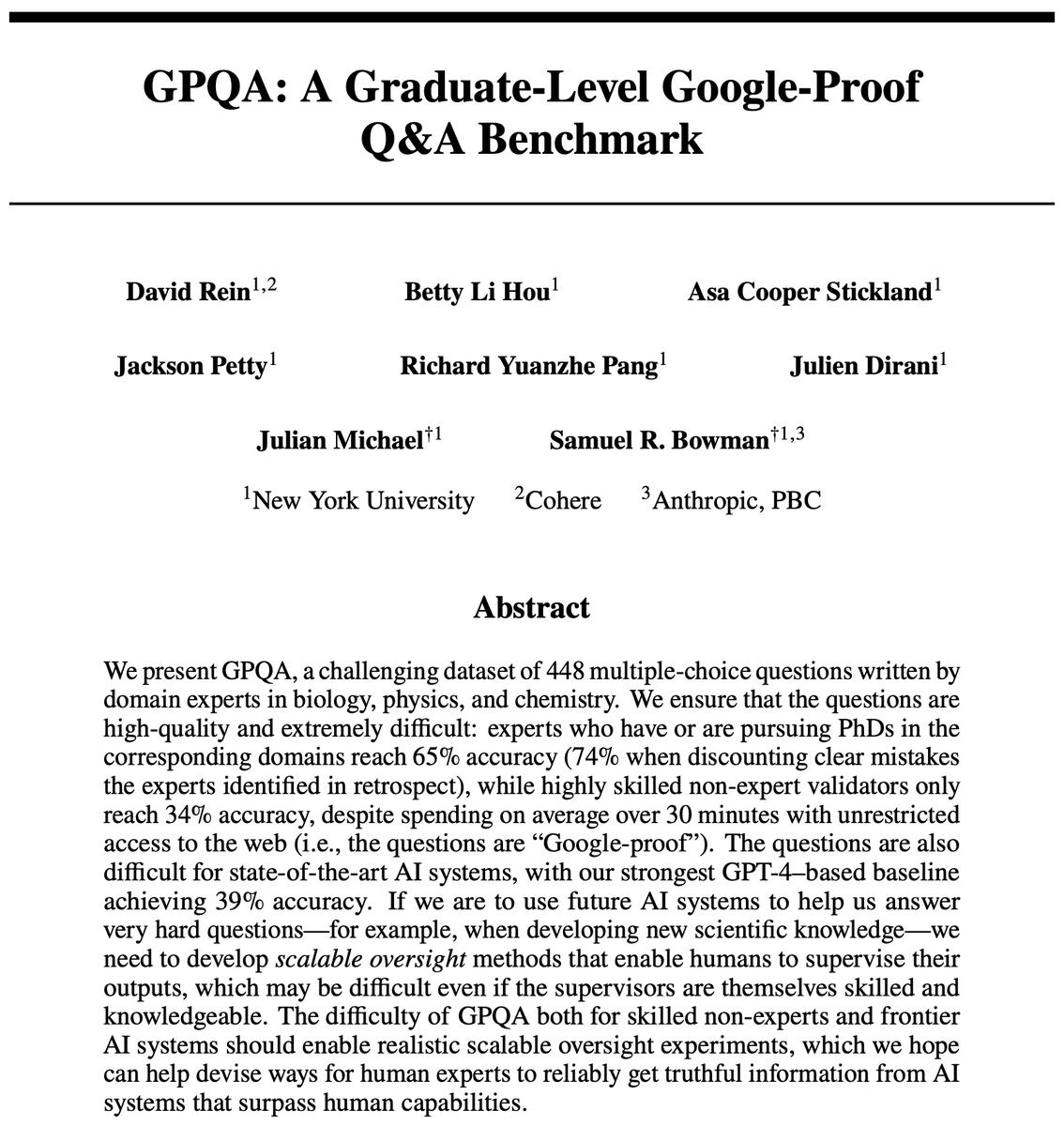

🧵Announcing GPQA, a graduate-level “Google-proof” Q&A benchmark designed for scalable oversight! w/ Julian Michael, Sam Bowman. GPQA is a dataset of *really hard* questions that PhDs with full access to Google can’t answer. Paper: arxiv.org/abs/2311.12022

We won a Best Paper award for our Debate paper at #ICML2024! What an amazing group of co-authors, it's been so great to work with them on this over the past year. ❤️ akbir., John Hughes, Laura Ruis, Tim Rocktäschel, Kshitij Sachan, Ansh Radhakrishnan, Edward Grefenstette, Sam Bowman, Ethan Perez