Sam Bowman

@sleepinyourhat

AI alignment + LLMs at NYU & Anthropic. Views not employers'. No relation to @s8mb. I think you should join @givingwhatwecan.

ID:338526004

https://cims.nyu.edu/~sbowman/

19-07-2011 18:19:52

2.2K Tweets

35.2K Followers

3.1K Following


We were very excited to see the publication of the Frontier Safety Framework from Google DeepMind! More companies sharing their concrete proposals for preparing for transformative capabilities from AI systems is great: it increases the concreteness of the options available to the

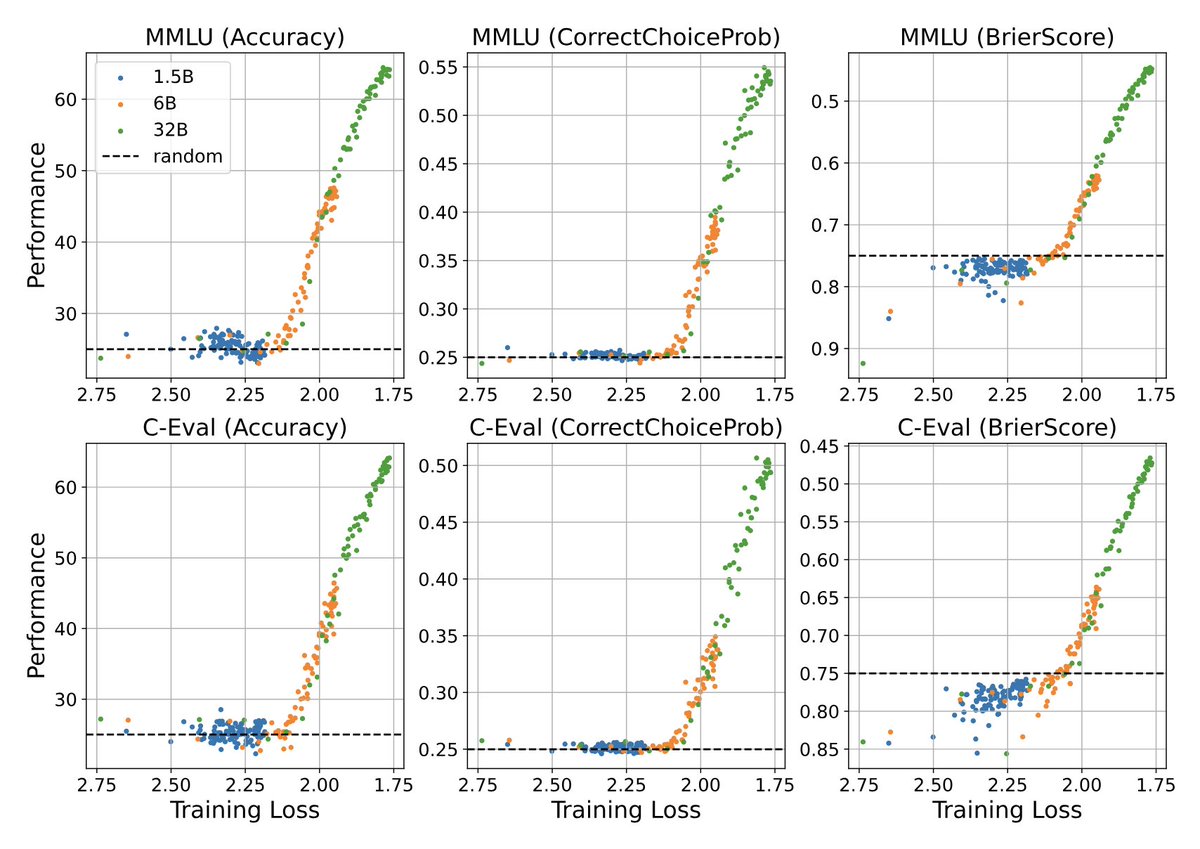

Enjoyed this paper that plots emergent abilities with pretraining loss on the x-axis, which is actually a suggestion that Oriol Vinyals also made a few years back: arxiv.org/abs/2403.15796

The paper uses intermediate checkpoints to plot a variety of pretraining losses. For some

I very distinctly remember, while I was in the thick of making GPQA, telling Robert Long that “I knew the project was going to be ambitious/hard, but I didn’t appreciate what that actually meant”

In retrospect I probably still would’ve done it, but we basically had to restart the

I’m super excited to release our 100+ page collaborative agenda - led by Usman Anwar - on “Foundational Challenges In Assuring Alignment and Safety of LLMs” alongside 35+ co-authors from NLP, ML, and AI Safety communities!

Some highlights below...