Rabiraj Banerjee

@rabirajbandyop1

NLP + CSS @gesis_org working on Hate Speech | ex Sr. Data Scientist @Coursera | Prev: MS in CS @UBuffalo

I aspire to use NLP for social media safety

ID: 1345873364561309697

03-01-2021 23:23:22

403 Tweets

100 Followers

269 Following

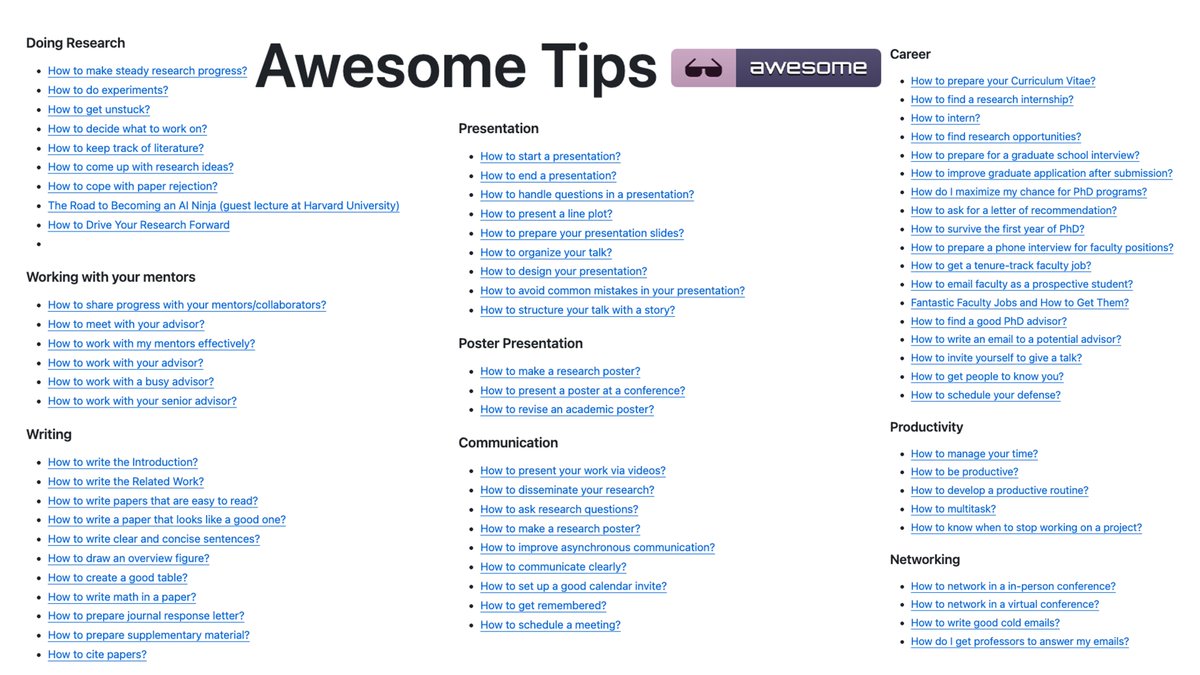

So You Want to Be an Academic? A couple of years into your PhD, but wondering: "Am I doing this right?" Most of the advice is aimed at graduating students. But there's far less for junior folks who are still finding their academic path. My candid takes: anandbhattad.github.io/blogs/jr_grads…

Teaching a new course at Stanford University this quarter on explainable AI, motivated by neuroscience. I have curated a paper list 4 pages long (link in comment). What are your favorite papers on explainable AI/mechanistic interpretability that I am missing? Please comment or DM. Thanks!

✨ New course materials: Interpretability of LLMs✨ This semester I'm teaching an active-learning grad course at Tel Aviv University on LLM interpretability, co-developed with my student Daniela Gottesman. We're releasing the materials as we go, so they can serve as a resource for anyone