Anand Bhattad

@anand_bhattad

Research Assistant Professor @TTIC_Connect | Visiting Researcher @berkeley_ai | PhD from @illinoisCS | UG @surathkal_nitk | Knowledge in Generative Models

ID: 309050073

https://anandbhattad.github.io/ 01-06-2011 13:04:21

948 Tweets

1.1K Followers

329 Following

All slides from our CV 20/20: A Retrospective Vision workshop at #CVPR2024 are now available on our website: sites.google.com/view/retrocv/s… A side note: We started thinking about and planning this workshop on the last day of #CVPR2023 after a very eventful Scholars and Big

We've released our code and weights for weights2weights. Check out our demo on Hugging Face🤗 powered by Gradio. Code: github.com/snap-research/… Weights: huggingface.co/snap-research/… Demo: huggingface.co/spaces/snap-re… Thanks Linoy Tsaban🎗️ apolinario 🌐 for the collab!

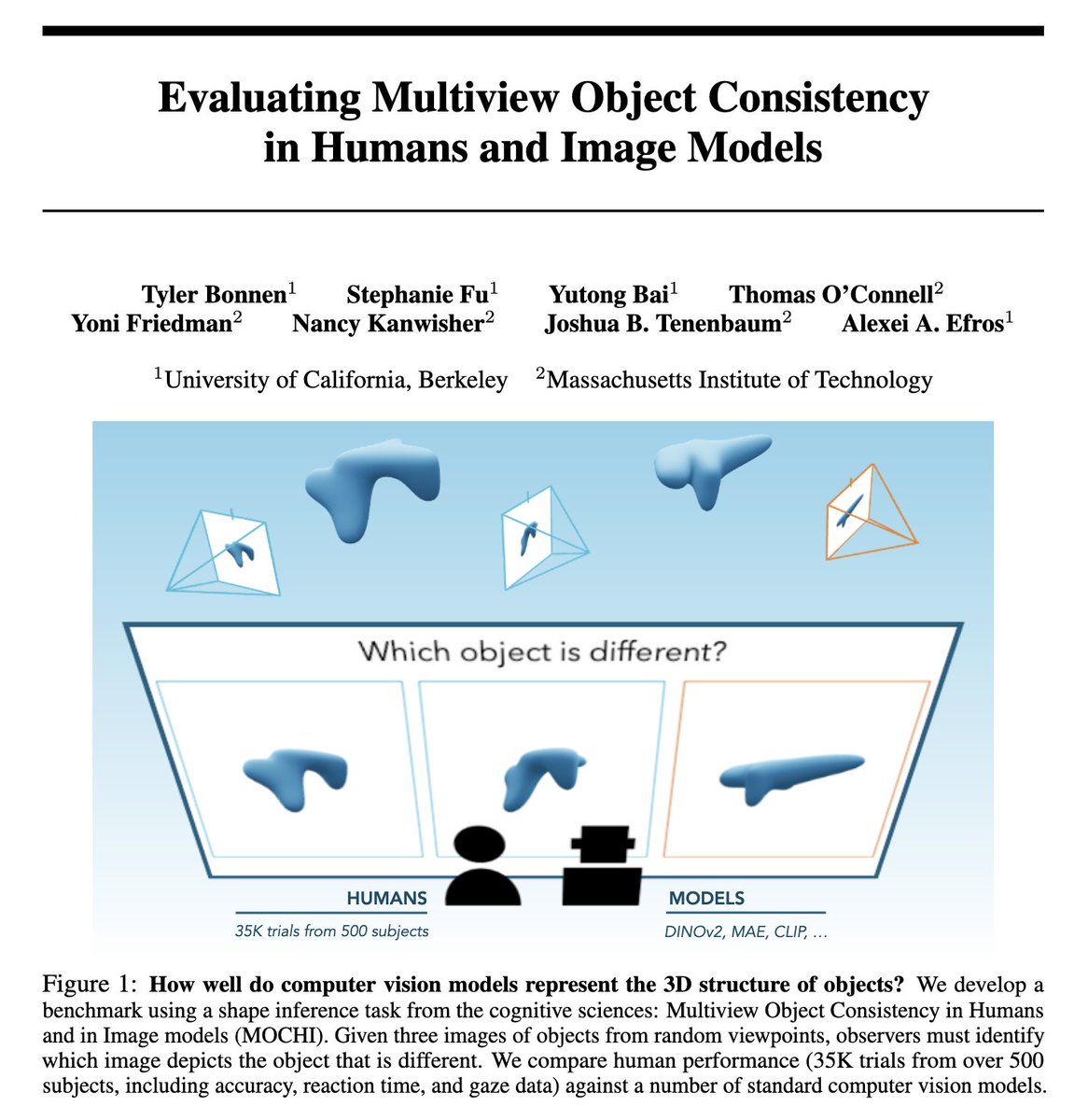

do large-scale vision models represent the 3D structure of objects? excited to share our benchmark: multiview object consistency in humans and image models (MOCHI) with Stephanie Fu Yutong Bai Thomas O'Connell Yoni Friedman Nancy Kanwisher @[email protected] Josh Tenenbaum and Alexei Efros 1/👀