Critical AI : first issue out! https://read.dukeup

@CriticalAI

Critical AI's first issue out: https://read.dukeupress ! Follow us here or @mastodon.social. Our editor often tweets from this acct on weekends.

ID:1290793547122302977

https://criticalai.org/ 04-08-2020 23:35:48

17,9K Tweets

5,0K Followers

1,6K Following

Very much enjoyed James E. Dobson’s excellent book, and pleased to be invited to review it.

Interesting legislation: the Generative AI Copyright Disclosure Act, recently introduced by California Democratic Congressman Adam Schiff.

H/T Bruna Trevelin, who helped me understand some key points. 🧵 1/

schiff.house.gov/imo/media/doc/…

Though I know what you mean, Ted McCormick, I'm not sure that's a helpful way of thinking about gen AI's 'innovation.'

The tech isn't a 'poser' trying hard to talk the talk to sound smarter or cooler.

It's a bell-curve-like function assembling the most probable concepts/words.

Nicely argued opinion essay in The New York Times on the abuse of tech devices in K-12 classrooms. Interesting comments too.

nytimes.com/2024/04/10/opi…

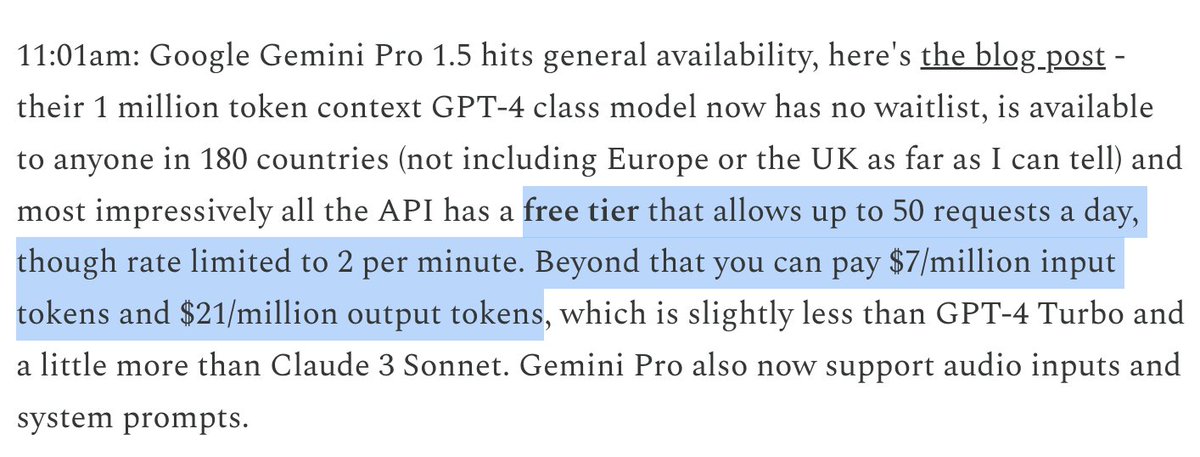

This is the craziest example of the ongoing AI frenzy I've ever seen. As far as I can tell, this means that a single developer can make queries that burn $1,400 worth of compute for free *per day* (e.g. 'reverse the following 1M-token string'): 50 × (7 + 21) = 1,400.
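The arithmetic above can be reproduced in a few lines of Python. This is a minimal sketch under loudly stated assumptions: the tweet does not say what 50, 7 and 21 stand for, so the code assumes 50 free requests per day, list prices of $7 per million input tokens and $21 per million output tokens, and about 1M tokens in and 1M tokens out per request (as in the 'reverse a 1M-token string' example). The constant and function names are hypothetical.

```python
# Back-of-the-envelope check of the tweet's arithmetic.
# ASSUMPTIONS (not stated in the tweet): 50 free requests/day,
# $7 per 1M input tokens, $21 per 1M output tokens, ~1M tokens in and out per request.

FREE_REQUESTS_PER_DAY = 50        # hypothetical daily free-tier request cap
INPUT_PRICE_PER_M_TOKENS = 7.0    # hypothetical USD per 1M input tokens
OUTPUT_PRICE_PER_M_TOKENS = 21.0  # hypothetical USD per 1M output tokens


def daily_compute_value(requests: int, m_tokens_in: float, m_tokens_out: float) -> float:
    """Dollar value of the compute used if every request were billed at the assumed list prices."""
    per_request = (m_tokens_in * INPUT_PRICE_PER_M_TOKENS
                   + m_tokens_out * OUTPUT_PRICE_PER_M_TOKENS)
    return requests * per_request


print(daily_compute_value(FREE_REQUESTS_PER_DAY, 1.0, 1.0))  # 1400.0
```

Each request is priced as input cost plus output cost, which is why the tweet adds 7 and 21 before multiplying by the number of requests.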

HT Simon Willison

#CriticalAI editorial collective member Christopher Newfield has a great blog post out on the crisis in creating and disseminating knowledge. Research funding is migrating away from the basics and from much-needed social and cultural knowledge.

isrf.org/2024/04/03/the…

My first Director's Note for ISRF: the knowledge crisis in economics, including the moment Chris Giles of the Financial Times tried to bury the Laffer curve.

isrf.org/2024/04/03/the…

Very excited to join Rutgers Food Science for a talk Fri 4/12 at 3 pm. I'm hopeful that Critical AI team members might be able to join. The topic is 'Towards Practical, Critical and Cooperative Teaching & Learning with AI.'