Christopher Potts

@chrisgpotts

Stanford Professor of Linguistics and, by courtesy, of Computer Science, and member of @stanfordnlp and @StanfordAILab. He/Him/His.

ID: 408714449

http://web.stanford.edu/~cgpotts/ 09-11-2011 19:59:28

2.2K Tweets

12.12K Followers

612 Following

SmolLM3 uses the APO preference loss! Karel D’Oosterlinck, great to see APO getting more adoption!

i forgot the whole point of saying you're at a conference is to advertise your poster. please come check out AxBench by Zhengxuan Wu*, me*, et al. on Tuesday, 15 July, from 11 AM to 1:30 PM

Overdue job update -- I am now:
- A Visiting Scientist at Schmidt Sciences, supporting AI safety and interpretability
- A Visiting Researcher at the Stanford NLP Group, working with Christopher Potts

I am so grateful I get to keep working in this fascinating and essential area, and

ICML ✈️ this week. open to chat and learn mech interp from you. Aryaman Arora and i have cool ideas about steering, just come to our AxBench poster. new steering blog: zen-wu.social/steer/index.ht… Chinese version: zen-wu.social/steer/cn_index…

Excited to take part in TEDAI San Francisco this fall!

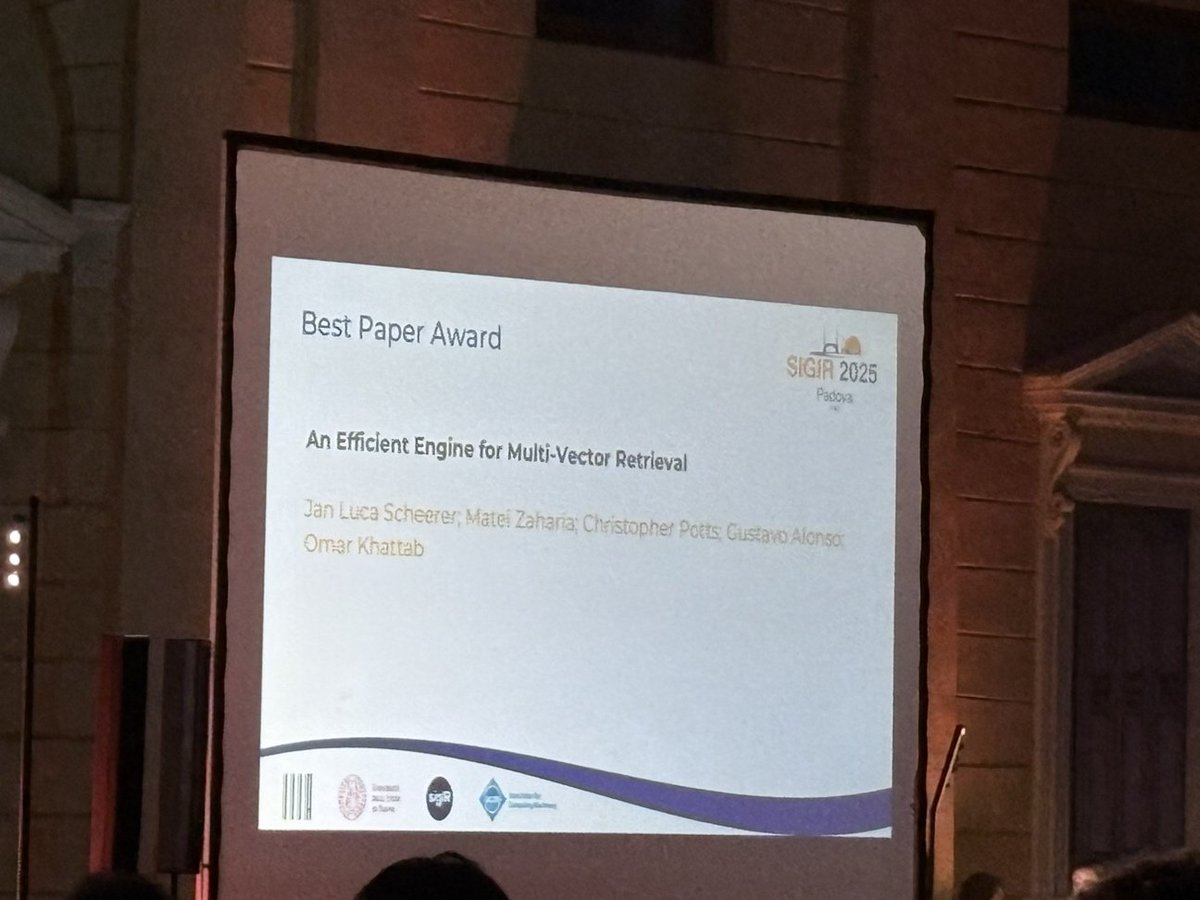

The #SIGIR2025 Best Paper just awarded to the WARP engine for fast late interaction! Congrats to Luca Scheerer🎉 WARP was his ETH Zurich MS thesis, completed while visiting us at @StanfordNLP. Incidentally, it's the fifth Paper Award for a ColBERT paper since 2020!* Luca did an

We're presenting the Mechanistic Interpretability Benchmark (MIB) now! Come and chat - East 1205. Project led by Aaron Mueller, Atticus Geiger, and Sarah Wiegreffe

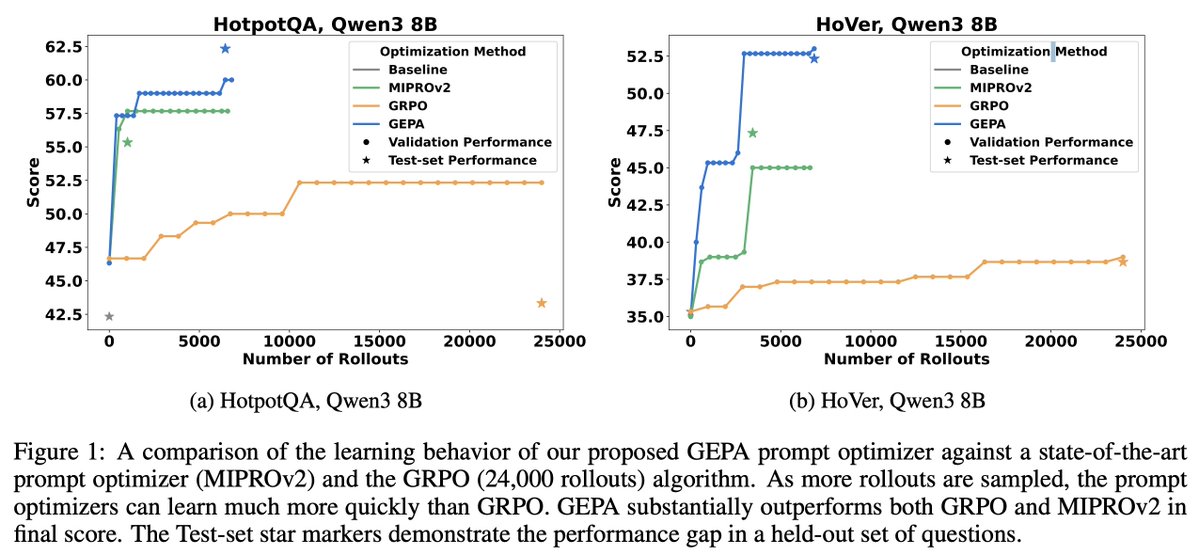

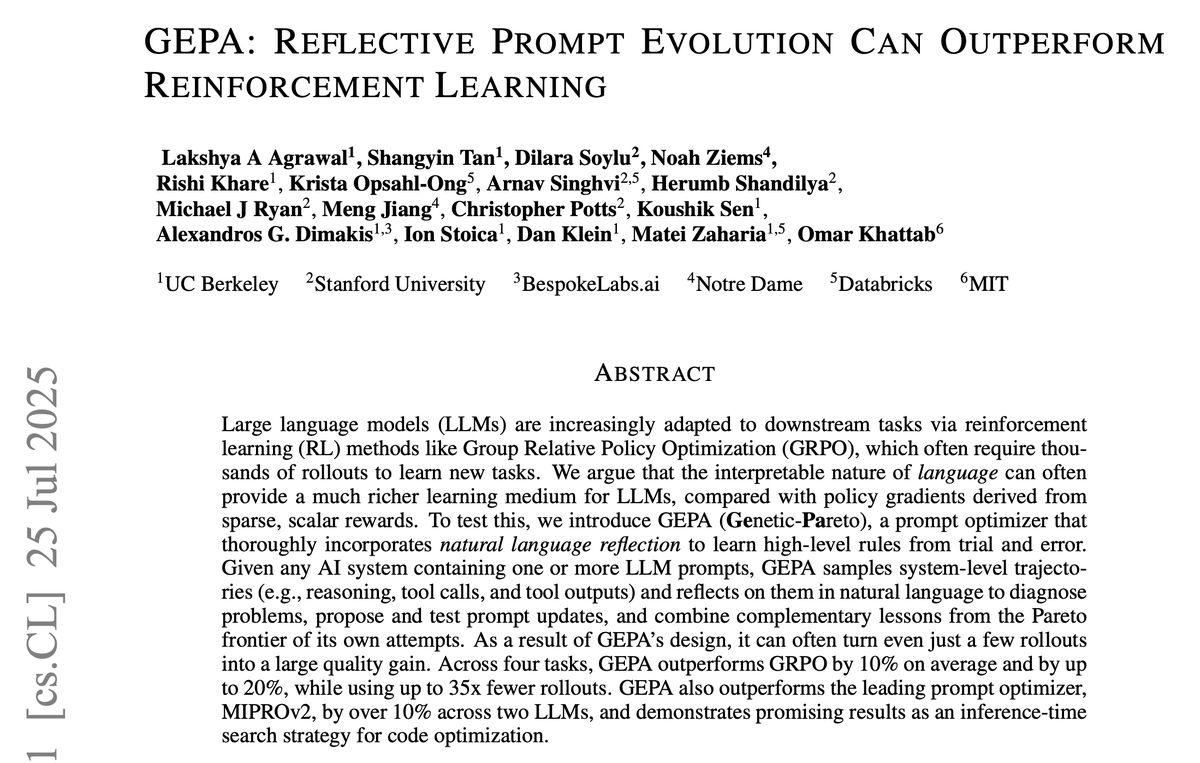

Paper: arxiv.org/abs/2507.19457 GEPA will be open-sourced soon as a new DSPy optimizer. Stay tuned! Incredibly grateful to the wonderful team: Shangyin Tan, Dilara Soylu, Noah Ziems, Rishi Khare, Krista Opsahl-Ong, Arnav Singhvi, Herumb Shandilya, Michael Ryan @ ACL 2025 🇦🇹, Meng Jiang, Christopher Potts

Mike Taylor The better we make models at following prompts, the more impact prompts should have, right?

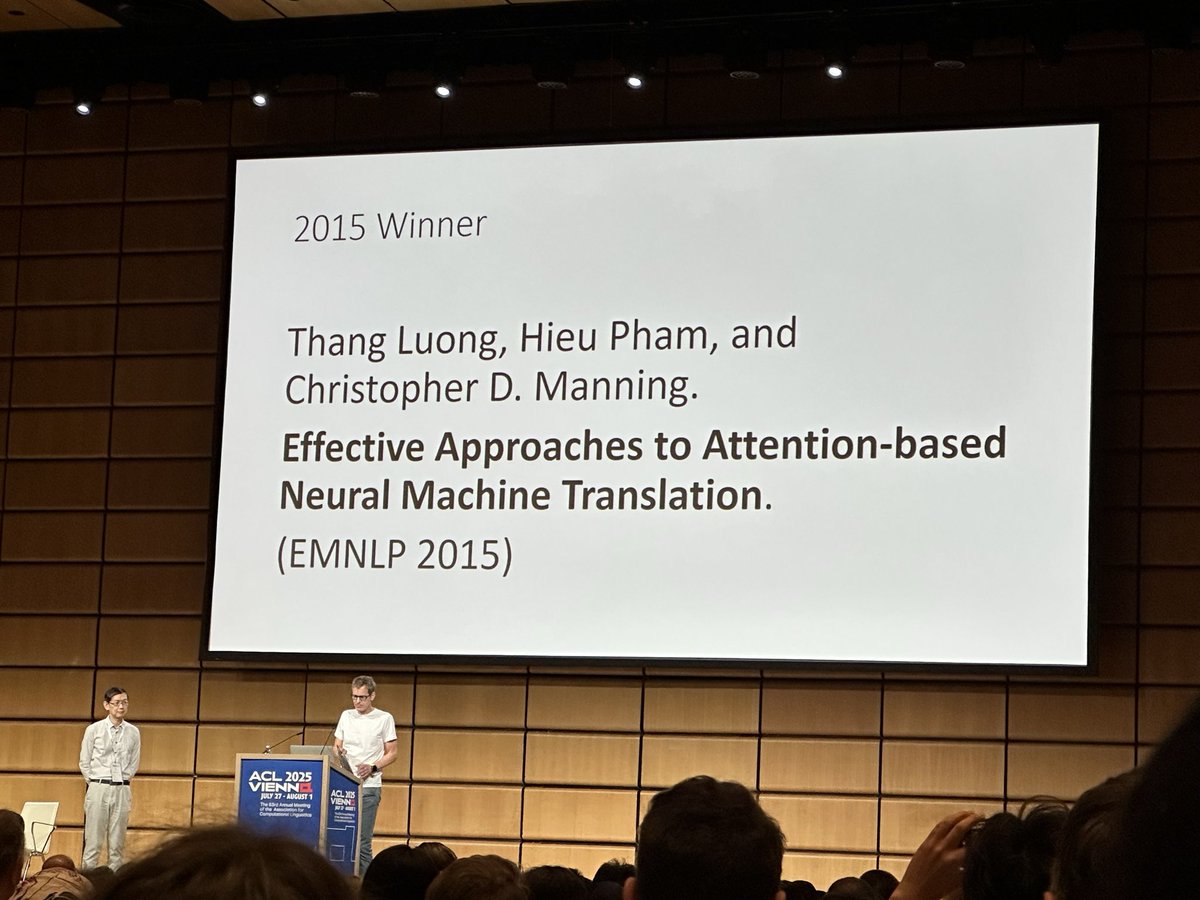

The Stanford NLP Group founders won both ACL 2025 test of time awards: ▪25 yrs: Gildea & Dan Jurafsky, Automatic Labeling of Semantic Roles aclanthology.org/P00-1065/ ▪10 yrs: Thang Luong, Hieu Pham & Christopher Manning, Effective Approaches to Attention-based NMT aclanthology.org/D15-1166/