Christopher Potts

@ChrisGPotts

Stanford Professor of Linguistics and, by courtesy, of Computer Science, and member of @stanfordnlp and @StanfordAILab. He/Him/His.

ID:408714449

http://web.stanford.edu/~cgpotts/ 09-11-2011 19:59:28

1.8K Tweets

10.9K Followers

621 Following

We are thrilled that Isabel Papadimitriou will be joining UBC Linguistics as an Assistant Professor as of Sept 2025!

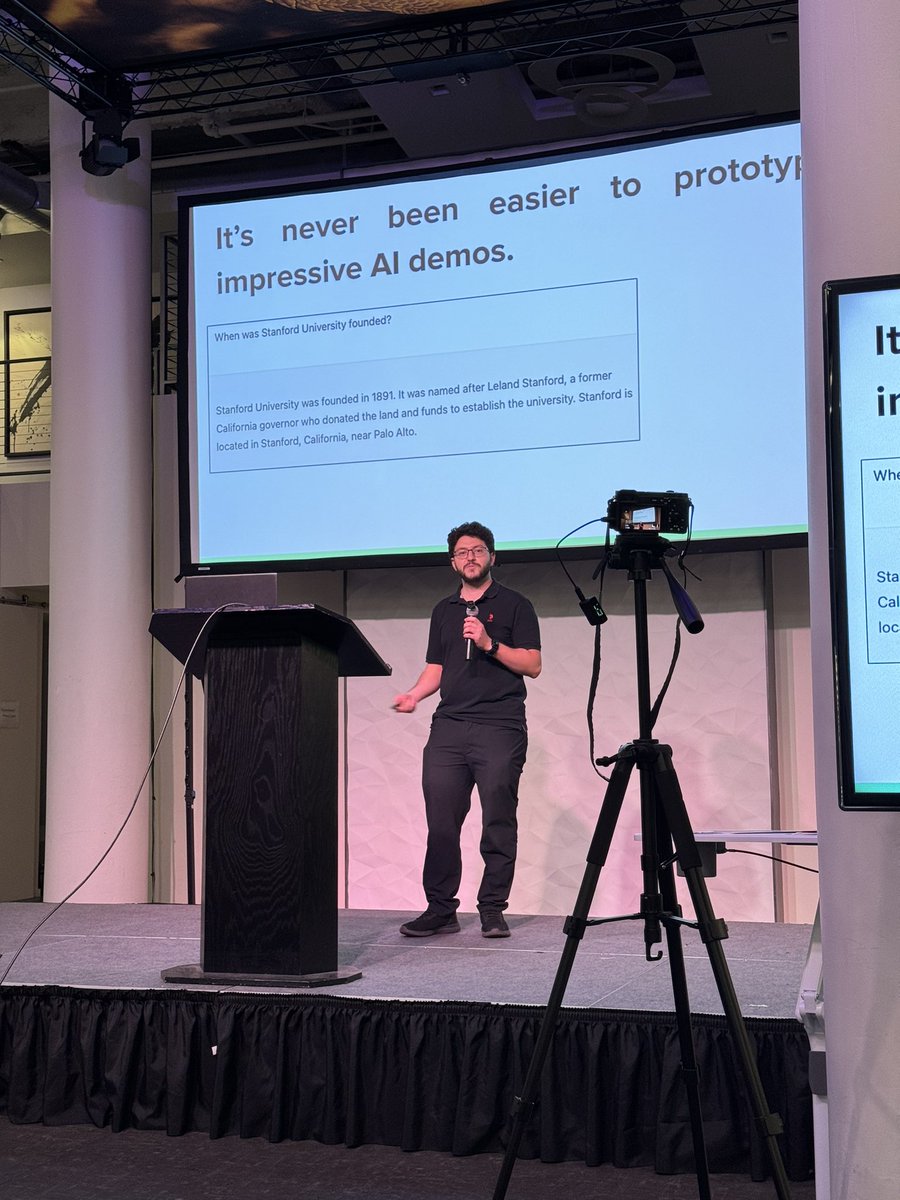

At ICLR? Don't miss the DSPy spotlight poster on Wednesday 4:30 PM (GMT+2).

The DSPy team at ICLR will be represented by Keshav Santhanam and Krista Opsahl-Ong.

I won't be there in person, but I might join the session on an iPad! LMK if you'd like to e-meet!

And the paper Omar Khattab has been waiting for: we indexed ClueWeb09 and NeuCLIR1 (NeuCLIR Track 🔎 TREC 2024) with a hierarchical indexing scheme.

We use it to simulate the case where a firehose of docs is streaming in and you want to search them as they arrive.

arxiv.org/abs/2405.00975

4/?

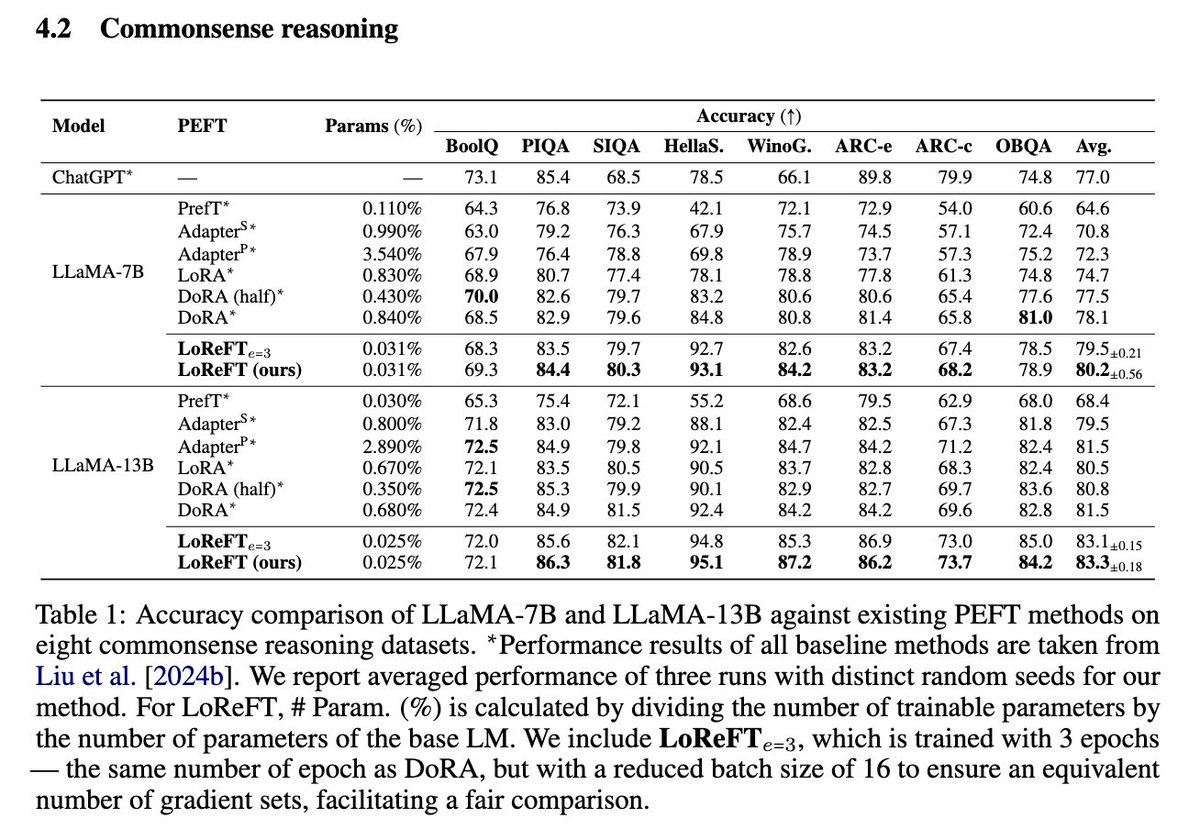

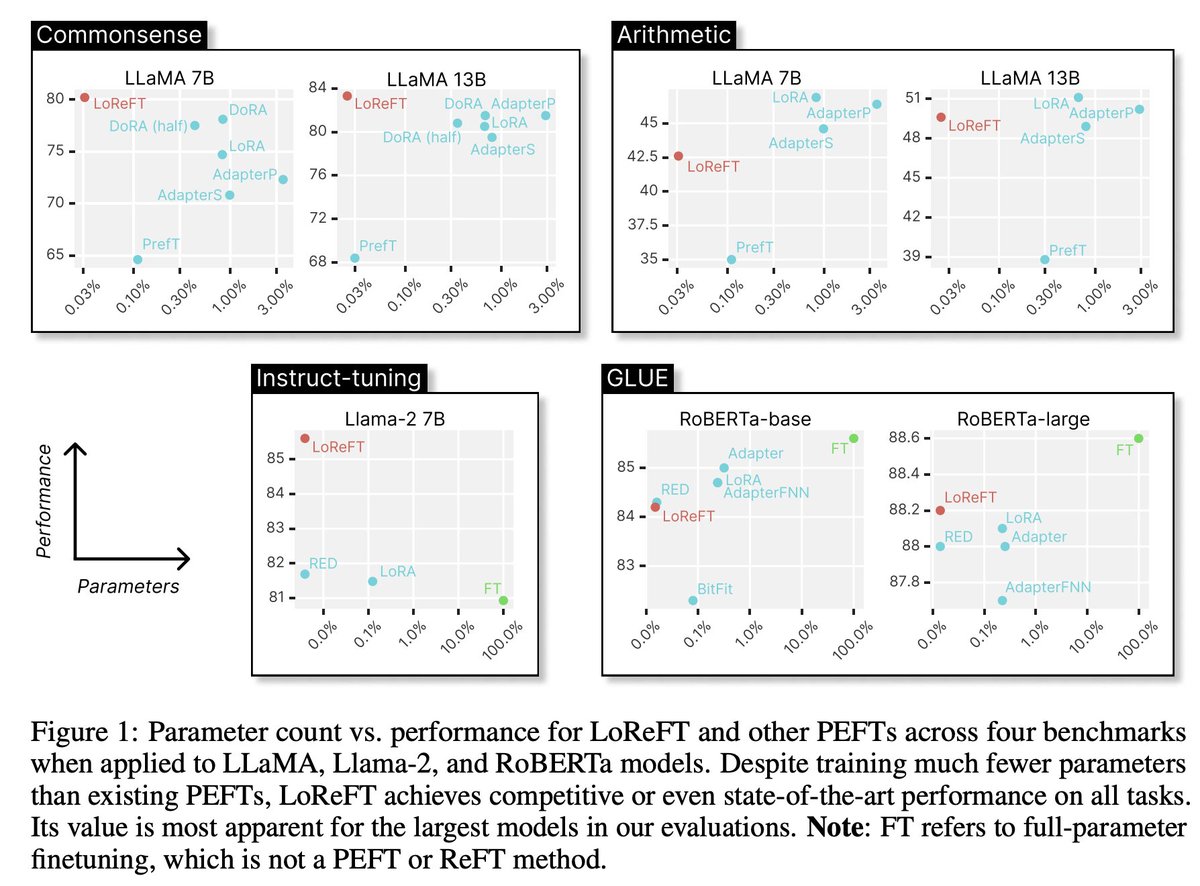

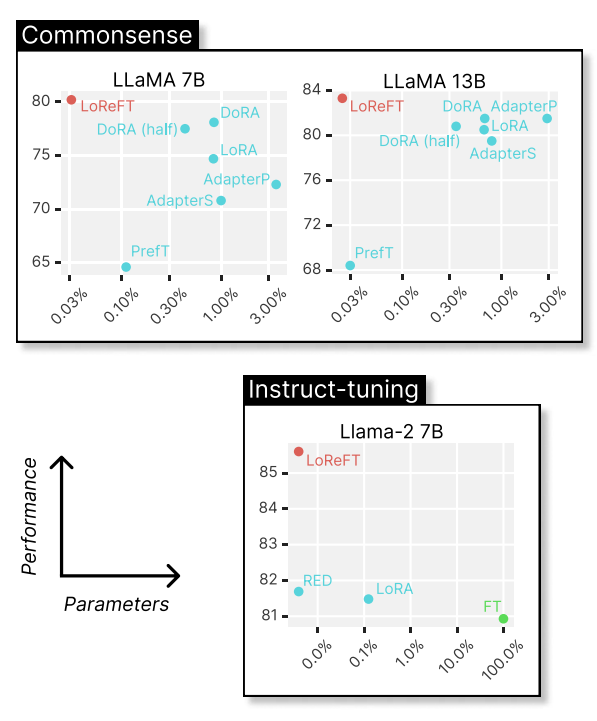

❊Very Few Parameter Fine-tuning w/ ReFT and LoRA❊

And thanks to Zhengxuan Wu of Stanford NLP Group / Stanford AI Lab for the great work on the ReFT library!

TIMESTAMPS:

0:00 ReFT and LoRA Fine-tuning with few parameters

0:42 Video Overview

1:59 Transformer Architecture Review…

Oh, look, I hear there's a DSPy meetup in San Francisco on Wednesday (May 1st) from 5:30 PM to 8:30 PM with Arize Phoenix, Cohere, and Weaviate (vector database).

See you there, everyone!

This week at CARE (Thursday 11am EST), Atticus Geiger is presenting his fantastic line of work (with Christopher Potts, Thomas Icard, Noah Goodman, and others) on finding causal abstractions of large language models. Details here: portal.valencelabs.com/events/post/un…

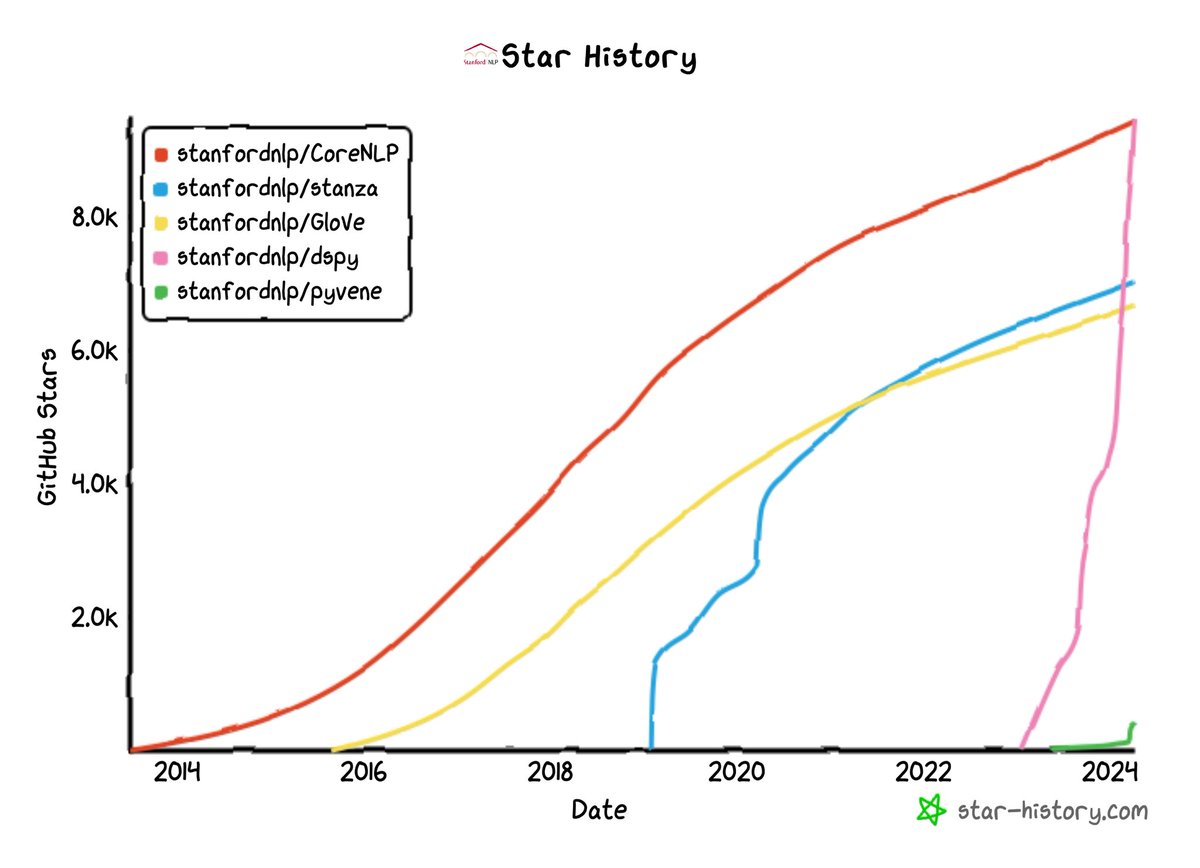

After a meteoric rise, DSPy is now the Stanford NLP Group repository with the most GitHub stars. Big congratulations to Omar Khattab and his “team”.

DSPy: Programming—not prompting—Foundation Models

github.com/stanfordnlp/ds…