Lucy Li

@lucy3_li

@UCBerkeley PhD student + @allen_ai. Human-centered #NLProc, computational social science, AI fairness. she/her. https://t.co/rtSSUhWQnL

ID:861417356756533248

http://lucy3.github.io 08-05-2017 03:07:57

3.0K Tweets

4.1K Followers

1.5K Following

📢 The Gates Foundation has posted a Request for Information on AI-Powered Innovations in Mathematics Teaching & Learning

usprogram.gatesfoundation.org/news-and-insig…

If you are working at the intersection of AI & math education, especially approaches that center equity, consider responding!

🌟Several dataset releases deserve a mention for their incredible data measurement work 🌟

➡️ The Pile (arxiv.org/abs/2101.00027) Leo Gao Stella Biderman

➡️ ROOTS (arxiv.org/abs/2303.03915) Hugo Laurençon++

➡️ Dolma (arxiv.org/abs/2402.00159) Luca Soldaini 🎀 Kyle Lo

14/

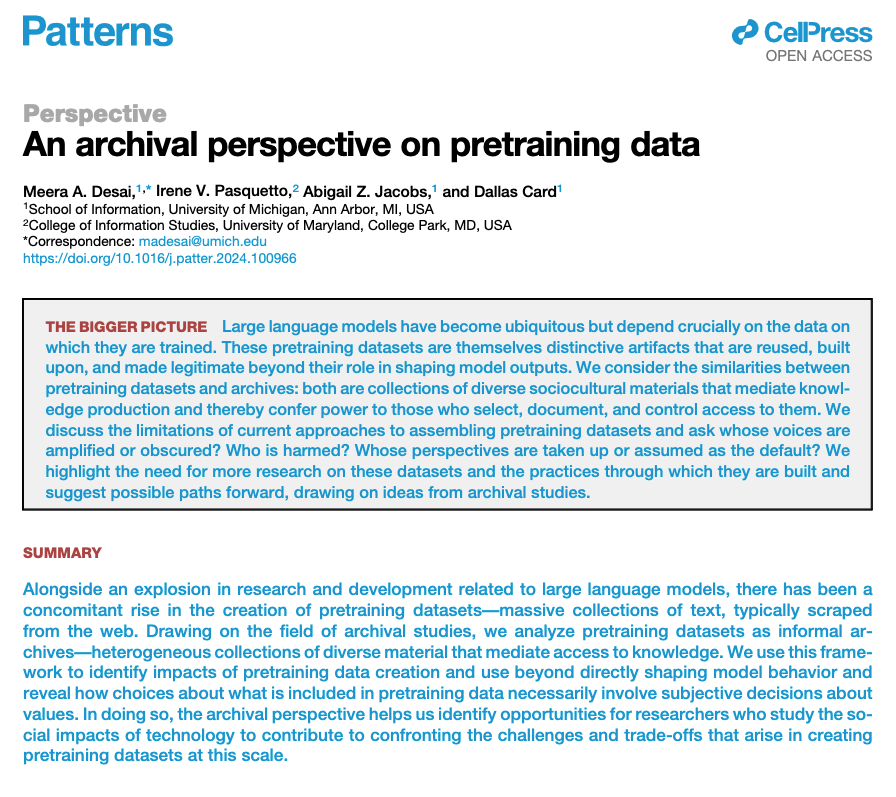

I'm excited to share that the journal version of our paper, 'An archival perspective on pretraining data', is now available (open access) from Patterns!

This project was led by Meera Desai, along with Irene Pasquetto, Abigail Jacobs, and myself

1/n

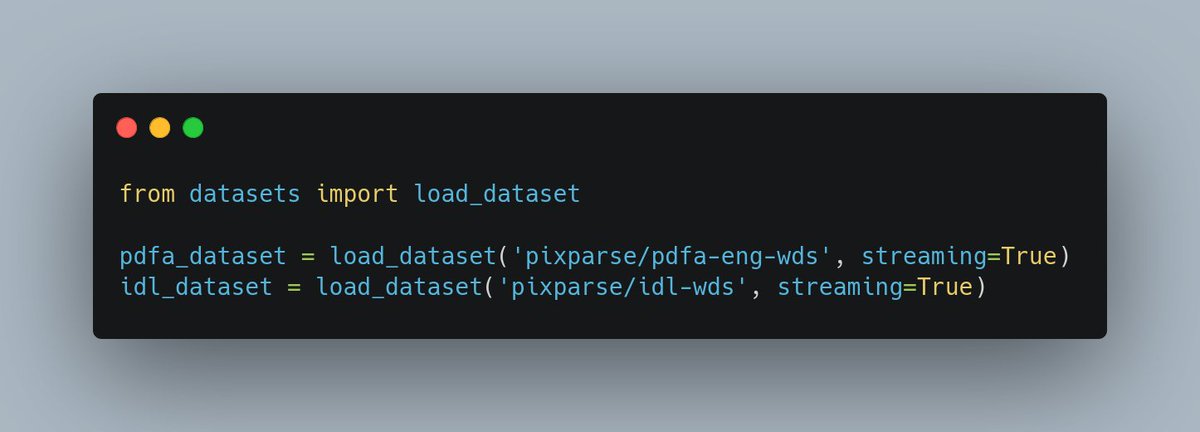

It was hard to find quality OCR data... until today! Super excited to announce the release of the 2 largest public OCR datasets ever 📜 📜

OCR is critical for document AI: here, 26M+ pages, 18B text tokens, 6TB! Thanks to UCSF Library, Industry Documents Library and PDF Association

🧶 ↓

![Jiayi Pan (@pan_jiayipan) on Twitter photo 2024-04-10 16:07:22 New paper from @Berkeley_AI on Autonomous Evaluation and Refinement of Digital Agents! We show that VLM/LLM-based evaluators can significantly improve the performance of agents for web browsing and device control, advancing sotas by 29% to 75%. arxiv.org/abs/2404.06474 [🧵]](https://pbs.twimg.com/media/GK0M2S8bEAAduvw.jpg)