Steven Wu

@zstevenwu

Computer science prof at Carnegie Mellon @SCSatCMU. Researcher in algorithms and machine learning. bsky.app/profile/zsteve…

ID: 185379141

http://zstevenwu.com

31-08-2010 21:12:03

510 Tweets

2.2K Followers

666 Following

A dream I've had for five years is finally coming true: I'll be co-teaching a course next semester on the algorithmic foundations of imitation learning / RLHF with my advisors, Drew Bagnell and Steven Wu! Sign up if you're at CMU (17-740) or follow along at interactive-learning-algos.github.io!

We’ve released updated 2024 lectures from my Stanford RL class, CS234! shorturl.at/pf427 This includes a guest lecture on Direct Preference Optimization (DPO) from first authors Rafael Rafailov, Archit Sharma, and Eric: tinyurl.com/3kr4czth. Thanks to Stanford Online!

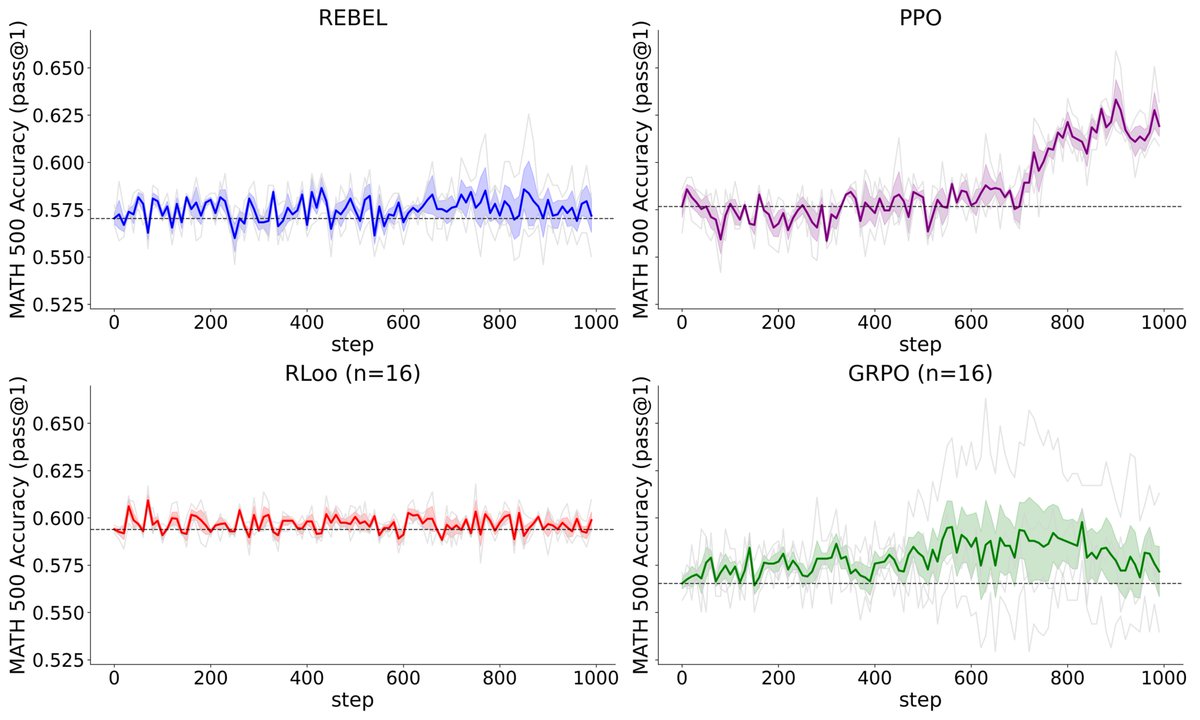

If you'd like to avoid the bends, the TL;DR is that RL lets us filter down our search space to only those policies that are optimal for relatively simple verifiers. 📰: arxiv.org/abs/2503.01067. Joint w/ the all-star cast of Sanjiban Choudhury, Wen Sun, Steven Wu, and Drew Bagnell. [2/n]

I was lucky enough to be invited to give a talk on our new paper on the value of RL in fine-tuning at Cornell University last week! Because of my poor time-management skills, the talk isn't as polished as I'd like, but I think the "vibes" are accurate enough to share: youtu.be/E4b3cSirpsg.

blog.ml.cmu.edu/2025/04/18/llm… 📈⚠️ Is your LLM unlearning benchmark measuring what you think it is? In a new blog post authored by Pratiksha Thaker, Shengyuan Hu, Neil Kale, Yash Maurya, Steven Wu, and Virginia Smith, we discuss why empirical benchmarks are necessary but not

blog.ml.cmu.edu/2025/05/22/unl… Are your LLMs truly forgetting unwanted data? In this new blog post authored by Shengyuan Hu, Yiwei Fu, Steven Wu, and Virginia Smith, we discuss how benign relearning can jog an unlearned LLM's memory and recover knowledge that is supposed to be forgotten.

blog.ml.cmu.edu/2025/06/01/rlh… In this in-depth coding tutorial, Zhaolin Gao and Gokul Swamy walk through the steps to train an LLM via RL from Human Feedback!

The FORC 2026 call for papers is out! responsiblecomputing.org/forc-2026-call… Two reviewing cycles with two deadlines: Nov 11 and Feb 17. If you haven't been, FORC is a great venue for theoretical work in "responsible AI": fairness, privacy, social choice, CS&Law, explainability, etc.