Zongze Wu

@zongze_wu

computer vision researcher

ID: 1361740007095095296

16-02-2021 18:11:38

60 Tweets

177 Followers

118 Following

[1/n] I am happy to share that our GAN Cocktail paper was accepted to #ECCV2022, the European Conference on Computer Vision! Many thanks to my great supervisors Dani Lischinski and Ohad Fried! The project page is available at: omriavrahami.com/GAN-cocktail-p…

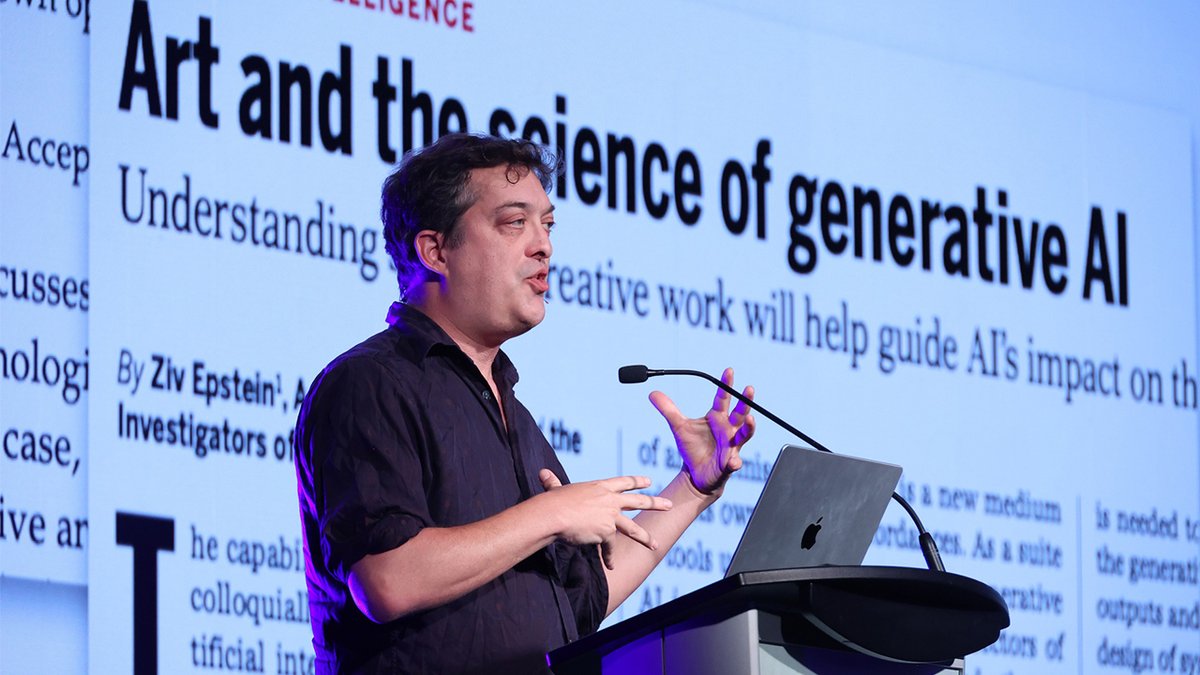

Adobe Research Principal Scientist Aaron Hertzmann won the Computer Graphics Achievement Award from @SIGGRAPH, one of the highest honors in the field! Learn about his new theory of perception, and his work at the intersection of art and computer graphics. adobe.ly/46iiX7X

TurboEdit can invert an image in 1s, and each following edit only takes 0.5s. Work w/ Richard Zhang, Nick Kolkin, Jon Brandt, Eli Shechtman Webpage: betterze.github.io/TurboEdit/ Paper: arxiv.org/abs/2408.08332… Video: youtube.com/watch?v=1LG2xC…

We find that the StyleCLIP global direction method works reasonably well for editing human clothing. Feel free to play with it [here](github.com/orpatashnik/St…).

![image](https://pbs.twimg.com/media/FgWoLYIXkAAa_4a.jpg)