Yannis Flet-Berliac

@yfletberliac

Post-training & RL Research @cohere | Postdoc @Stanford | PhD @Inria (Sequel/Scool) | Associate Program Chair @icmlconf’23

ID: 1465200073

https://cs.stanford.edu/~yfletberliac

28-05-2013 17:00:10

334 Tweets

760 Followers

950 Following

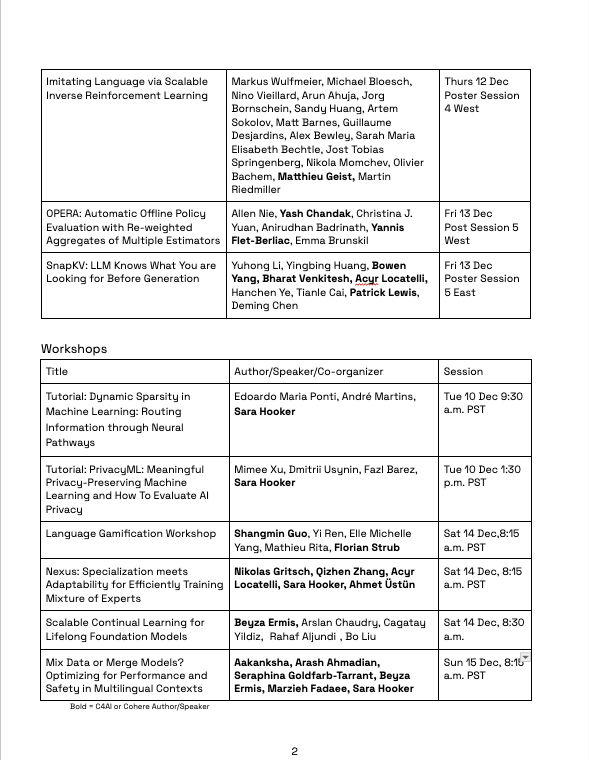

Everyone is on their way to Vancouver for NeurIPS Conference 2024! ✈️ We are looking forward to connecting with everyone! 🤗 Here is where you can find us:

Time to prove that I'm not just riding the LLM bandwagon: presenting work w/ Yash Chandak, Yannis Flet-Berliac, Christina, Ani, and Emma Brunskill on offline RL policy evaluation. Friday, Dec 13, 11am-2pm. Poster location: West Ballroom A-D. Stanford AI Lab, Stanford HAI

Command R7B is open-weights. ✨ Try it on the Cohere For AI Hugging Face space or download it directly here: huggingface.co/CohereForAI/c4…

Check out our new vision model! Now available on our platform and coming soon to Hugging Face for research use.