Wenyan Li

@wenyan62

PhD student at the CoAStaL NLP Group, University of Copenhagen. Former researcher at Comcast AI and SenseTime.

ID: 1303507887315185665

http://wenyanli.org 09-09-2020 01:38:01

26 Tweets

204 Followers

189 Following

It is only rarely that, after reading a research paper, I feel like giving the authors a standing ovation. But I felt that way after finishing Direct Preference Optimization (DPO) by Rafael Rafailov @ NeurIPS Archit Sharma Eric Stefano Ermon Christopher Manning and Chelsea Finn. This

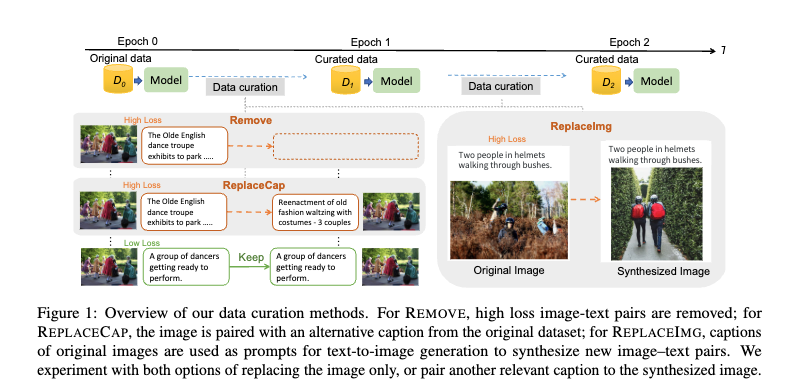

Happy to share that our paper "The Role of Data Curation in Image Captioning" has been accepted to the #EACL2024 main conference! Thanks to our co-authors Jonas Færch Lotz and Desmond Elliott! We will update the preprint and release the code soon! See you in Malta 🏖️🏖️

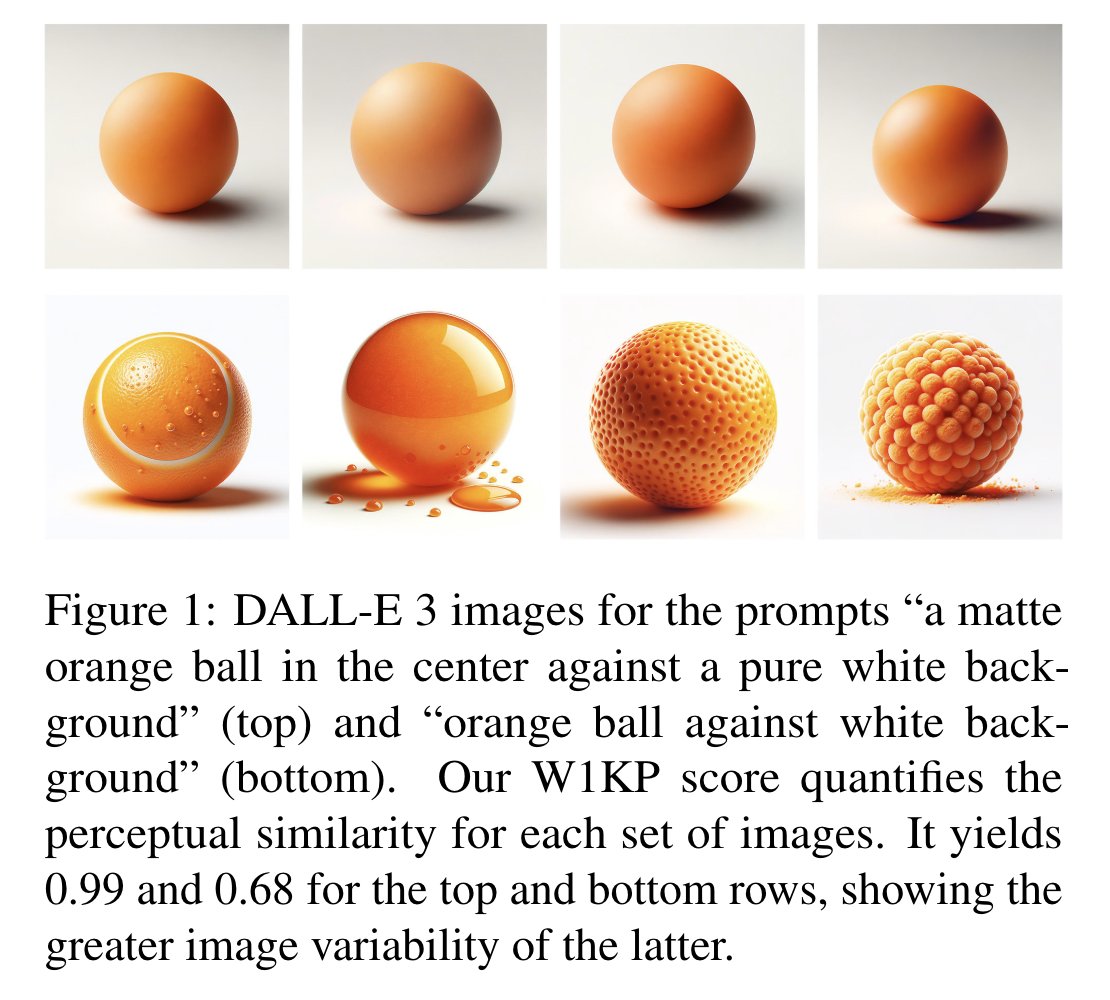

They say a picture is worth a thousand words... but work led by ralphtang.eth finds words worth a thousand pictures! arxiv.org/abs/2406.08482

📣📣 Thrilled to share that I’ll present our paper “Understanding Retrieval Robustness for Retrieval-Augmented Image Captioning” at #ACL2024!! arxiv.org/abs/2406.02265 See you in Bangkok 🌴🌴🌴 Kudos to our coauthors ❤️ @JIAANGLI Rita Ramos ralphtang.eth Desmond Elliott

Our paper on understanding variability in text-to-image models was accepted at #EMNLP2024 main track! Lots of thanks to my collaborators Xinyu Crystina Zhang, Yao Lu, Wenyan Li, Ulie Xu and mentors Jimmy Lin, Pontus, Ferhan Ture. Check out w1kp.com

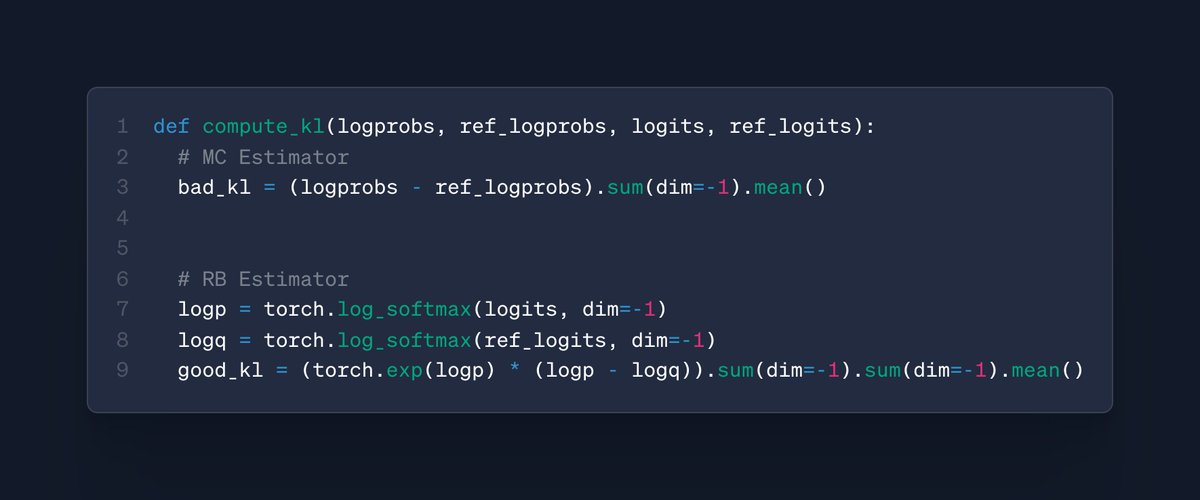

Current KL estimation practices in RLHF can generate high variance and even negative values! We propose a provably better estimator that only takes a few lines of code to implement.🧵👇 w/ Tim Vieira and Ryan Cotterell paper: arxiv.org/pdf/2504.10637 code: github.com/rycolab/kl-rb