vislang.ai

@vislang

Twitter account of the Vision, Language and Learning Lab, Computer Science @ Rice University.

ID: 1270579924169035776

https://www.vislang.ai 10-06-2020 04:54:08

86 Tweets

3.3K Followers

1.1K Following

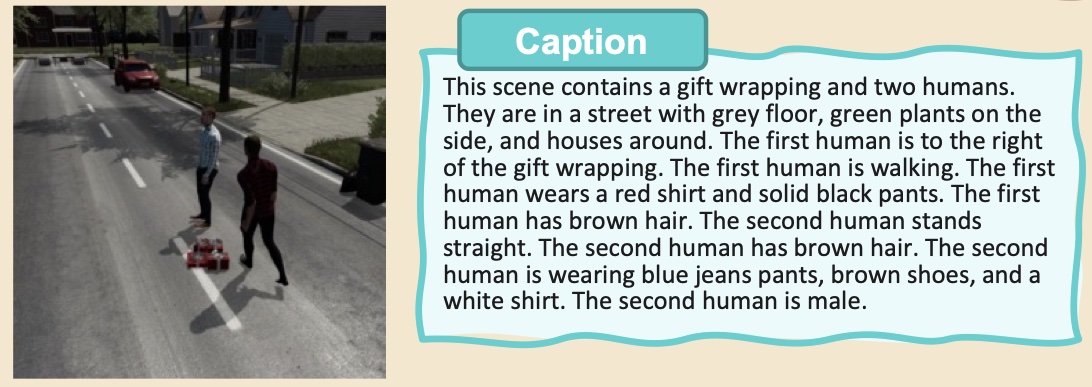

#ICCV2023, Fri Oct 6 (PM): "Going Beyond Nouns With Vision & Language Models Using Synthetic Data" by Cascante, Shehada, James Smith, Sivan Doveh, Kim, Rameswar Panda, Gül Varol, Aude Oliva, vislang.ai, Rogerio Feris, Leonid Karlinsky. pdf: arxiv.org/abs/2303.17590 web: synthetic-vic.github.io

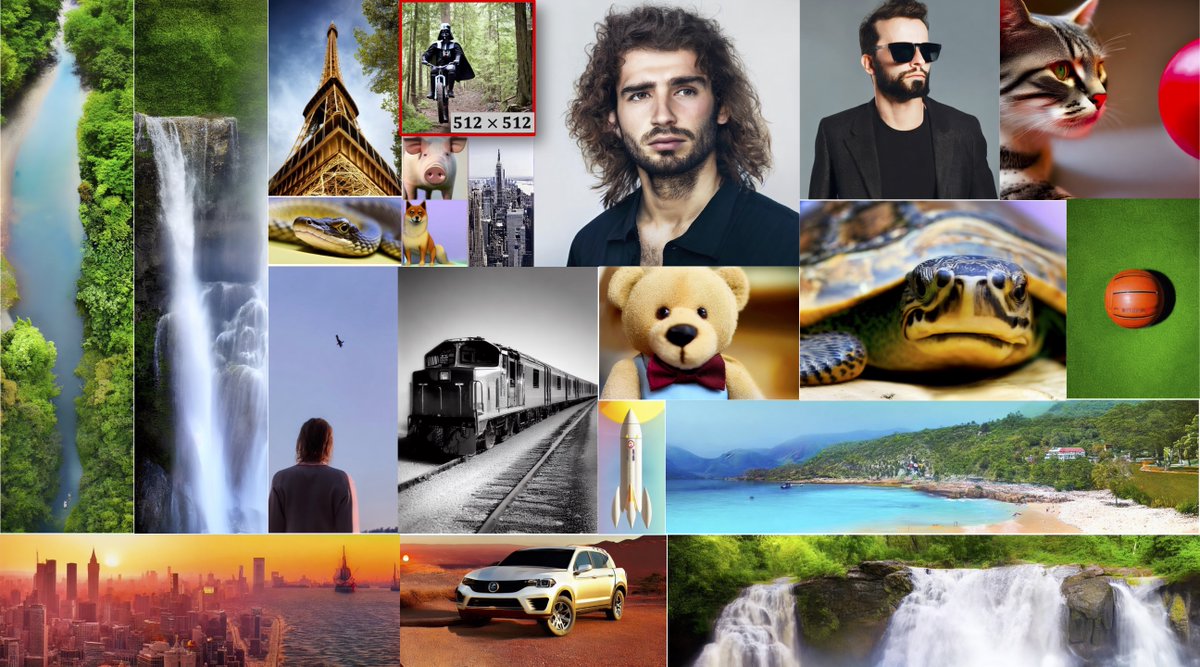

Rice CS PhD student Paola Cascante-Bonilla introduces a 1M-scale synthetic dataset at #iccv2023. It allows users to add synthetically generated objects like furniture & humans to an image & is the result of her collaboration with her vislang.ai advisor Vicente Ordóñez. bit.ly/3QIqA1p

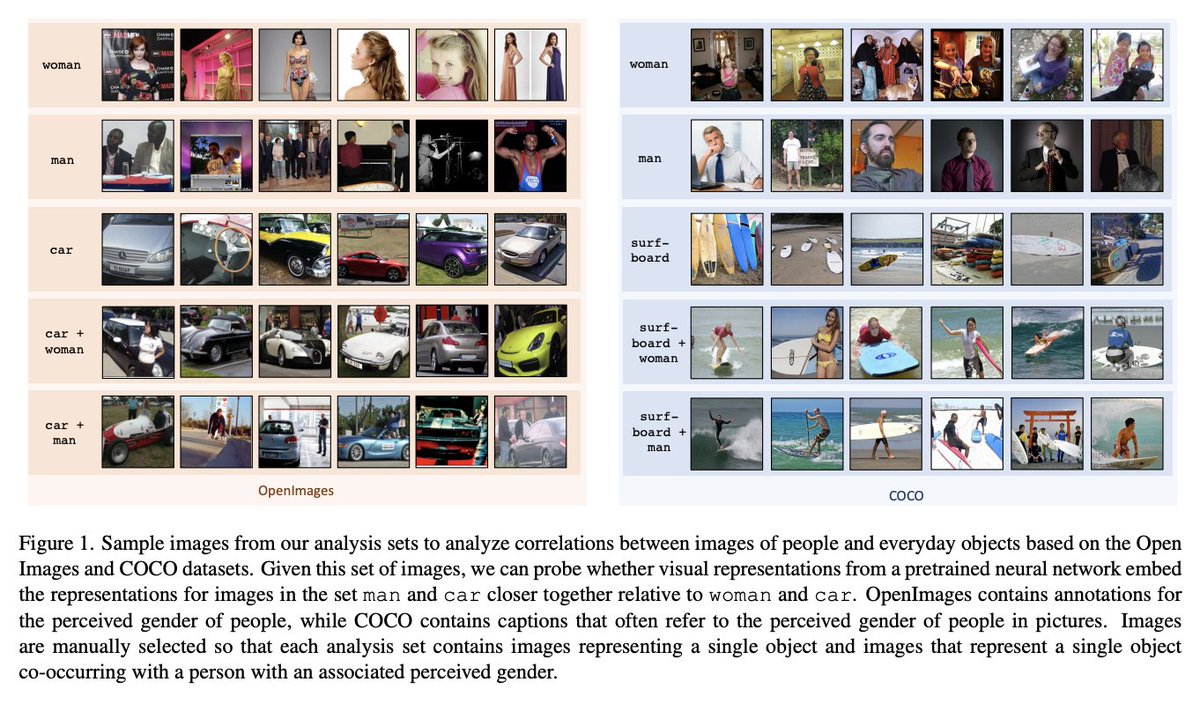

How do biases change before and after finetuning large scale visual recognition models? Our AFME 2024 @ NeurIPS paper incorporates sets of canonical images to highlight changes in biases for an array of off-the-shelf pretrained models. #NeurIPS2023 Link: arxiv.org/abs/2303.07615

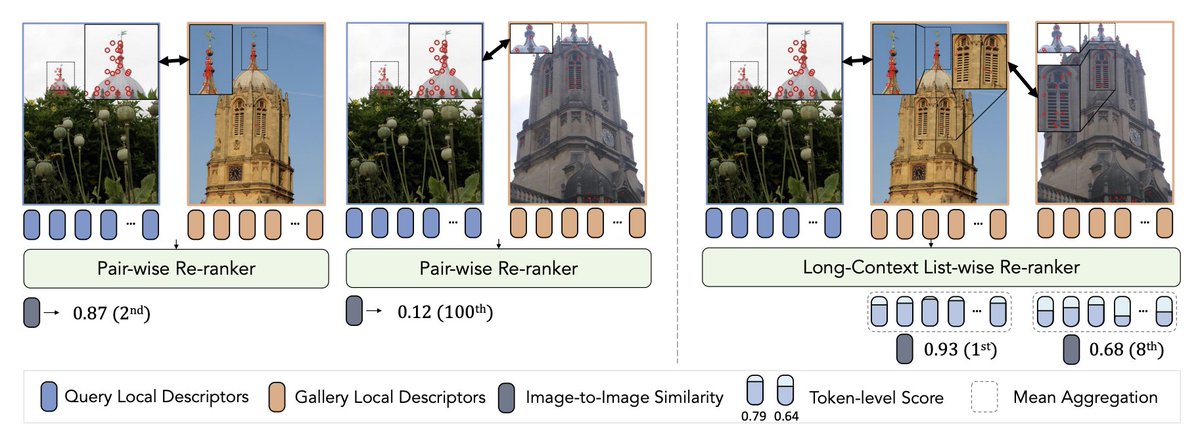

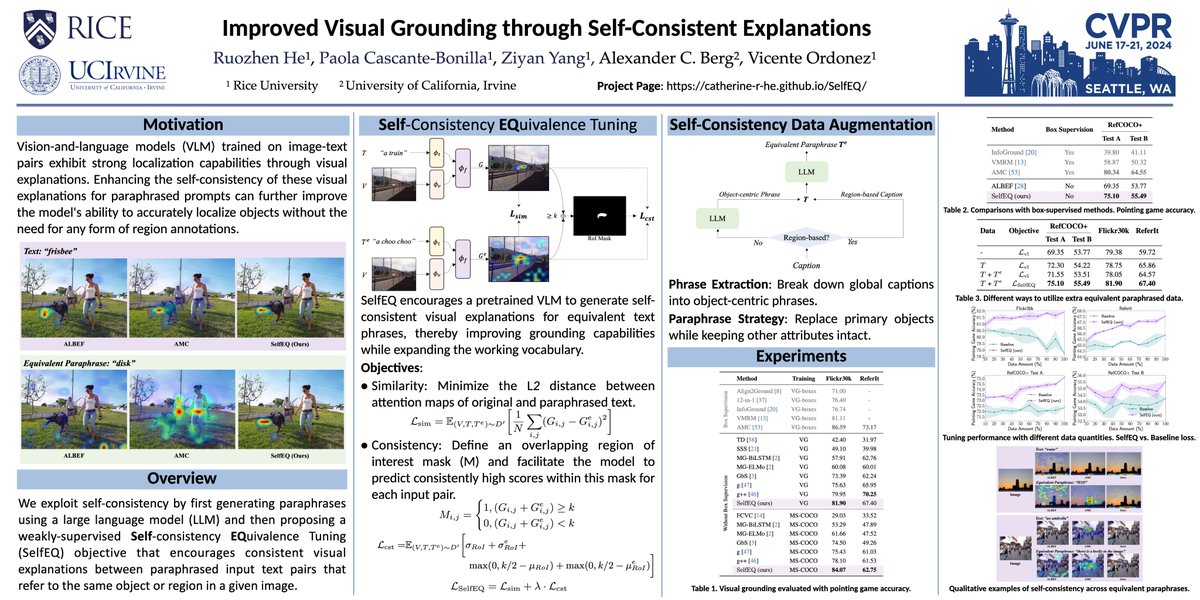

Chatted with Ruozhen Catherine He and Paola Cascante-Bonilla from vislang.ai at Rice University about the paper they had accepted to #CVPR2024. Their paper introduces Self-Consistency Equivalence Tuning (SelfEQ) to improve visual grounding in vision-and-language models using paraphrases. The Problem:

(1/4) Excited to share our latest work at #CVPR2024! 🔥 Join us tomorrow, Thursday, June 20, from 10:30am to noon at Poster Session 3, #334, to learn about "Improved Visual Grounding through Self-Consistent Explanations" with Paola Cascante-Bonilla, Ziyan, Alex Berg, vislang.ai.

Check out the work by Ruozhen Catherine He who is representing our group at #CVPR2024 at the poster session tomorrow morning. Poster #334. #CVPR.

Rice CS' Ruozhen Catherine He presented her paper, Improved Visual Grounding through Self-Consistent Explanations, at #CVPR2024. SelfEQ helps computers ‘see’ more accurately and consistently. She is advised by faculty member Vicente Ordóñez-Román. bit.ly/4dfe9CS vislang.ai

GenAI has struggled to create consistent images, but research from Rice CS' vislang.ai lab could make weird AI images a thing of the past. Moayed Haji Ali and Vicente Ordóñez-Román have developed a way to improve the performance of AI diffusion models. bit.ly/3BcIlQQ

Rice CS welcomes Zhengzhong Tu, Texas A&M assistant professor, next Tuesday, 9/24 at 4pm in Duncan Hall 3076. Dr. Tu will discuss Democratizing Diffusion Models for Controllable & Efficient Computational Imaging. PLEASE RSVP: bit.ly/4eraBh1 Zhengzhong Tu vislang.ai