Dimitris Tsipras

@tsiprasd

How might an online community look after many people join? My paper w/ Lindsay Popowski, @Carryveggies, Meredith Ringel Morris, Percy Liang, and Michael Bernstein introduces "social simulacra": a method of generating compelling social behaviors to prototype social designs 🧵 arxiv.org/abs/2208.04024 #uist2022

You’re deploying an ML system, choosing between two models trained w/ diff algs. Same training data, same acc... how do you differentiate their behavior? ModelDiff (gradientscience.org/modeldiff) lets you compare *any* two learning algs! w/ Harshay Shah, Sam Park, Andrew Ilyas (1/8)

Our #NeurIPS2022 poster on in-context learning will be tomorrow (Thursday) at 4pm! Come talk to Shivam Garg and me at poster #928 🔥

Stable Diffusion can visualize + improve model failure modes! Leveraging our method, we can generate examples of hard subpopulations, which can then be used for targeted data augmentation to improve reliability. Blog: gradientscience.org/failure-direct… Saachi Jain, Hannah Lawrence, A. Moitra

Recent events (ahem) have brought the debate on whether/how to regulate social media back to the forefront. My students Sarah Cen, Andrew Ilyas, and I have been thinking about this for a *while*. Excited to share the first results of our thinking: aipolicy.substack.com/p/socialmedias… (1/3)

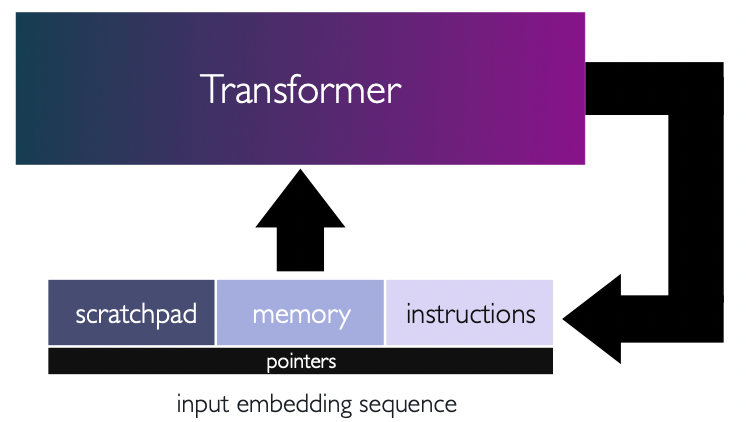

Can transformers follow instructions? We explore this in: "Looped Transformers as Programmable Computers" arxiv.org/abs/2301.13196 led by Angeliki Giannou and Shashank Rajput, in collaboration with Kangwook Lee and Jason Lee. Here is a 🧵

Aleksander Madry testifying at the Congressional subcommittee on AI technology! That's my postdoc advisor!! oversight.house.gov/hearing/advanc…