AI21 Labs

@AI21Labs

AI21 Labs builds Foundation Models and AI Systems for the enterprise that accelerate the use of GenAI in production.

🥂Meet Jamba

https://t.co/xUBjKZHKVH

ID:1166332664569368576

http://www.ai21.com 27-08-2019 12:52:51

259 Tweets

6.2K Followers

90 Following

Ori Goshen, Co-Founder + Co-CEO of AI21 Labs, talks about growth opportunities for the company following a $208 million Series C funding round, and shares his perspective on the future of AI on #NYSEFloorTalk with Judy Khan Shaw.

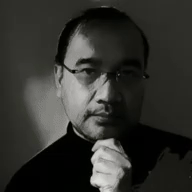

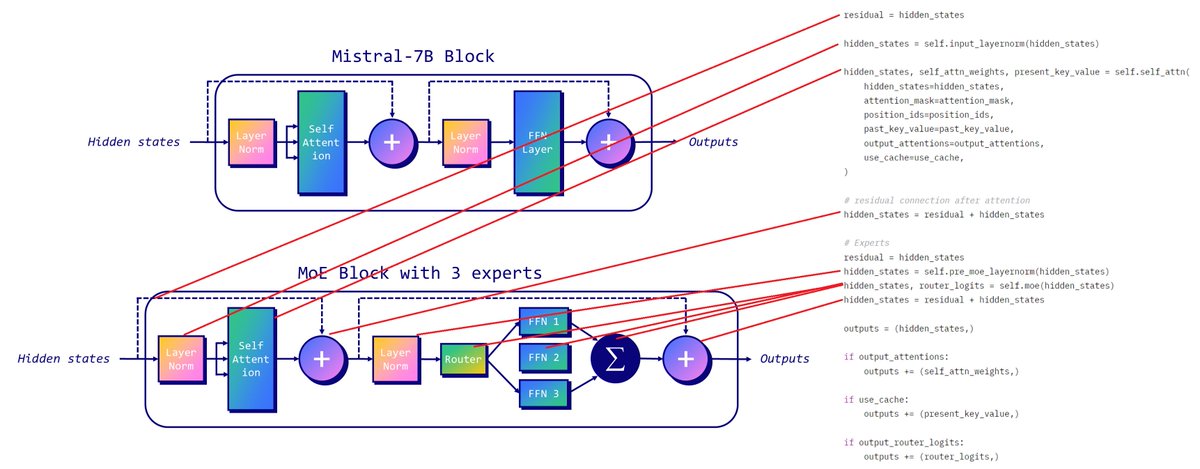

Yesterday AI21 Labs released Jamba, the first production-scale Mamba implementation as a hybrid SSM-Transformer MoE 🐍 And today, you can already finetune it with Hugging Face TRL.

Alexander Doria shared a working QLoRA script using 4-bit quantization on an A100 GPU (for now,…

incredibly impressed by AI21 Labs' Jamba today. This is the first legitimate Mixtral-killer we've seen and it came out of 'nowhere':

buttondown.email/ainews/archive…

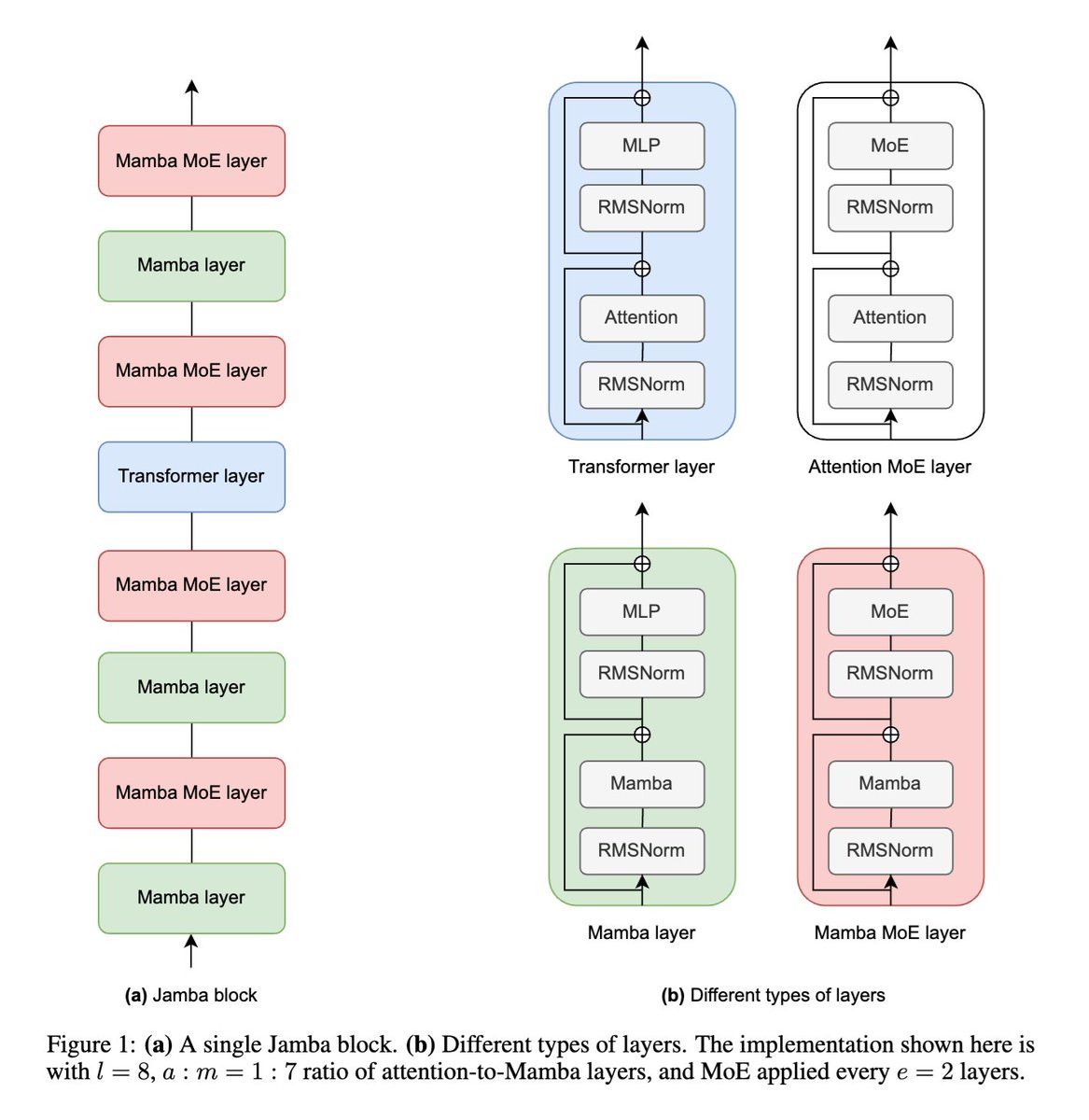

They've helped me redefine my idea of a model 'weight class' from 'number of parameters' (increasingly outdated with MoEs…