Sebastian Raschka

@rasbt

ML/AI researcher & former stats professor turned LLM research engineer. Author of "Build a Large Language Model From Scratch" (amzn.to/4fqvn0D).

ID: 865622395

https://sebastianraschka.com 07-10-2012 02:06:16

17.17K Tweets

326.326K Followers

1.1K Following

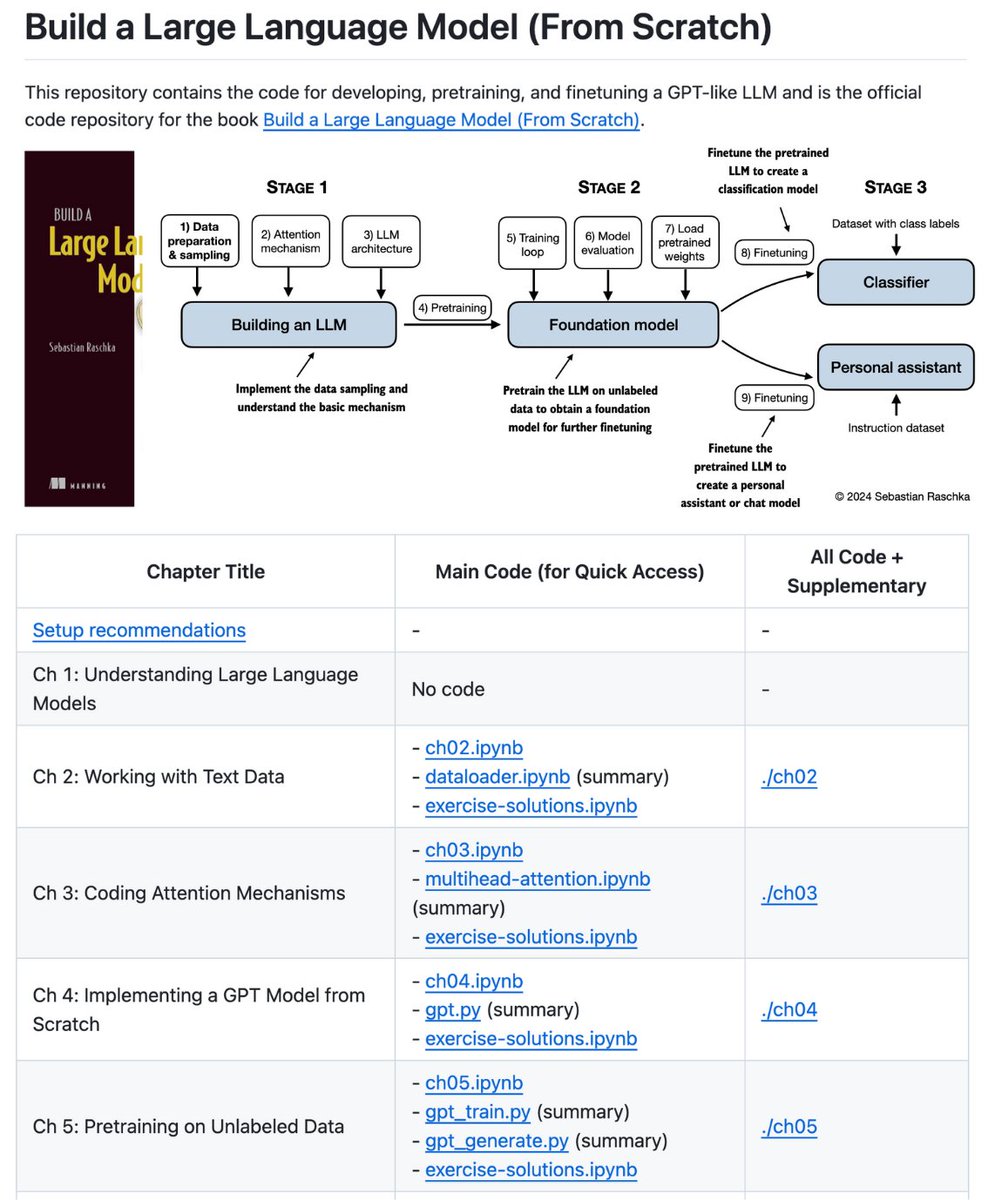

I'm 3 weeks into a book study on Sebastian Raschka's "How to Build an LLM from Scratch", and this book is incredible. The GitHub repo contains Jupyter notebooks, and there are (free!) videos that augment the book. I am super impressed with the quality all around.

learn how to build an LLM from scratch, honestly. Sebastian Raschka's repo is really a gem. it has notebooks with diagrams and explanations that will teach you 100% of:

- attention mechanism
- implementing a GPT model
- pretraining and fine-tuning

my top recommendation for studying LLMs.