Sebastian Raschka

@rasbt

Machine learning & AI researcher writing at https://t.co/A0tXWzG1p5. LLM research engineer @LightningAI. Previously stats professor at UW-Madison.

ID:865622395

https://sebastianraschka.com/books/

Joined: 07-10-2012 02:06:16

15.5K Tweets

266.6K Followers

906 Following

Andrew Gordon Wilson A long time ago!

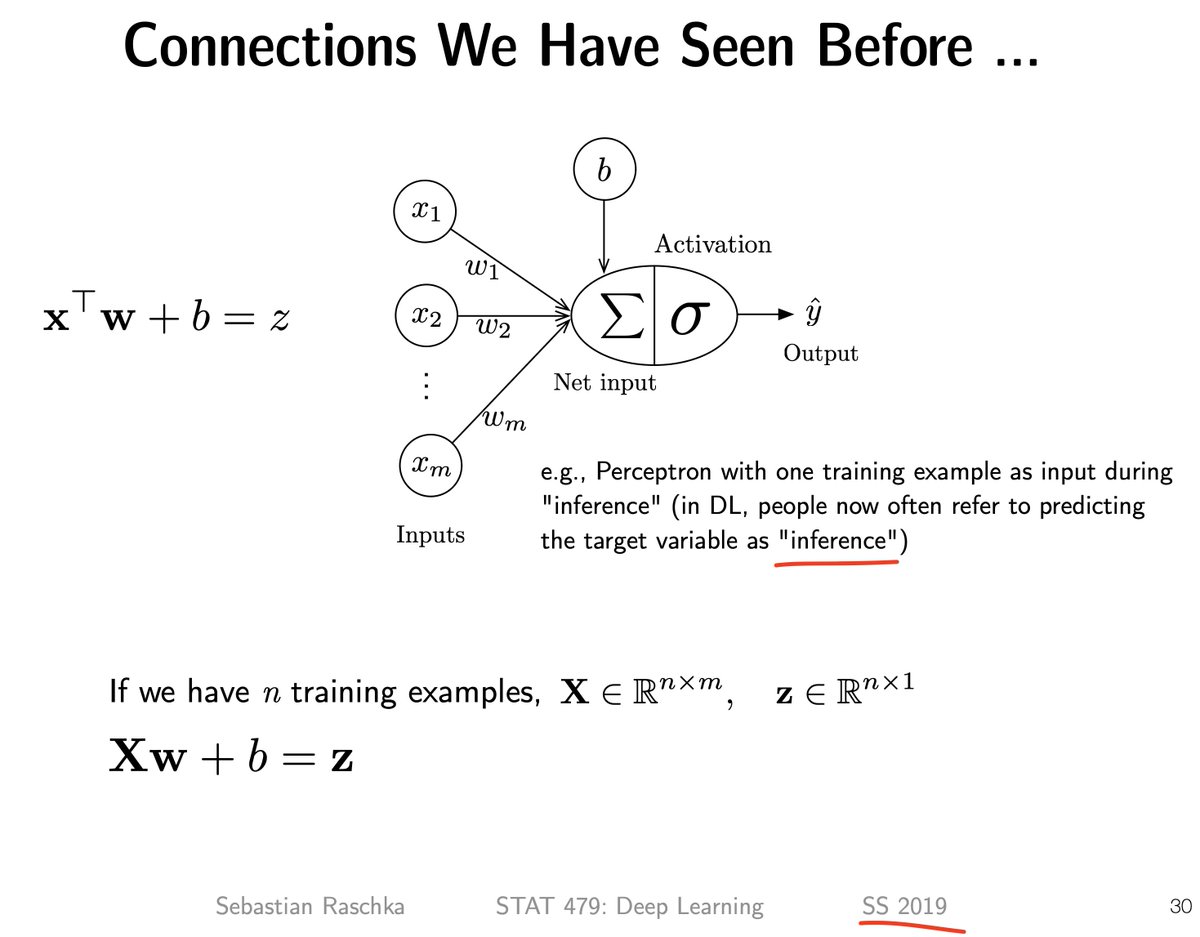

I was faculty in a statistics department and always had to clarify to students and colleagues that in deep learning we use the term 'inference' differently, haha.

From my lecture notes: github.com/rasbt/stat479-…

biased estimator Unsloth AI VRAM consumption for finetuning will not be nice. Interestingly, QKV is also slower when fused. A fused MLP might be only a teeny bit faster, but that's unnoticeable since the matrices are large. twitter.com/rasbt/status/1…

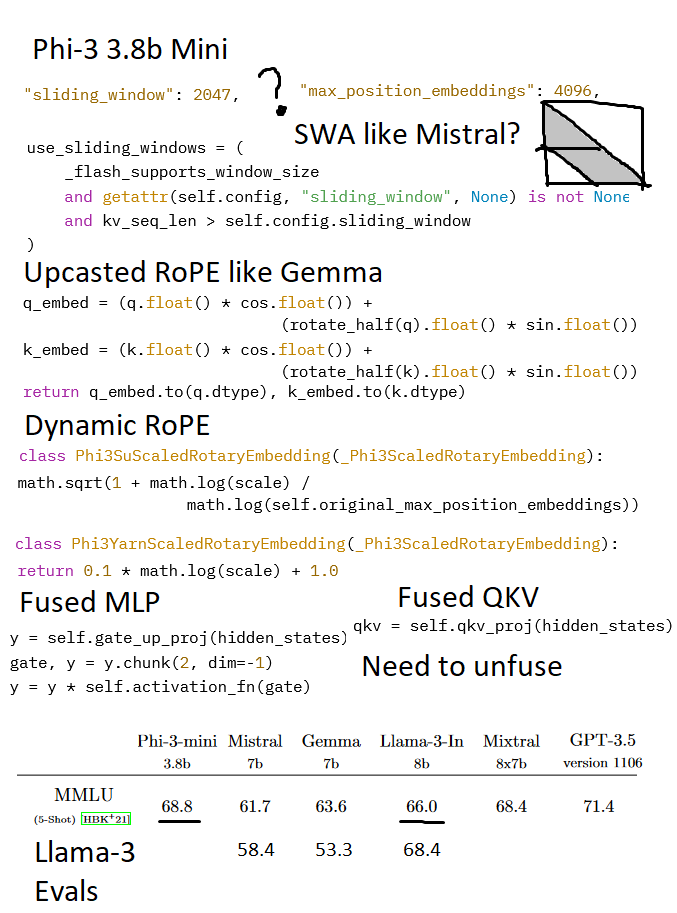

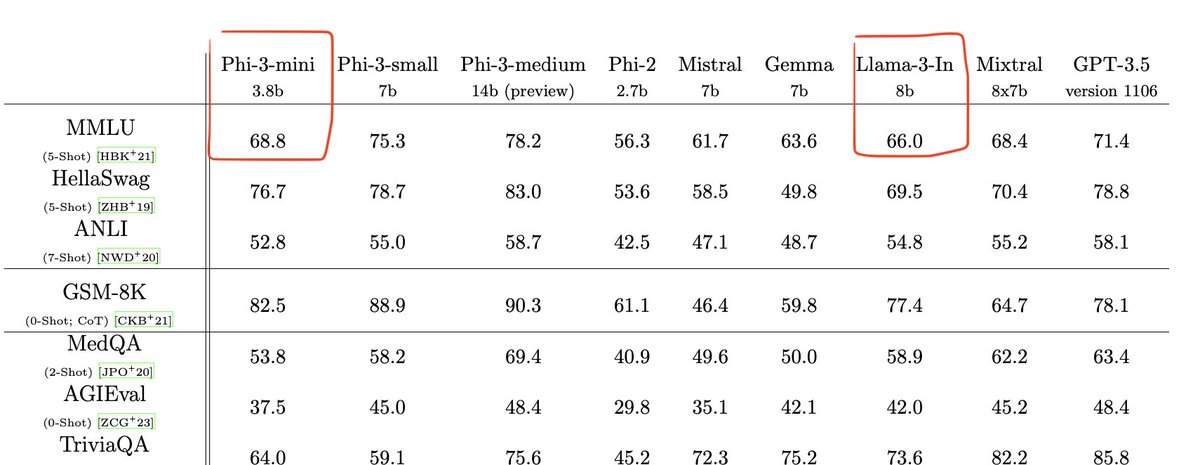

Phi 3 (3.8B) got released! The paper said it was just a Llama arch, but I found some quirks while adding this to Unsloth AI:

1. Sliding window of 2047? Mistral v1 4096. So does Phi mini have SWA? (And odd num?) Max RoPE position is 4096?

2. Upcasted RoPE? Like Gemma?

3. Dynamic…