Akarsh Kumar

@akarshkumar0101

PhD Student @MIT_CSAIL

RS Intern @SakanaAILabs

RL, Meta-Learning, Emergence, Open-Endedness, ALife

ID: 965065559619534853

https://akarshkumar.com/ 18-02-2018 03:28:54

332 Tweets

1.1K Followers

887 Following

With gratitude to my phenomenal coauthors: Akarsh Kumar, Jeff Clune, and Joel Lehman Paper: arxiv.org/abs/2505.11581 Github: github.com/akarshkumar010…

Akarsh Kumar Great work 👏. “There are reasons to believe that current models are still suffering from FER, especially at the frontiers of knowledge where there is less data.” Even if the 10T parameters handle the issue, where do we go from there sounds like a solid foundation to invest

Kenneth Stanley Akarsh Kumar Jeff Clune Joel Lehman Congrats guys! Another excellent work ✨

Kenneth Stanley And they say academia is dead! Nice work!

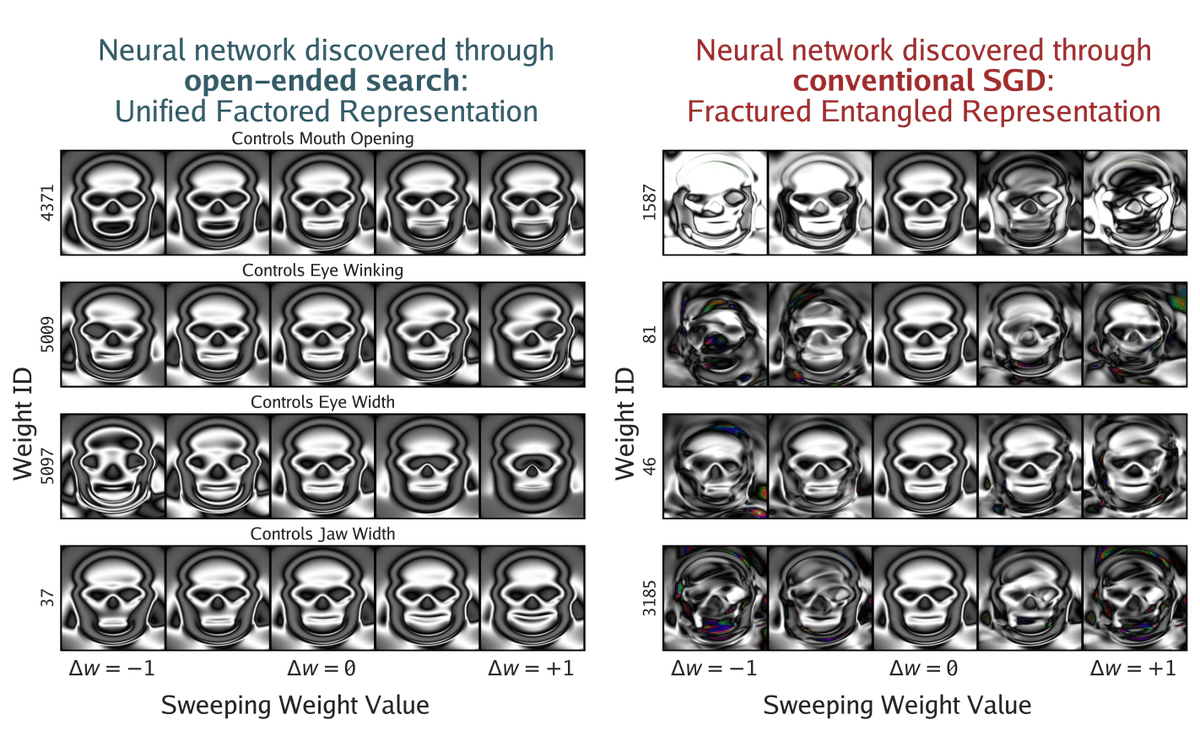

"Why can't my trained model generalize?" Maybe b/c its internal representation of the task is whack. Beautiful new paper from Jeff Clune's team. ~thread~ arxiv.org/abs/2505.11581

John Bohannon Akarsh Kumar Are you calling my brain FERry? I resemble that remark! re: 1(trillion) dollar question: we're working on it! We don't know the answer, but I strongly believe it will involve lots of principles from open-endedness (some known, some yet to be discovered). 😊🌱🔬🧪

attentionmech People underestimate evolutionary algorithms. Great things happen when evolution meta-optimizes us to be better at evolving. But a naive one-to-one gene-to-function mapping, as is usually done in many implementations, is not sufficient for this to arise

Revisiting Louis Kirsch et al.’s general-purpose ICL by meta-learning paper; I forgot how great it is. It's rare to be taken along on the authors' journey to understand the phenomenon they document like this. More toy dataset papers should follow this structure.

oimo.io/works/life/ Incredible website by saharan / さはら visualizing Conway's Game of Life inside of Game of Life inside Game of Life ... and so on ... forever... Reminds me of the hierarchy of emergent structures in our world from physics to chemistry to biology. How many levels