Marc Sun

@_marcsun

Machine Learning Engineer @huggingface Open Source team

ID: 1623720711591174146

09-02-2023 16:29:25

458 Tweets

1.1K Followers

421 Following

The latest mlx-lm has a new dynamic quantization method (made with Angelos Katharopoulos). It consistently results in better model quality with no increase in size. Some perplexity results (lower is better) for a few Qwen3 base models:

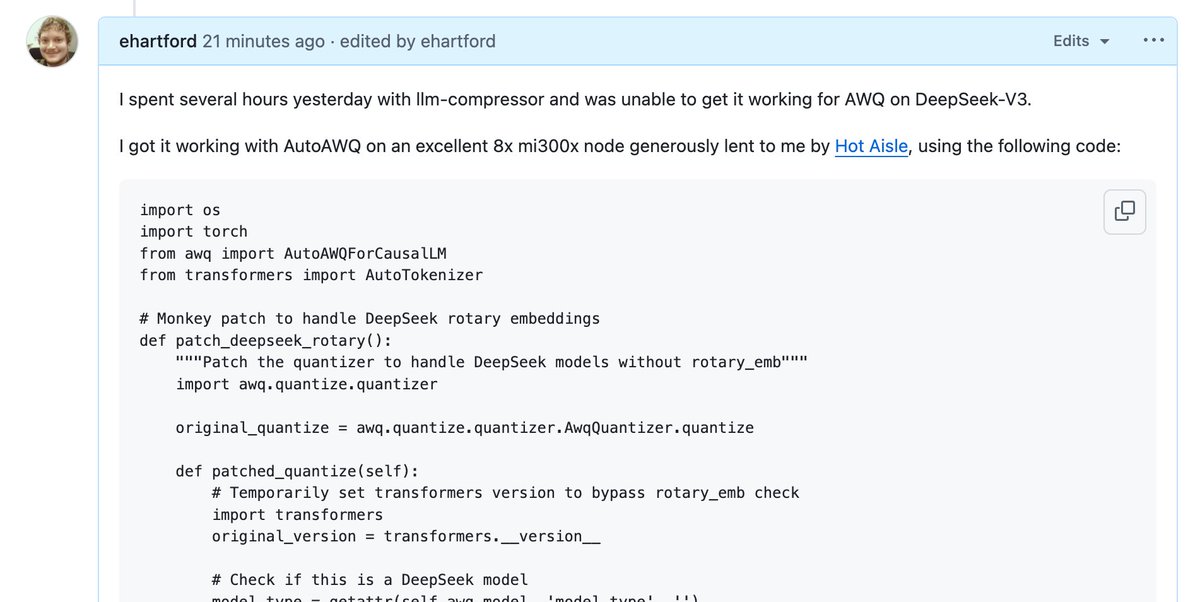
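For readers unfamiliar with the metric, here is a minimal sketch of how perplexity is conventionally computed from per-token log-probabilities: the exponential of the mean negative log-likelihood. The function name and the sample values are hypothetical and chosen for illustration only; they are not mlx-lm's API and not the results shown in the image.

```python
import math


def perplexity(token_log_probs):
    """Return exp(mean negative log-likelihood) for natural-log token probabilities."""
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)


# Hypothetical log-probs: a model that assigns each token probability 0.25
# versus one that assigns each token probability 0.5.
baseline = [math.log(0.25)] * 4
improved = [math.log(0.5)] * 4

ppl_baseline = perplexity(baseline)  # approximately 4
ppl_improved = perplexity(improved)  # approximately 2
```

A model that is more confident in the correct tokens gets a lower perplexity, which is why lower is better when comparing quantization methods at the same model size.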

LLM Compressor just got way easier to use. You can now compress most LLMs directly from their Hugging Face model definition. No need to write custom wrappers. This new autowrapper supports 95% of multimodal and decoder models out of the box. Let’s break it down 🧵:

#PyTorch Distributed Checkpointing now supports Hugging Face safetensors—making it easier to save/load checkpoints across ecosystems. New APIs let you read/write safetensors via fsspec paths. First adopter: torchtune, with a smoother checkpointing flow. 📚 Learn more:
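To make the file format concrete, the sketch below writes and reads a tiny safetensors file using only the Python standard library. It assumes the publicly documented layout (an 8-byte little-endian header length, a JSON header with `dtype`, `shape`, and `data_offsets` per tensor, then the raw bytes) and handles only `F32` data. The helper names are hypothetical, and this is not the PyTorch Distributed Checkpointing API, whose function names the post does not give.

```python
import json
import os
import struct
import tempfile


def write_safetensors(path, tensors):
    """Write {name: (shape, flat list of floats)} as little-endian F32 tensors."""
    header, chunks, offset = {}, [], 0
    for name, (shape, values) in tensors.items():
        raw = struct.pack(f"<{len(values)}f", *values)
        header[name] = {
            "dtype": "F32",
            "shape": list(shape),
            # Offsets are relative to the start of the data section.
            "data_offsets": [offset, offset + len(raw)],
        }
        chunks.append(raw)
        offset += len(raw)
    header_bytes = json.dumps(header, separators=(",", ":")).encode("utf-8")
    with open(path, "wb") as f:
        f.write(struct.pack("<Q", len(header_bytes)))  # 8-byte header length
        f.write(header_bytes)
        for raw in chunks:
            f.write(raw)


def read_safetensors(path):
    """Read back the F32 tensors as {name: (shape, flat list of floats)}."""
    with open(path, "rb") as f:
        (header_len,) = struct.unpack("<Q", f.read(8))
        header = json.loads(f.read(header_len))
        data = f.read()
    result = {}
    for name, info in header.items():
        if name == "__metadata__":  # optional free-form metadata entry
            continue
        begin, end = info["data_offsets"]
        count = (end - begin) // 4  # 4 bytes per F32 value
        result[name] = (info["shape"], list(struct.unpack(f"<{count}f", data[begin:end])))
    return result


# Round-trip a hypothetical 2x2 weight through a temporary file.
with tempfile.TemporaryDirectory() as tmp:
    demo_path = os.path.join(tmp, "demo.safetensors")
    write_safetensors(demo_path, {"w": ((2, 2), [1.0, 2.0, 3.0, 4.0])})
    restored = read_safetensors(demo_path)
```

Because the header is plain JSON and the payload is raw bytes with no executable content, any framework can parse the file without running code from it, which is part of what makes the format convenient for sharing checkpoints across ecosystems.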