Tirthankar Ghosal

@tirthankarslg

Scientist @ORNL #NLProc #LLMs #peerreview #SDProc Editor @SIGIRForum Org. #AutoMin2023 @SDProc @wiesp_nlp AC @IJCAIconf @emnlpmeeting Prevly @ufal_cuni @IITPAT

ID: 817603403677253633

https://member.acm.org/~tghosal 07-01-2017 05:26:56

3.3K Tweets

556 Followers

1.1K Following

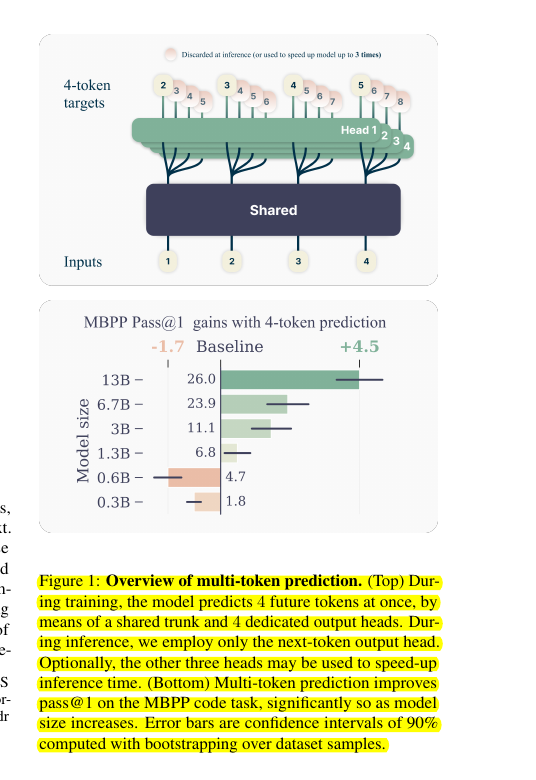

The "Multi-token Prediction" paper (April 2024) from AI at Meta, the work behind the Chameleon family of models, is such an innovative idea. 👨‍🔧 Original problem it solves: most LLMs have a simple training objective, predicting the next word. While this approach is simple and scalable,

📝 Check out the proceedings: aclanthology.org/volumes/2024.s… 🔗 Get all the details about the program: sdproc.org/2024/program.h… 🙌 This workshop is organized by Tirthankar Ghosal, Philipp Mayr, Anita de Waard, Aakanksha Naik ✈️ NAACL 2024, Amanpreet Singh, Orion Weller, Shannon Shen, Yanxia Qin, Yoonjoo Lee

Absolute banger of a blogpost: Omar Khattab's "project-first" research mindset distilled into one succinct post.