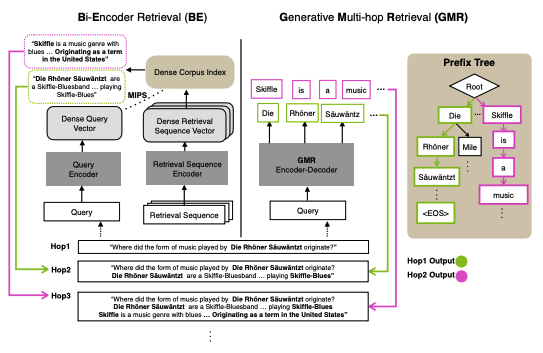

Where does generative retrieval have a significant advantage over bi-encoder retrieval? Our #EMNLP2022 paper 'Generative Multi-hop Retrieval' shows that the answer is 🦘multi-hop🦘 retrieval! (2.5x higher score when the # of hops is large, and the oracle # of hops is unknown) 1/9

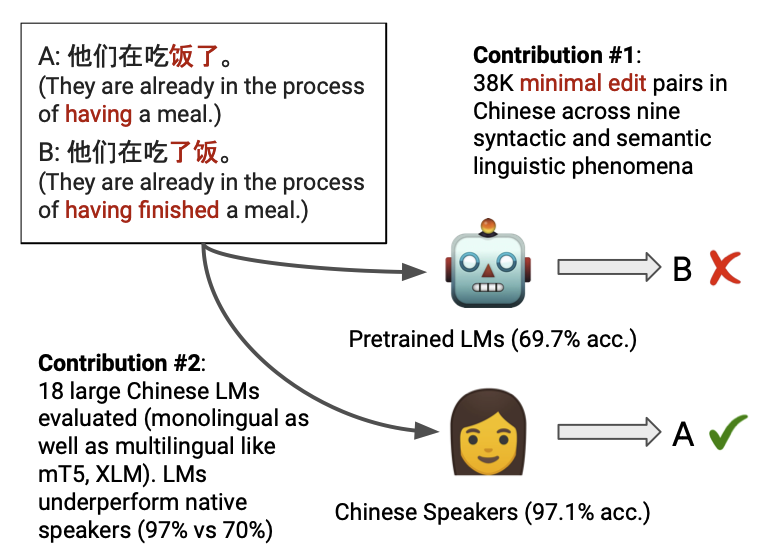

How much Chinese linguistic knowledge do large pretrained language models encode? SLING (to appear at #EMNLP2022) investigates this question and presents a high-quality dataset with 38K minimal pairs covering 9 Chinese linguistic phenomena. (1/6)

📄Paper: arxiv.org/abs/2210.11689

👏Happy to announce that our paper 'HashFormers: Towards Vocabulary-independent Pre-trained Transformers' got accepted at #EMNLP2022. All thanks to my favourite supervisor Nikos Aletras🙏🍺

Paper👀👇: arxiv.org/abs/2210.07904.

[1/k]

![Huiyin Xue (@HuiyinXue) on Twitter photo 2022-10-17 09:56:42](https://pbs.twimg.com/media/FfQ0sEdWYAATlsf.png)

Mind-blowing paper I came across at #EMNLP2022. I highly recommend it. The idea is so cool that I couldn't help checking out the code right after chatting with the cool author Oren Sultan!

#EMNLP2022 livetweet

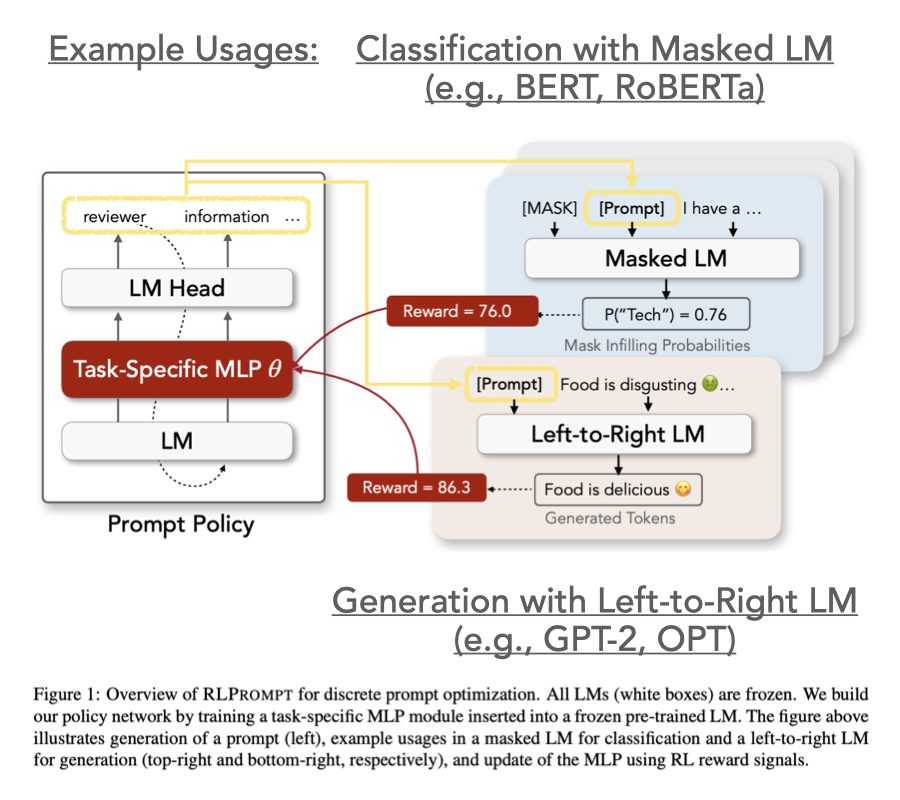

While I'm not at #EMNLP2022, we have two papers at the intersection of RL + NLP.

RLPrompt: Optimizing Discrete Text Prompts with Reinforcement Learning

(arxiv.org/abs/2205.12548)

Efficient (Soft) Q-Learning for Text Generation with Limited Good Data

(arxiv.org/abs/2106.07704)

#EMNLP2022 RLPrompt uses Reinforcement Learning to optimize *discrete* prompts for any LM

w/ many nice properties:

* prompts transfer trivially across LMs

* gradient-free for LM

* strong improvements over manual/soft prompts

Paper arxiv.org/abs/2205.12548

Code github.com/mingkaid/rl-pr…

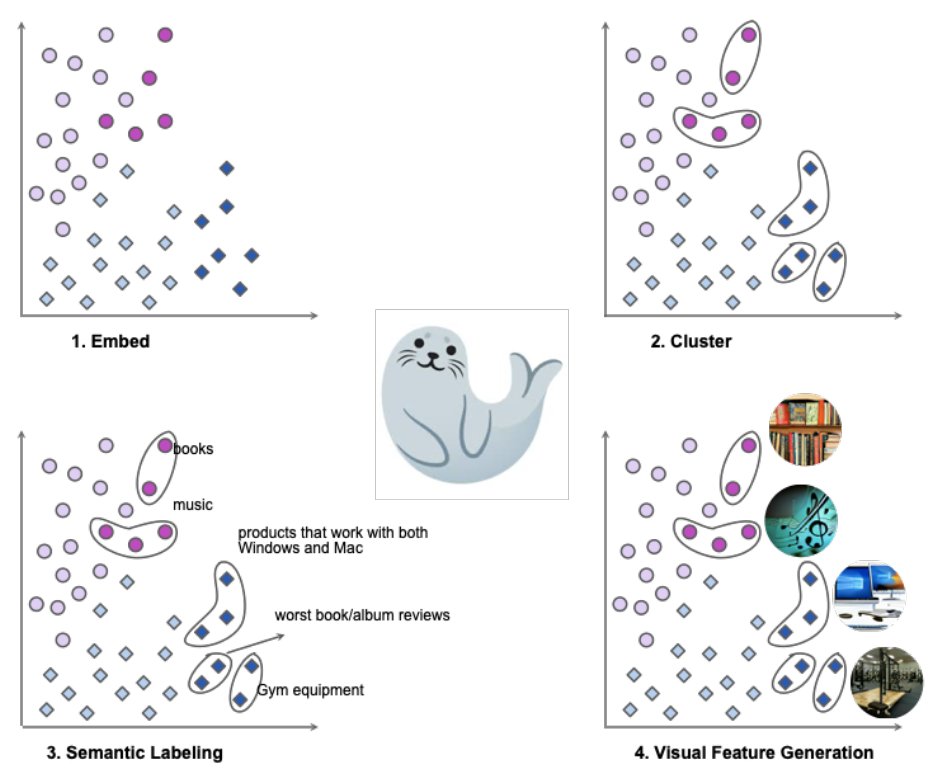

Our paper on Systematic Error Analysis and Labeling (SEAL) 🦭 has been accepted to the EMNLP demo track 🎉

Problem: How can we help users find systematic bugs in their models?

E.g., an image classification model on low-light images, or a sentiment classifier on gym reviews

#emnlp2022

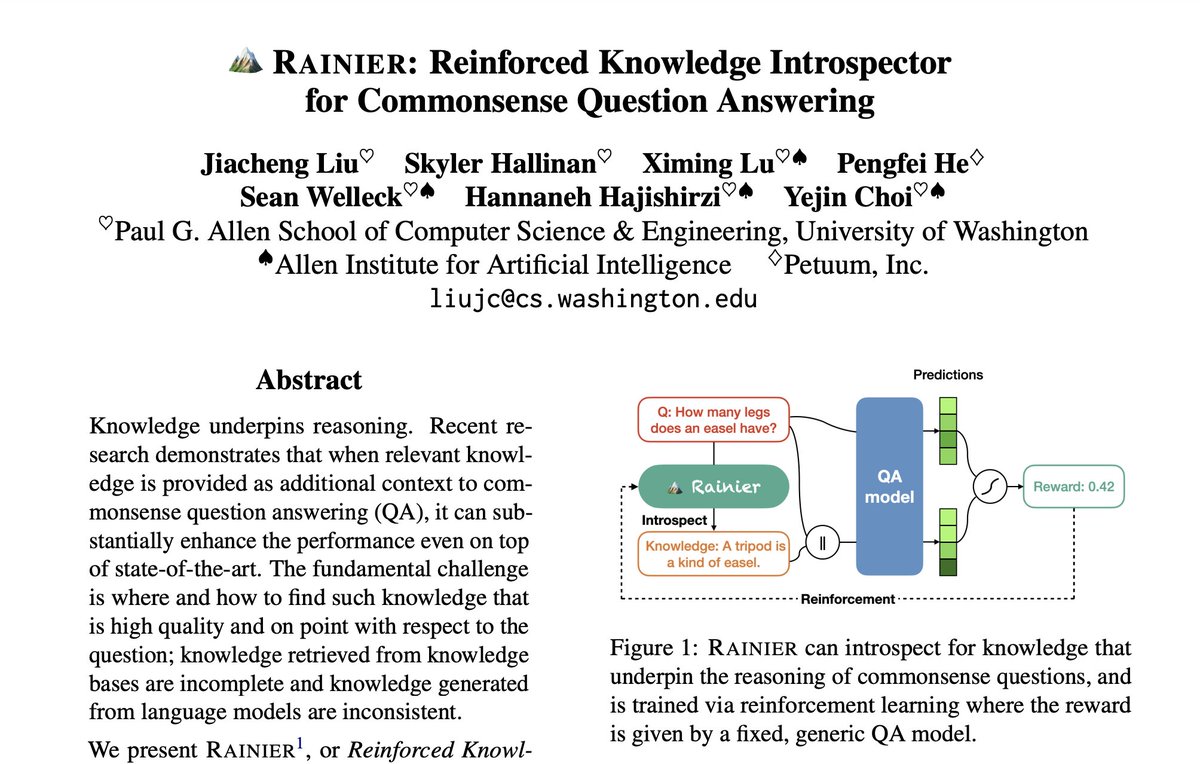

Can LMs introspect the commonsense knowledge that underpins the reasoning of QA?

In our #EMNLP2022 paper, we show that relatively small models (<< GPT-3), trained with RLMF (RL with Model Feedback), can generate natural language knowledge that bridges reasoning gaps. ⚛️

(1/n)

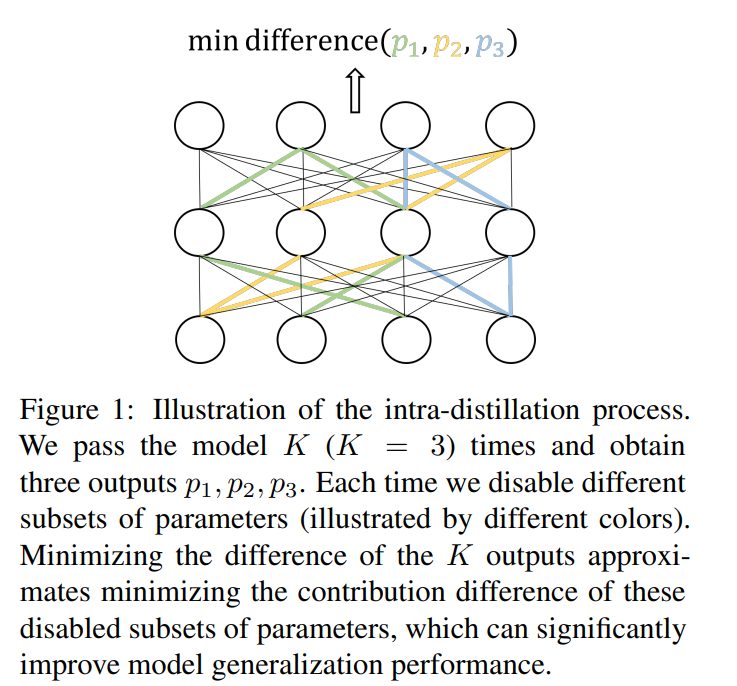

How easy is it to get big gains for your model? Just pass the input through the model multiple times and minimize the difference between the outputs! The secret is a more balanced parameter contribution. Check out our #EMNLP2022 paper 'The Importance of Being Parameters: An Intra-Distillation Method for Serious Gains'!
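
The multi-pass idea in the tweet above can be pictured with a toy sketch. Everything here is an assumption made for illustration: the linear "model", the dropout-style masking, the function names, and the plain variance penalty, which stands in for whatever objective the paper actually uses.

```python
import random


def forward(weights, x, keep_prob, rng):
    """One stochastic pass of a toy linear model.

    Each weight is kept with probability keep_prob, so different passes
    use different subsets of the parameters.
    """
    total = 0.0
    for w, xi in zip(weights, x):
        if rng.random() < keep_prob:
            total += (w / keep_prob) * xi  # inverted-dropout rescaling
    return total


def consistency_penalty(outputs):
    """Mean squared deviation of each pass from the average over passes."""
    mean = sum(outputs) / len(outputs)
    return sum((o - mean) ** 2 for o in outputs) / len(outputs)


def multi_pass_loss(weights, x, target, passes=3, keep_prob=0.9, alpha=1.0, seed=0):
    """Task loss averaged over several passes plus a penalty on their disagreement."""
    rng = random.Random(seed)
    outputs = [forward(weights, x, keep_prob, rng) for _ in range(passes)]
    task_loss = sum((o - target) ** 2 for o in outputs) / passes
    return task_loss + alpha * consistency_penalty(outputs)
```

With `keep_prob=1.0` every pass uses all parameters, the passes agree exactly, and the penalty term vanishes; lowering `keep_prob` makes the passes disagree, which is the difference the extra term pushes down.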

Finally, I met Yejin Choi at the conference! Thank you for accepting the photo request. I met her at the Ritz-Carlton with a view of the beautiful mosque last night. I was so honored that I couldn't sleep! As a Korean, I am proud of her! Yejin Choi #EMNLP2022

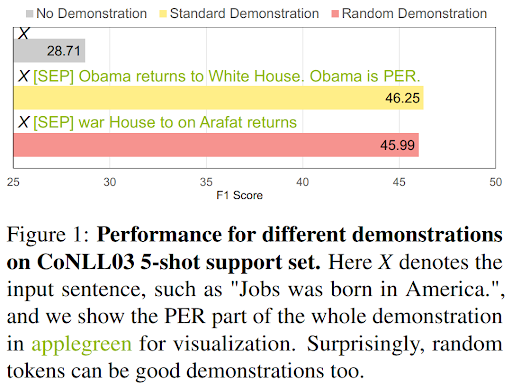

Demonstrations composed of RANDOM tokens can still work? YES!

In our #EMNLP2022 paper (w/ Yanzhe Zhang, Diyi Yang, Ruiyi Zhang), we design pathological demonstrations to investigate “Robustness of Demonstration-based Learning Under Limited Data Scenario” arxiv.org/abs/2210.10693

🧵Language models have an unfortunate tendency to contradict themselves.

Our #emnlp2022 oral presents Consistency Correction w/ Relation Detection (ConCoRD), which overrides low-confidence LM predictions to boost self-consistency & accuracy.

Paper/code: ericmitchell.ai/emnlp-2022-con…

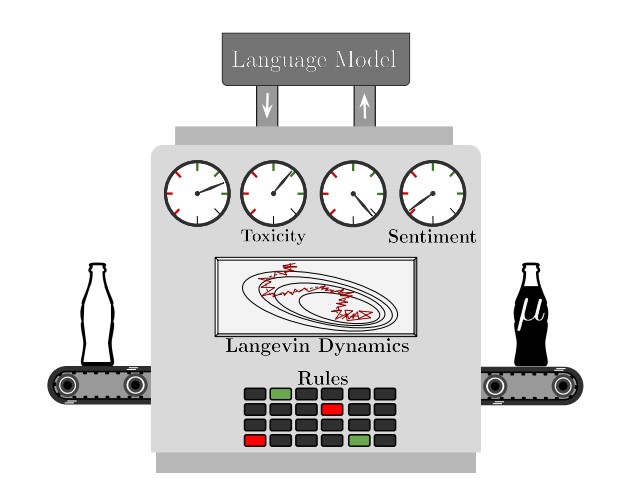

Super excited to introduce our #EMNLP2022 paper!

MuCoLa: Gradient-based Constrained Sampling from Language Models.

With Biswajit Paria and Yulia Tsvetkov (tsvetshop)

arxiv.org/abs/2205.12558 (1/7)