Shruti Singh @ ACL 2024

@shruti_rsingh

Representation Learning for Scientific Literature | #NLProc | Fulbright fellow @yale | CS Ph.D. Student @iitgn | Past @daiictofficial

ID: 2722176900

http://shruti-singh.github.io

10-08-2014 18:26:56

194 Tweets

247 Followers

1.1K Following

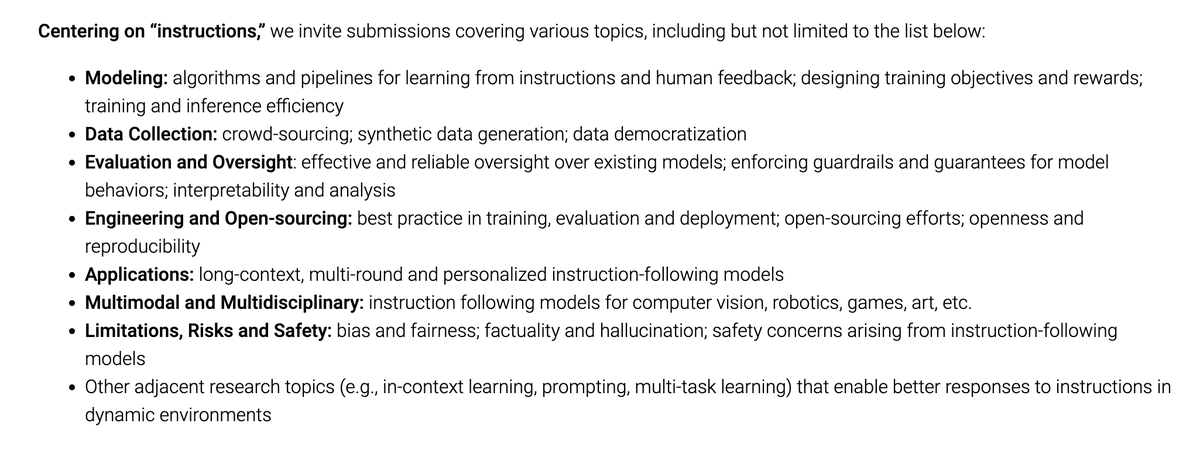

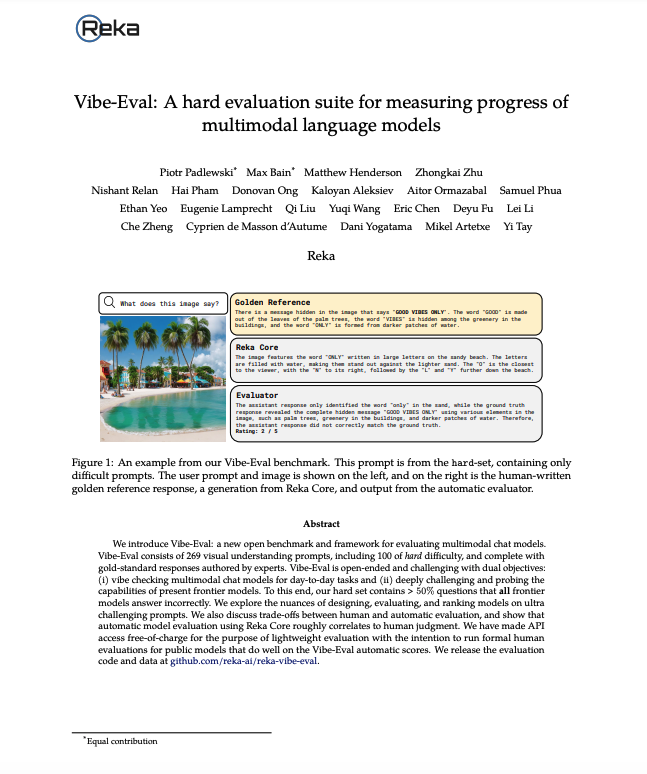

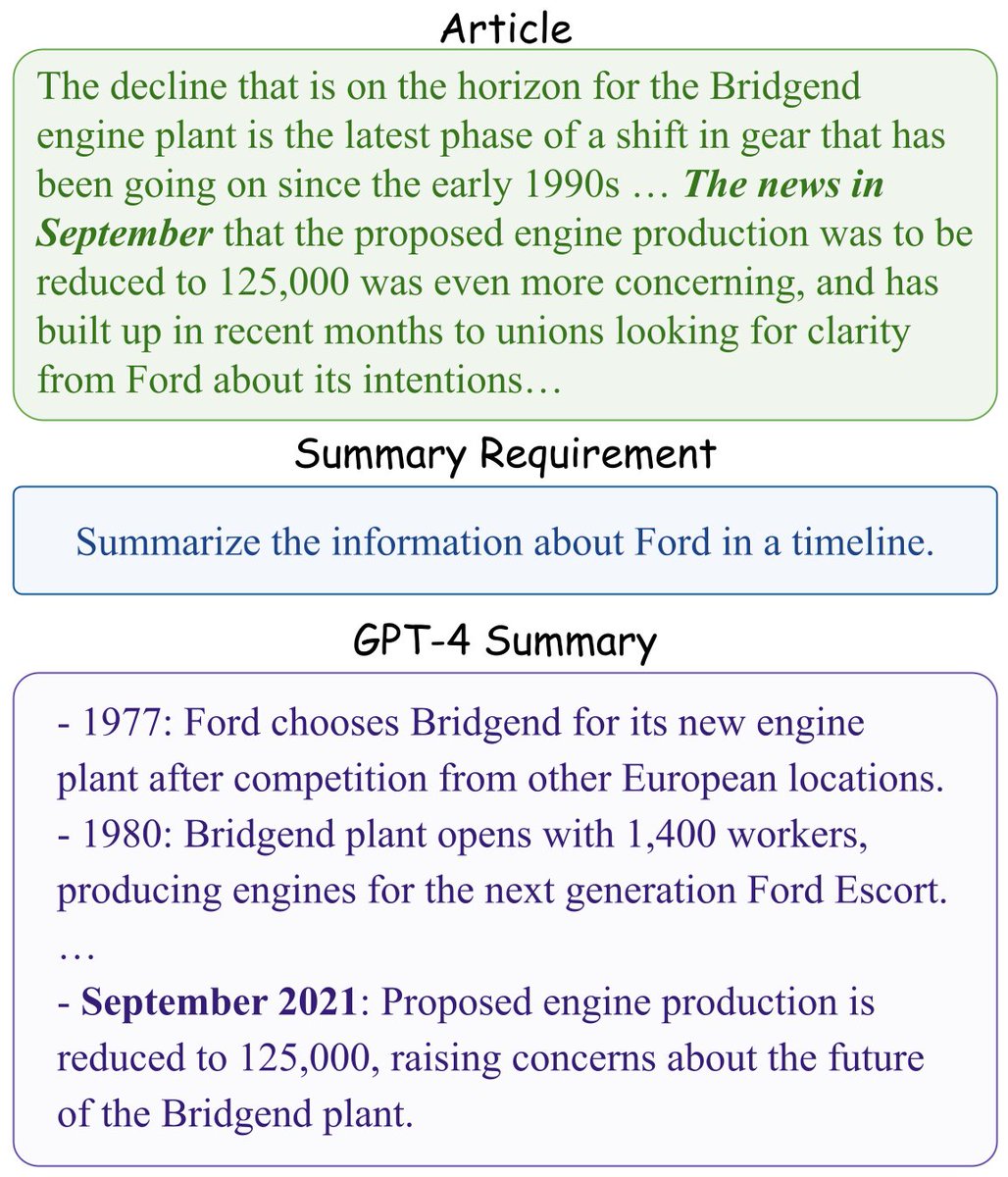

Excited to share our work on "Benchmarking Generation and Evaluation Capabilities of LLMs for Instruction Controllable Summarization"! Since LLMs already excel at generic summarization, we need to explore more complex task settings.🧵 arxiv.org/abs/2311.09184 Equal contribution with Alex Fabbri

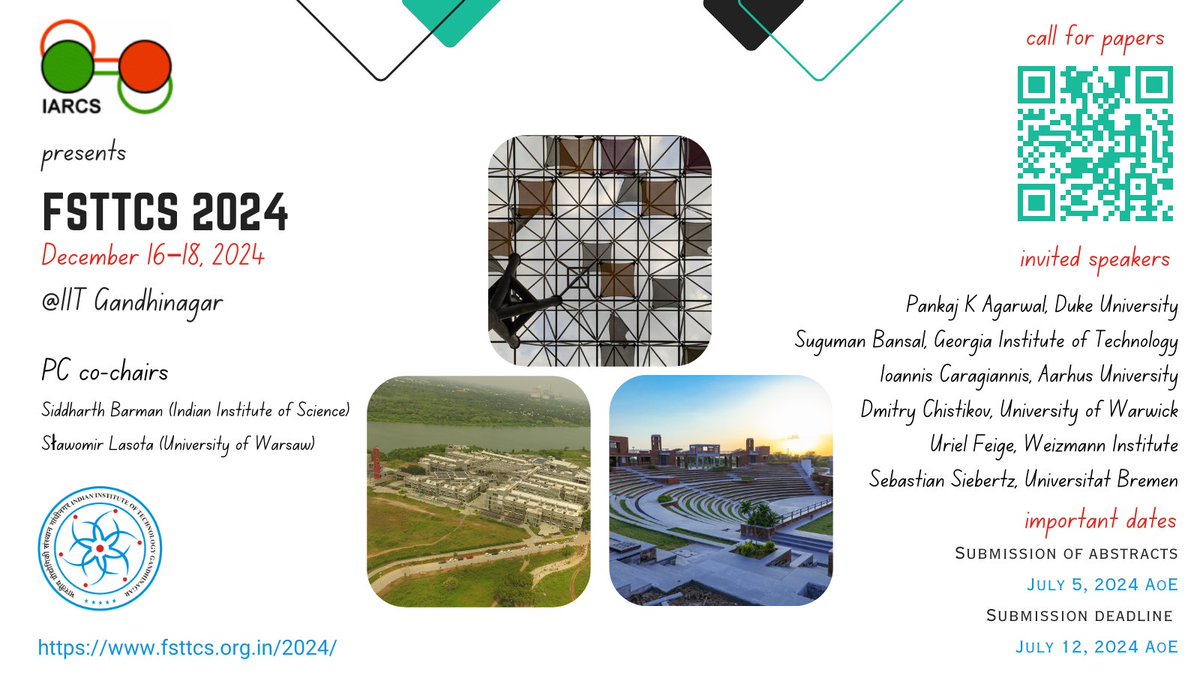

#GandhiPedia is being inaugurated today. A great moment for us to celebrate the technology and the collaborative efforts of a diverse set of experts. A huge shout-out to the IIT Kharagpur🇮🇳, IIT Gandhinagar, and National Council of Science Museums (NCSM) teams.

🌟 Join Lingo IITGN as a PhD student! 🌟 Dive into cutting-edge NLP research, including Multilingual & Multimodal LLMs and SLMs. 💻 Access SOTA computing & GPUs 🔥 Strong funding & industry connections 📈 Proven publication record 🚀 Shape impactful products with us! #NLP #LLM