Sayash Kapoor

@sayashk

CS PhD candidate @PrincetonCITP and senior fellow at @Mozilla. I tweet about agents, evaluation, reproducibility, AI for science. Book: aisnakeoil.com

ID: 3084274082

http://cs.princeton.edu/~sayashk

15-03-2015 09:03:24

1.1K Tweets

9.9K Followers

1.1K Following

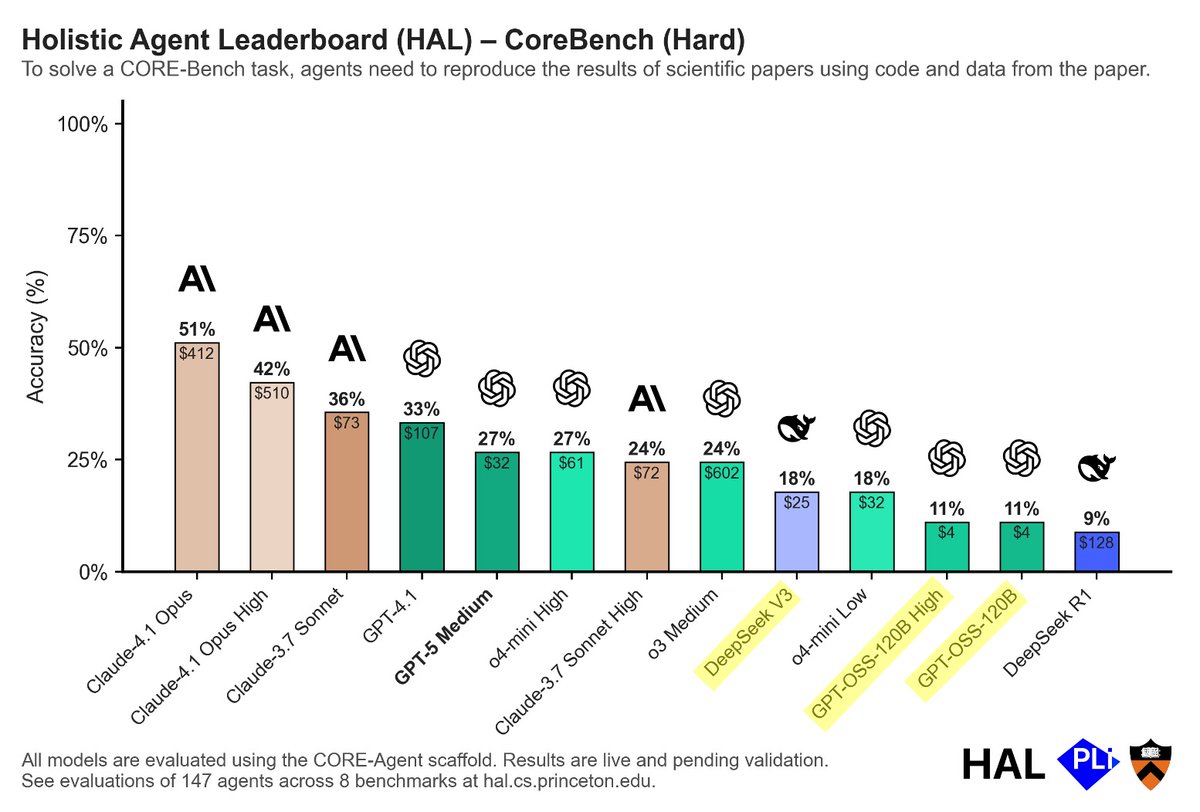

Joshua Achiam Nah, that's not what's happening. If it were, I'd be one of the people most excited about recent changes. My long tail of things that models can't do for me hasn't really decreased much in size in recent months.