Royi Rassin

@royirassin

PhD candidate @biunlp researching multimodality. Intern @GoogleAI

ID: 984846057409572869

https://royi-rassin.netlify.app/ 13-04-2018 17:29:32

361 Tweets

334 Followers

274 Following

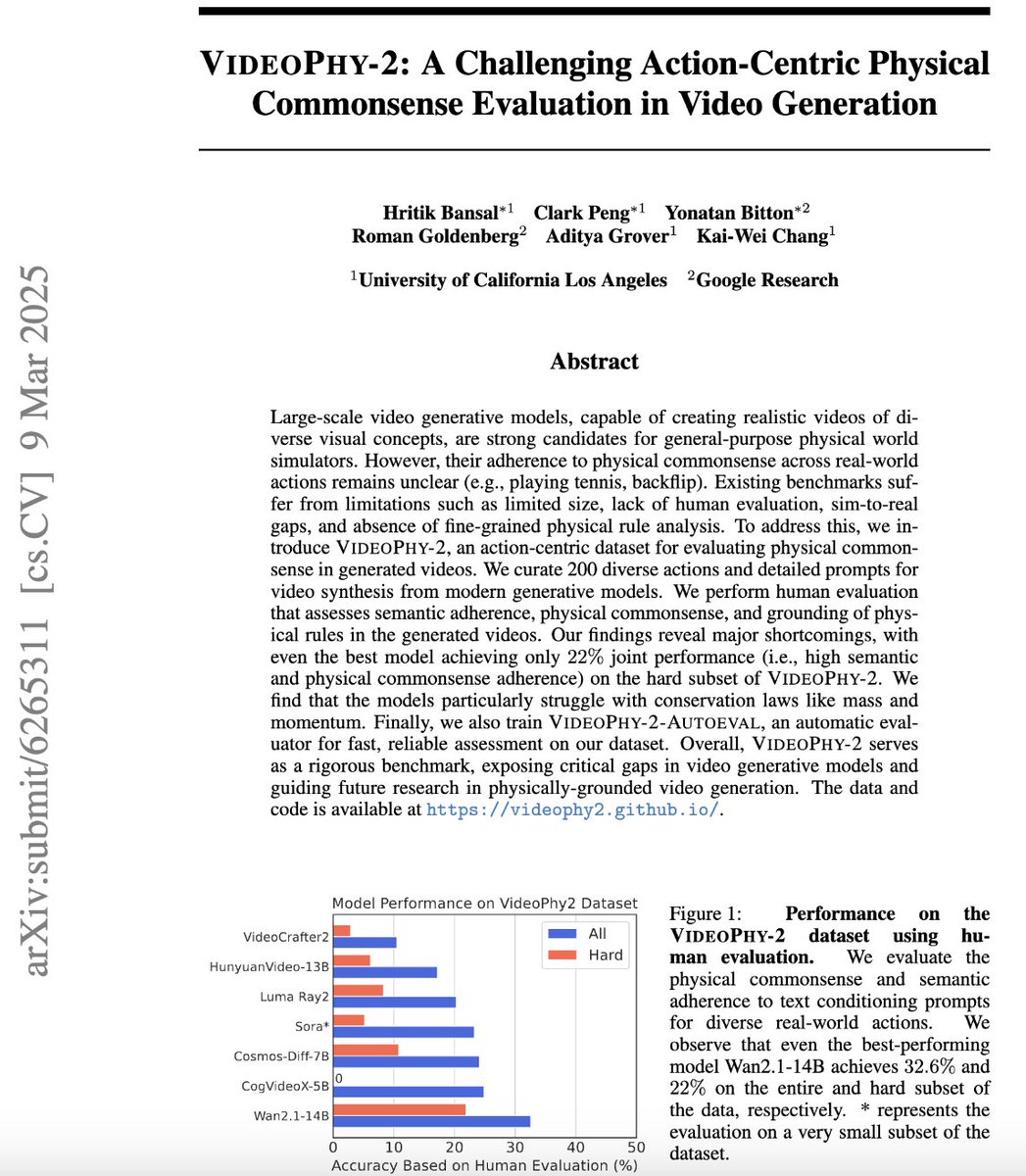

VideoJAM is our new framework for improved motion generation from AI at Meta. We show that video generators struggle with motion because the training objective favors appearance over dynamics. VideoJAM directly addresses this **without any extra data or scaling** 👇🧵

Our paper "A Practical Method for Generating String Counterfactuals" has been accepted to the Findings of NAACL 2025! A joint work with Matan Avitan, (((ل()(ل() 'yoav))))👾 and Ryan Cotterell. We propose "Counterfactual Lens", a technique to explain intervention in natural language. (1/6)

🎉 I'm happy to share that our paper, Make It Count, has been accepted to #CVPR2025! A huge thanks to my amazing collaborators: Yoad Tewel, Hilit Segev, Eran Hirsch, Royi Rassin, and Gal Chechik! 🔗 Paper page: make-it-count-paper.github.io Excited to share our key findings!

New NeurIPS paper! 🐣 Why do LMs represent concepts linearly? We focus on LMs' tendency to linearly separate true and false assertions, and provide a complete analysis of the truth circuit in a toy model. A joint work with Gilad Yehudai, Tal Linzen, Joan Bruna and Alberto Bietti.

Ron Mokady (@mokadyron): Here is my short and practical thread on how to teach your Text-to-Image model to generate readable text: [1/n]

![Ron Mokady (@mokadyron) on Twitter photo](https://pbs.twimg.com/media/GutOJJQW4AAi0xy.jpg)