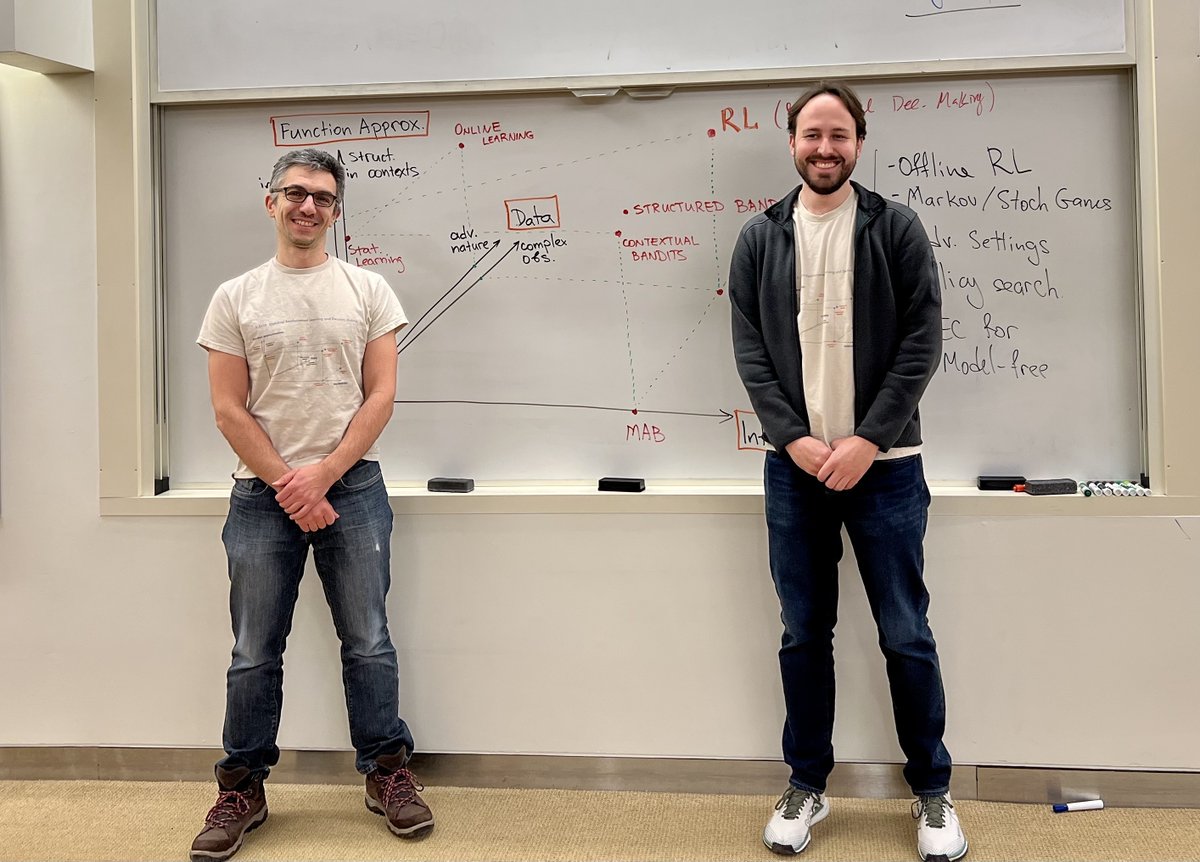

Alberto Bietti

@albertobietti

Machine learning research. Research scientist @FlatironCCM, previously @MetaAI, @NYUDataScience, @Inria, @Quora.

ID: 11056912

http://alberto.bietti.me 11-12-2007 18:03:01

1.1K Tweets

1.1K Followers

1.1K Following

Want to do research in ML for science in NYC and develop the next generation of foundation models for scientific data? Apply here to be a postdoc at PolymathicAI Simons Foundation: forms.gle/JbdWA3VczH3Xfg…

Join us at PolymathicAI at Simons Foundation's Flatiron Institute to create AI models for science! Opportunities available for postdoctoral candidates! Google form below: forms.gle/JbdWA3VczH3Xfg…

Training LLMs involves learning associations. We study training dynamics in a simple model that yields useful insight on the role of token interference and imbalance. Go talk to Vivien Cabannes and Berfin Simsek @ ICML at our #ICML poster #1114 tmrw 11:30! Paper: arxiv.org/abs/2402.18724

Want to build powerful AI for Science? We at PolymathicAI are building foundation models for science 🔥 at Flatiron Institute. Join us (Alberto Bietti, Miles Cranmer, Kyunghyun Cho, Michael Eickenberg, Siavash Golkar, Mariel Pettee, Francois Lanusse, Mike McCabe, Tom Hehir, Rudy Morel,

Some exciting PolymathicAI news... We're expanding!! New Research Software Engineer positions opening in Cambridge UK, NYC, and remote. Come build generalist foundation models for science with us! Please indicate your interest on the form here: docs.google.com/forms/d/e/1FAI…

I'm looking for more reviewers (especially in optimization theory) for Transactions on Machine Learning Research. Please reach out with your email (you can send it to me in DM) if you're interested in becoming a reviewer! TMLR has the best system I know: 1. You don't get assigned more than 1 submission at a