Richard Futrell

@rljfutrell

Language Science at University of California, Irvine

Information theory and language

ID: 846190375589089280

http://socsci.uci.edu/~rfutrell 27-03-2017 02:41:21

787 Tweets

2.2K Followers

767 Following

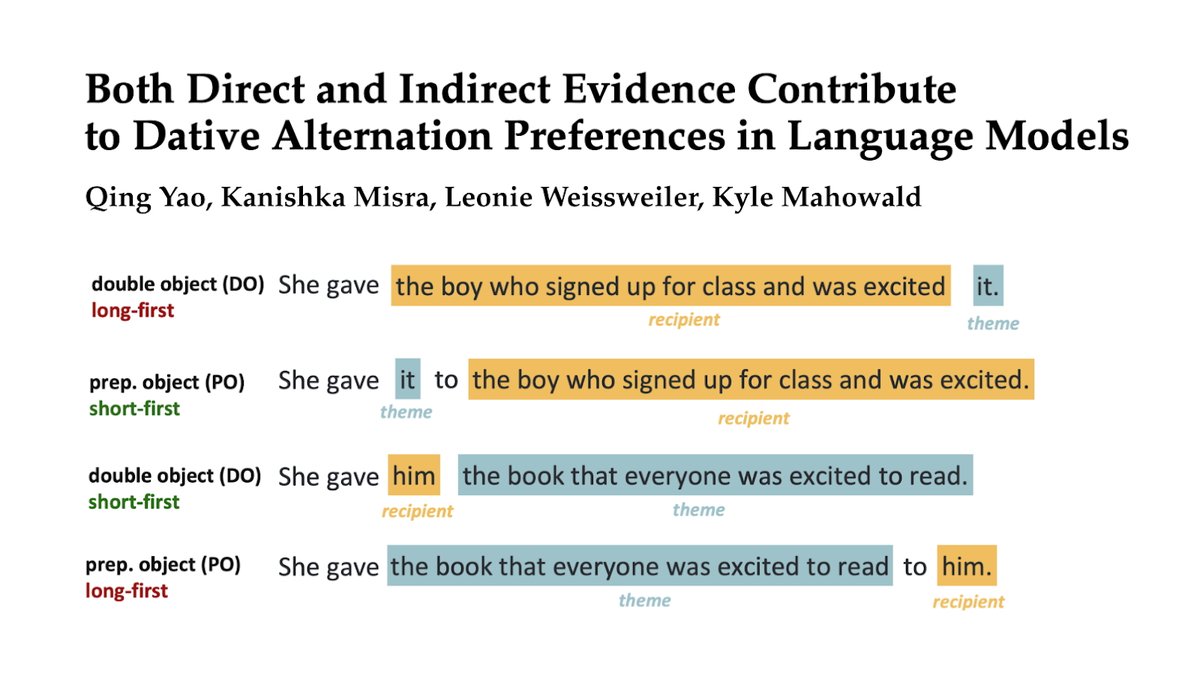

LMs learn argument-based preferences for dative constructions (preferring the recipient first when it's shorter), largely consistent with humans. Is this just from memorizing the preferences in their training data? New paper w/ Kanishka Misra 🌊, Leonie Weissweiler, Kyle Mahowald

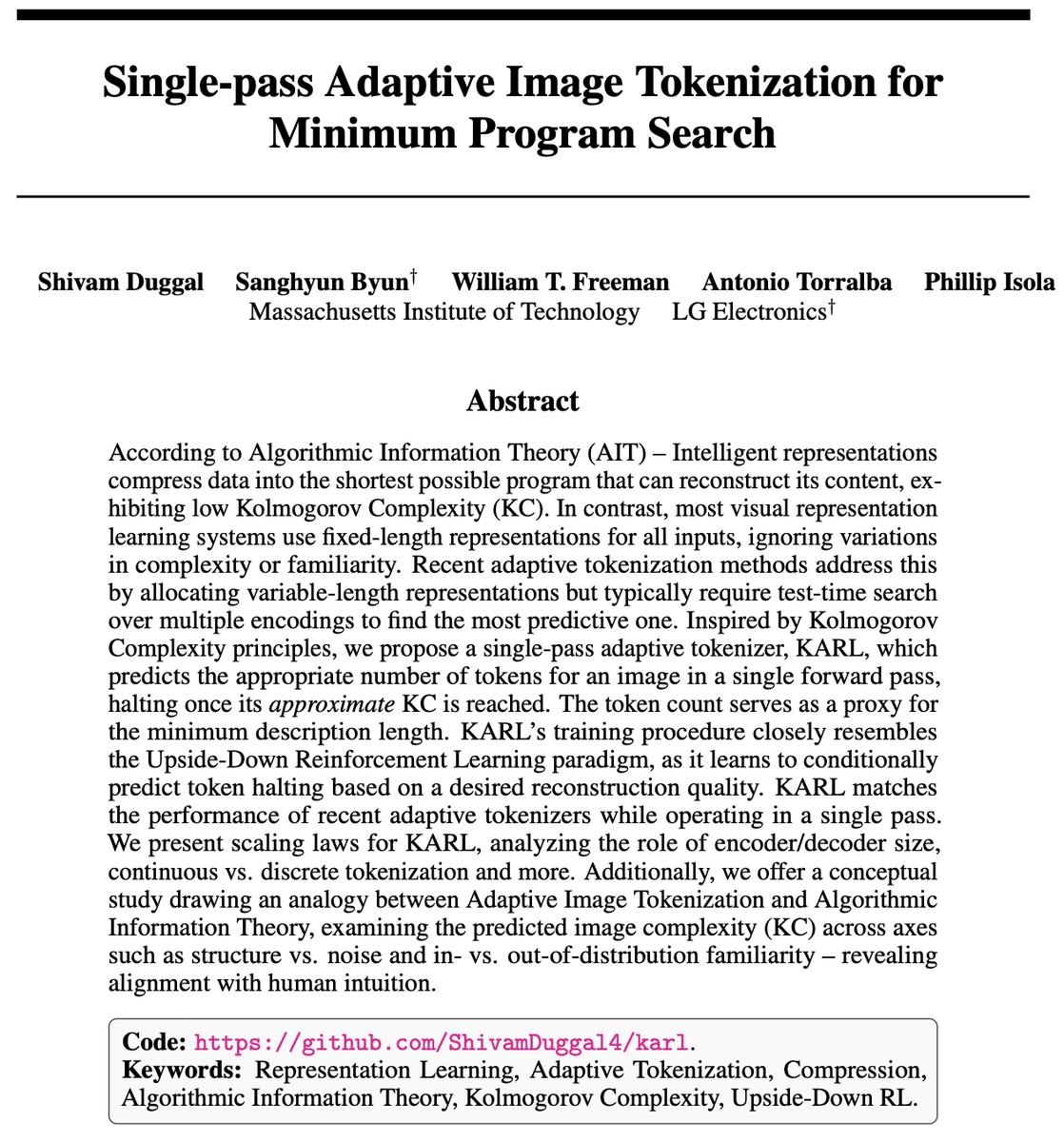

Neural scaling laws are powerful and predictive, but what sets the exponent? Previous work links it to power-law data statistics, echoing classical results of kernel theory. arxiv.org/abs/2505.07067 shows that hierarchical structure matters more. Accepted at ICML 2025 🎉

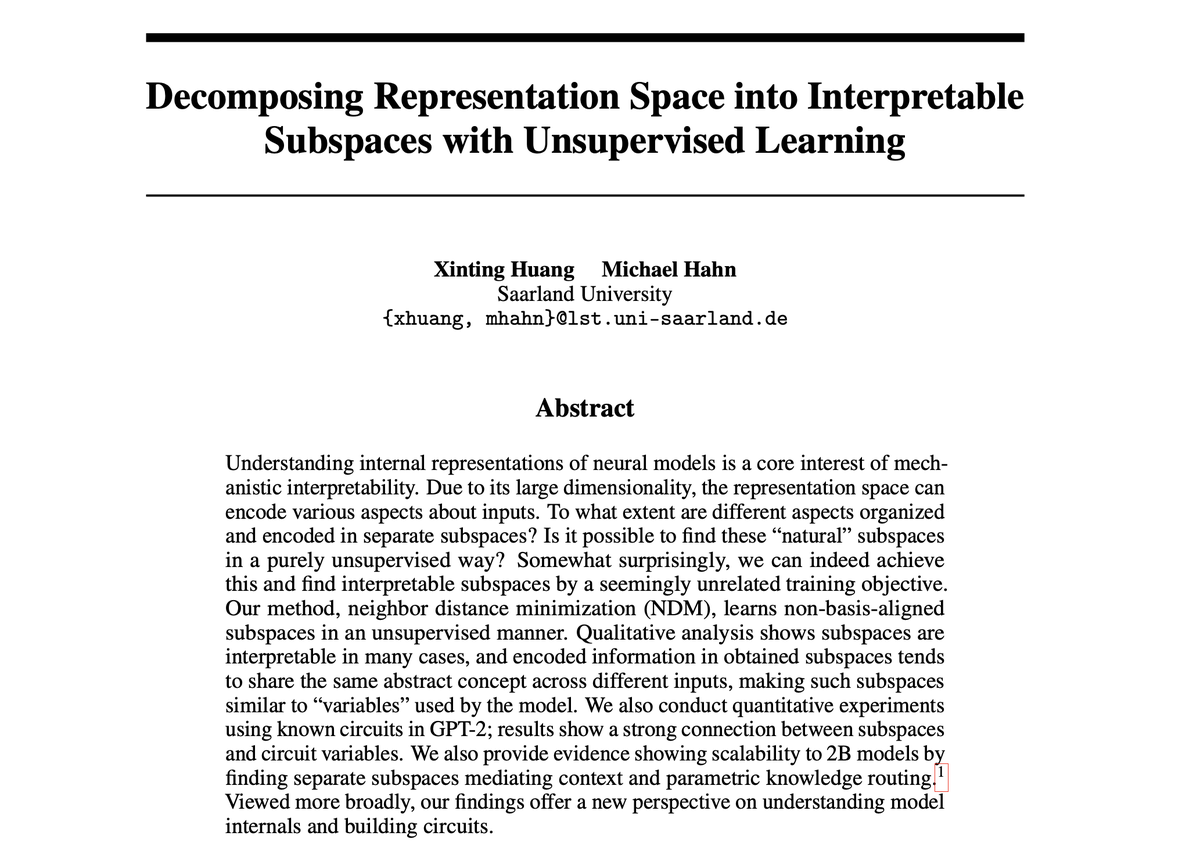

A key hypothesis in the history of linguistics is that different constructions share underlying structure. We take advantage of recent advances in mechanistic interpretability to test this hypothesis in Language Models. New work with Kyle Mahowald and Christopher Potts! 🧵👇

1/9 Thrilled to share our recent theoretical paper (with Griffiths Computational Cognitive Science Lab) on human belief updating, now published in Psychological Review! A quick 🧵:

Congrats to Weijie Xu, fourth-year UC Irvine language science grad student & recipient of the UCI Social Sciences Outstanding Scholarship award! The faculty-nominated award recognizes an outstanding grad student for high intellectual scholarship & achievement. socsci.uci.edu/newsevents/new…

new paper! robust and general "soft" preferences are a hallmark of human language production. we show that these emerge from *any* policy minimizing an autoregressive memory-based cost function. w/ Richard Futrell & Dan Jurafsky

Honored to have received a Senior Area Chair award at #ACL2025 for our Prosodic Typology paper. Huge shout out to the whole team: Cui Ding, Tiago Pimentel, Alex Warstadt, Tamar Regev!