Daniel Han

@danielhanchen

Building @UnslothAI. Finetune LLMs 30x faster https://t.co/aRyAAgKOR7. Prev ML at NVIDIA. Hyperlearn used by NASA. I like maths, making code go fast

ID:717359704226172928

https://unsloth.ai/ 05-04-2016 14:34:16

703 Tweets

7.0K Followers

930 Following

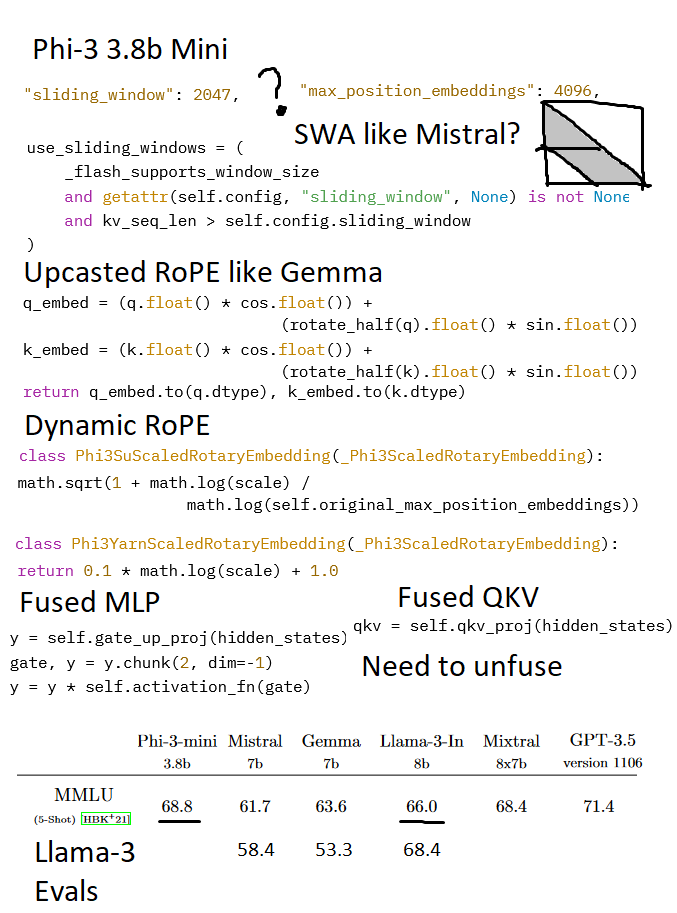

Phi 3 (3.8B) got released! The paper said it was just a Llama arch, but I found some quirks while adding this to Unsloth AI:

1. Sliding window of 2047? Mistral v1 4096. So does Phi mini have SWA? (And odd num?) Max RoPE position is 4096?

2. Upcasted RoPE? Like Gemma?

3. Dynamic
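To make the sliding window question in point 1 concrete, here is a minimal sketch of a causal sliding-window attention mask. It assumes one possible convention: each query sees itself plus at most `window` earlier tokens. Whether Phi-3 actually follows this convention is not confirmed by the tweet, and the names `sliding_window_mask` and `visible_keys` are hypothetical helpers, not part of Unsloth or any library.

```python
def sliding_window_mask(seq_len, window):
    """Build a seq_len x seq_len boolean mask.

    mask[i][j] is True when query position i may attend to key position j.
    Assumed convention (not confirmed for Phi-3): a query sees itself
    plus at most `window` earlier positions, and never any later position.
    """
    return [
        [(j <= i) and (i - j <= window) for j in range(seq_len)]
        for i in range(seq_len)
    ]


def visible_keys(position, window):
    """Count the keys visible to the query at a 0-indexed position."""
    return min(position, window) + 1


if __name__ == "__main__":
    # Small example: 6 tokens, window of 2 earlier tokens.
    for row in sliding_window_mask(6, 2):
        print("".join("x" if allowed else "." for allowed in row))
    # Under this convention a window of 2047 gives 2048 visible keys.
    print(visible_keys(4095, 2047))
```

Under this assumed convention, an odd window of 2047 means a query late in the sequence attends to 2048 keys in total (itself plus 2047 earlier tokens), which is one possible reading of the odd number.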

Phi-3 Mini 3.8b Instruct is out!!

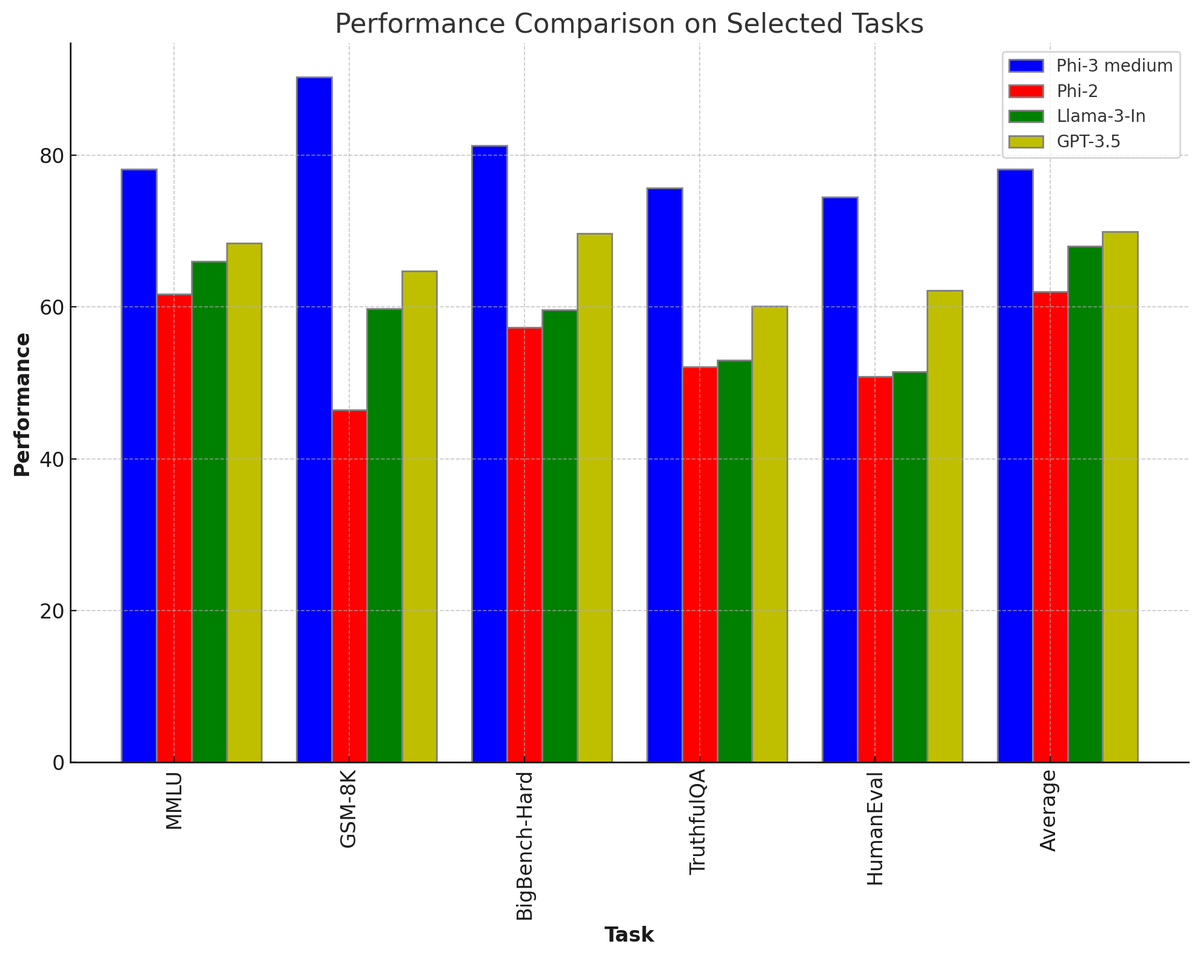

68.8 MMLU vs Llama-3 8b Instruct's 66.0 MMLU (Phi team's own evals)

The long context 128K model is also out at huggingface.co/microsoft/Phi-…

Working on adding this into Unsloth AI! Some fused linear modules need unfusing :)

huggingface.co/microsoft/Phi-…

Come build with us and get $100K+ in credits!

Build Club have just launched a 6-week AI accelerator for top builders, backed by AWS 🚀✨

Build with the likes of Daniel Han, Micah Hill-Smith and receive mentorship with swyx, Logan Kilpatrick + more...

Details 🧵

For burning Qs, if you don't know, Unsloth AI has a wiki page at github.com/unslothai/unsl…!

1. How to update Unsloth so Llama-3 works

2. Fix OOM in eval loops

3. Train lm_head, embed_tokens

4. Resume from checkpoint

5. Saving to GGUF

6. Chat templates

7. Enable 2x faster inference