piushvaish

@piushvaish

Curiosity-Driven Creative Adventurer

Using data and technology to create new opportunities and develop innovative solutions.

#DataScience #MachineLearning #AI

ID: 90139401

https://adataanalyst.com 15-11-2009 10:40:53

2.2K Tweets

119 Followers

558 Following

Introducing Jamba, our groundbreaking SSM-Transformer open model! As the first production-grade model based on the Mamba architecture, Jamba achieves an unprecedented 3X throughput and fits 140K context on a single GPU. 🥂 Meet Jamba: ai21.com/jamba 🔨 Build on Hugging Face

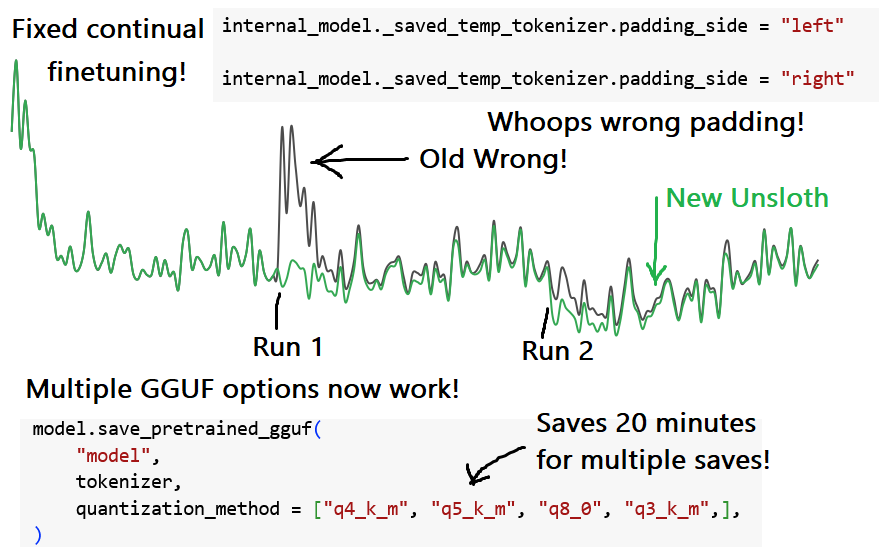

Fixed continual finetuning in Unsloth AI! When continuing to finetune LoRA adapters, the loss went haywire because I had accidentally set the tokenizer's padding side to "left", not "right", on new runs! Whoops! + Save 20 mins with multi GGUF options! Colab for both: colab.research.google.com/drive/1rU4kVb9…
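To make the padding-side mix-up in the post above concrete, here is a minimal pure-Python sketch of what "left" versus "right" padding does to a batch. The `pad_batch` helper and the token IDs are hypothetical and for illustration only; this is not Unsloth's code. In Hugging Face tokenizers the corresponding setting is the `padding_side` attribute.

```python
from typing import List, Tuple


def pad_batch(
    sequences: List[List[int]],
    pad_id: int = 0,
    padding_side: str = "right",
) -> Tuple[List[List[int]], List[List[int]]]:
    """Pad token-ID sequences to a common length (hypothetical helper).

    Returns (input_ids, attention_mask); mask entries are 1 for real
    tokens and 0 for padding.
    """
    if padding_side not in ("left", "right"):
        raise ValueError("padding_side must be 'left' or 'right'")

    max_len = max(len(seq) for seq in sequences)
    input_ids, attention_mask = [], []
    for seq in sequences:
        n_pad = max_len - len(seq)
        pads = [pad_id] * n_pad
        if padding_side == "right":
            # Real tokens first, padding appended at the end.
            input_ids.append(list(seq) + pads)
            attention_mask.append([1] * len(seq) + [0] * n_pad)
        else:
            # Padding first, real tokens pushed to the end.
            input_ids.append(pads + list(seq))
            attention_mask.append([0] * n_pad + [1] * len(seq))
    return input_ids, attention_mask


# Made-up token IDs, chosen only to show where the padding lands.
batch = [[5, 6, 7], [8, 9]]
right_ids, right_mask = pad_batch(batch, padding_side="right")
left_ids, left_mask = pad_batch(batch, padding_side="left")
print(right_ids, right_mask)
print(left_ids, left_mask)
```

The two settings place real tokens at different positions in the shorter sequence, so a run that resumes with a different side than the earlier one feeds the model differently laid-out batches.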

New Mistral AI cookbook: Self-Supervised Prompt Optimization ❌ Prompt engineering... sucks. It's a non-standard process, heavily relying on trial and error and difficult to standardize 🤩 Luckily, we can automate it using ✨prompt optimization✨, investigated in recent works