Pierre Fernandez

@pierrefdz

Researcher (Meta, FAIR Paris) • Working on AI, watermarking and data protection • ex. @Inria, @Polytechnique, @UnivParisSaclay (MVA)

ID: 1325092743073443840

https://pierrefdz.github.io/

07-11-2020 15:08:36

123 Tweets

505 Followers

226 Following

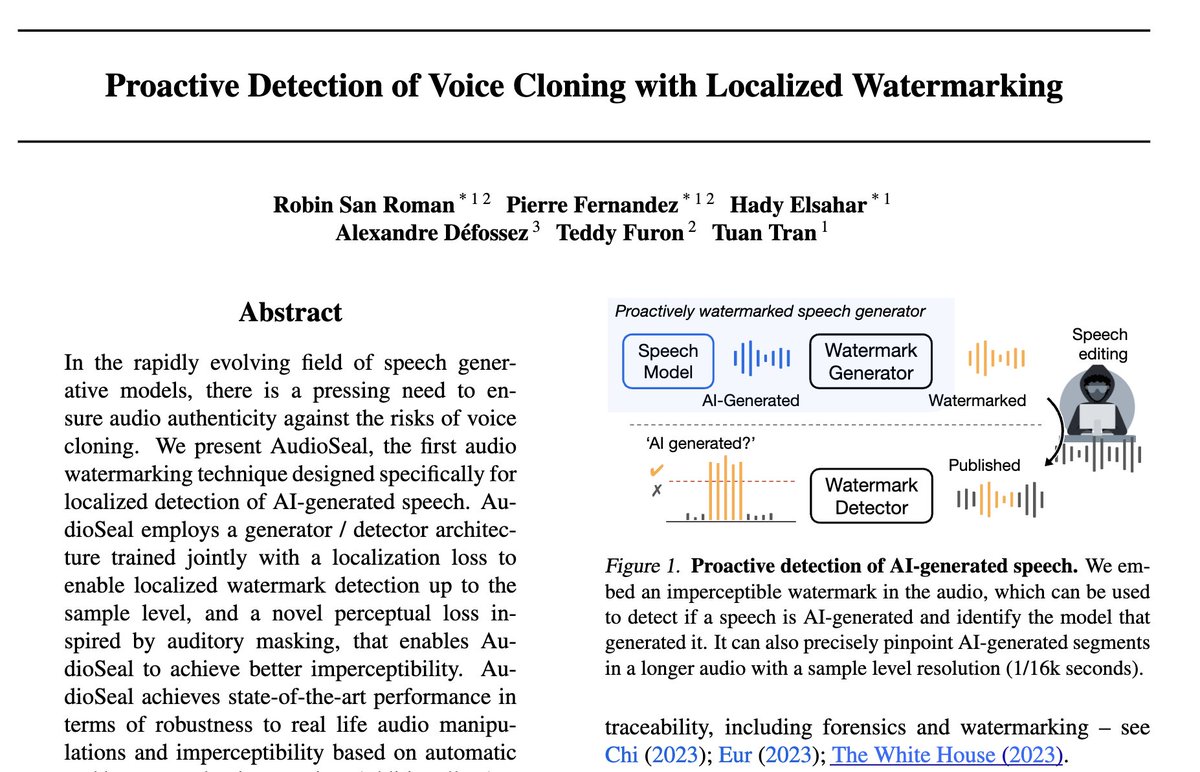

AudioSeal is accepted at #ICML2024! 🚀 AI at Meta's AudioSeal is the state-of-the-art audio watermarking model designed for deepfake mitigation. It is robust, lightning-fast & imperceptible ⚡️ 📄 paper: arxiv.org/abs/2401.17264 🔗 code (commercial friendly): github.com/facebookresear…

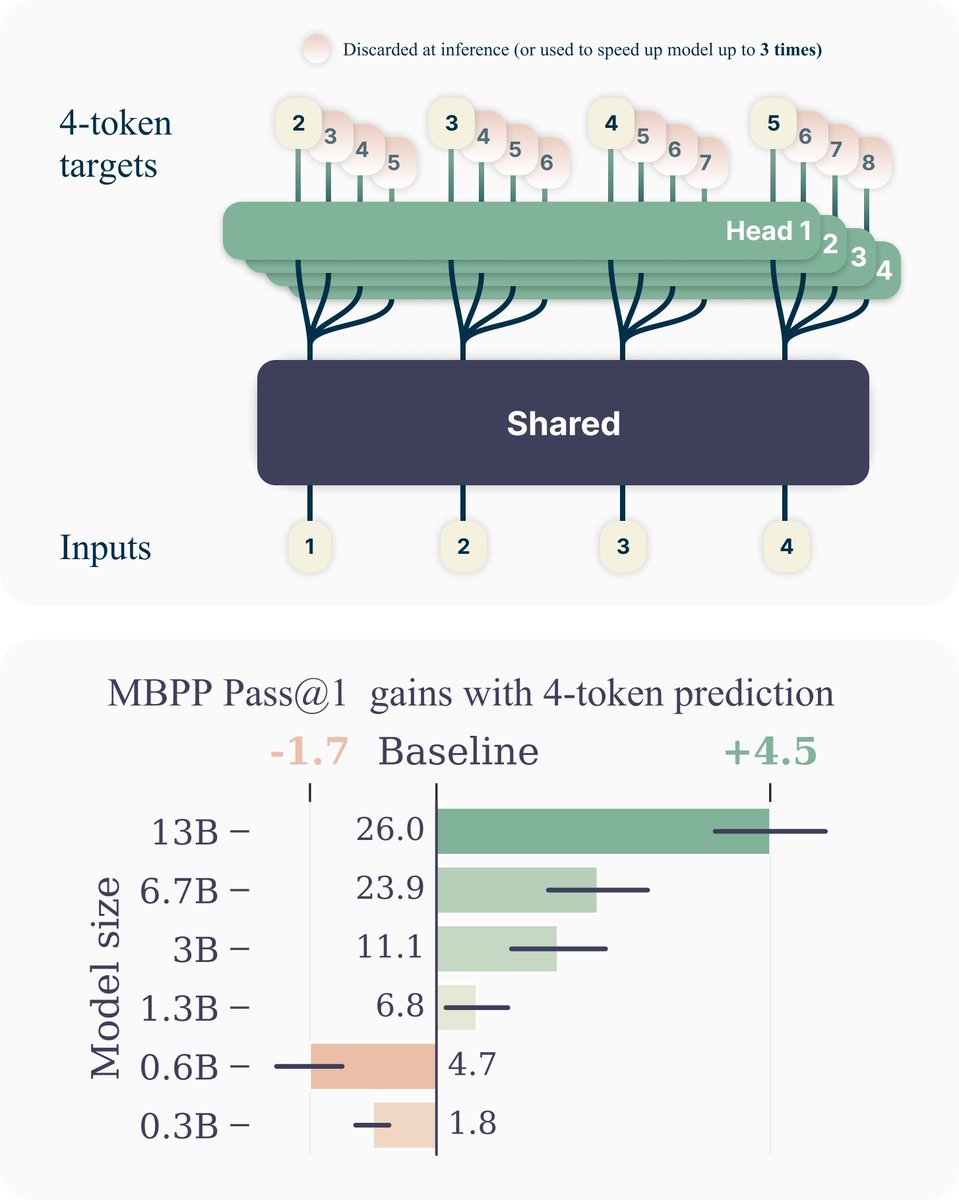

What happens if we make language models predict several tokens ahead instead of only the next one? In this paper, we show that multi-token prediction boosts language model training efficiency. 🧵 1/11 Paper: arxiv.org/abs/2404.19737 Joint work with Fabian Gloeckle

Watermarking Audio to Detect Voice Cloning 🧵📖 Read of the day, day 100: Proactive Detection of Voice Cloning with Localized Watermarking, by Robin San Roman, Pierre Fernandez et al. from AI at Meta arxiv.org/pdf/2401.17264 The authors introduce a method to train a pair of models to

🔐 Introducing zkDL++, a cutting-edge framework for proving the integrity of any deep neural network. 💡 Demo: provable watermark extraction for AI at Meta's Stable Signature 🔍 Dive into our preliminary report for more details: hackmd.io/@Ingonyama/zkd…

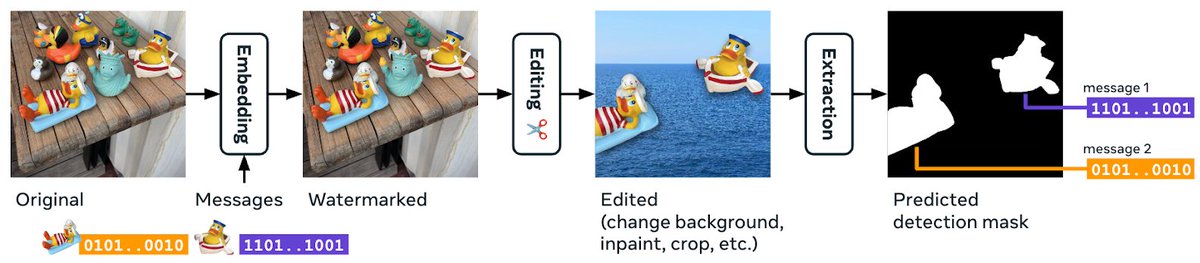

🔒 Image watermarking is promising for digital content protection. But images often undergo many modifications: they are spliced or altered by AI. Today at AI at Meta, we released Watermark Anything, which answers not only "where does the image come from?" but also "which part comes from where?" 🧵

Amazing work led by Tom Sander and Pierre Fernandez

[LG] A Watermark for Black-Box Language Models

D Bahri, J Wieting, D Alon, D Metzler [Google DeepMind] (2024)

arxiv.org/abs/2410.02099

![fly51fly (@fly51fly) on Twitter photo](https://pbs.twimg.com/media/GZDfd4KbMAAlUQX.jpg)