Tejesh Bhalla

@og_tejeshbhalla

@theagentic

ID: 1188159594318594048

26-10-2019 18:25:04

972 Tweets

51 Followers

315 Following

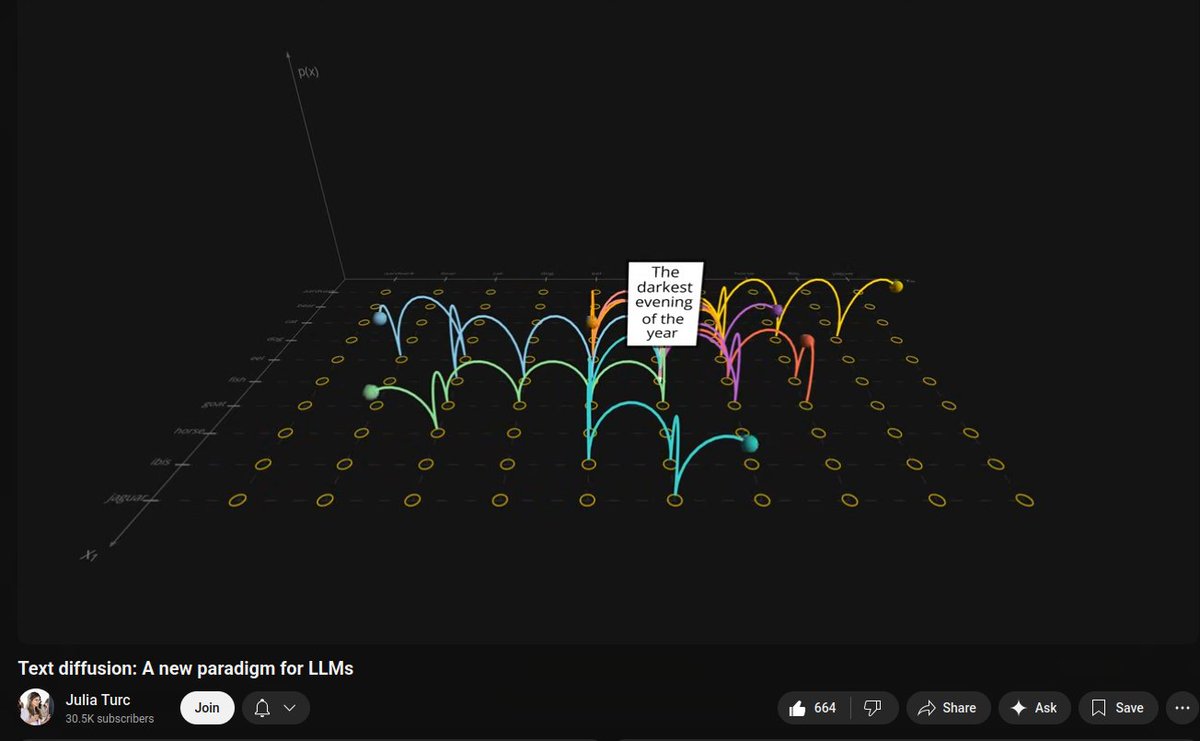

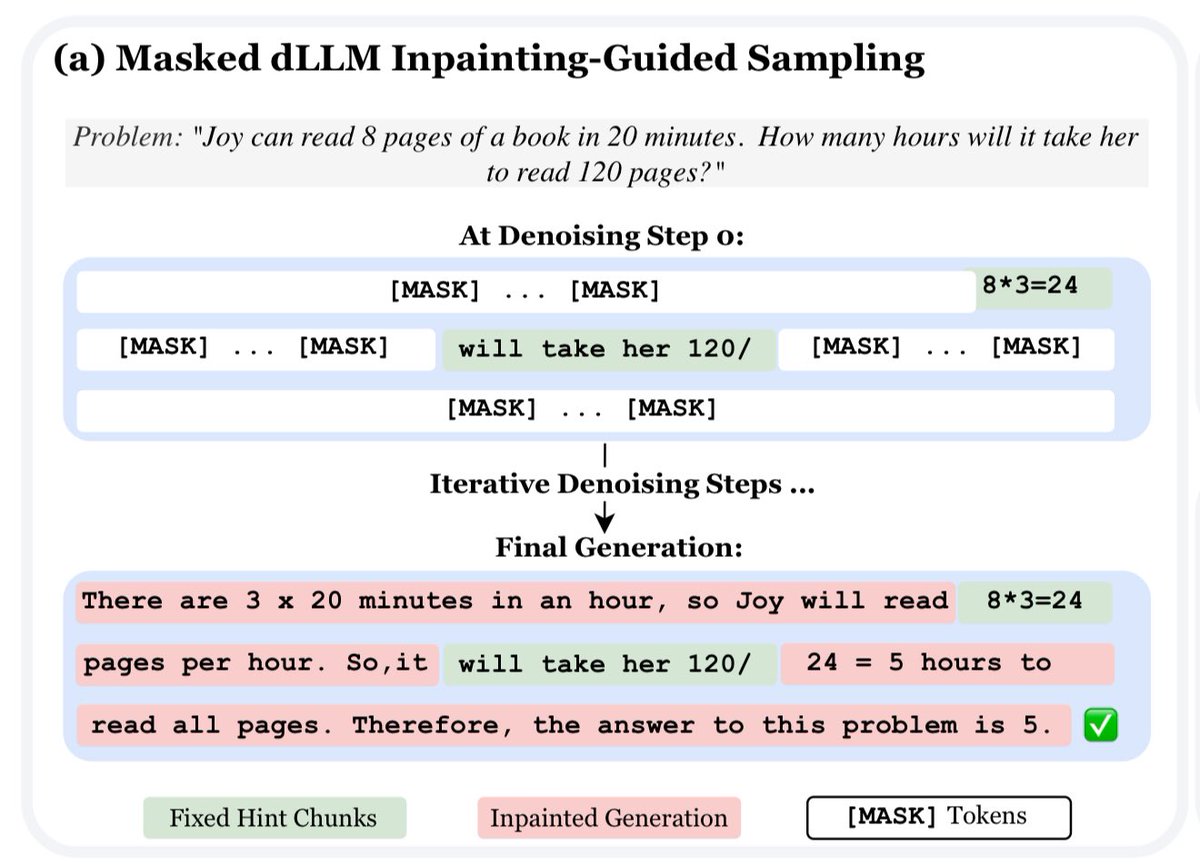

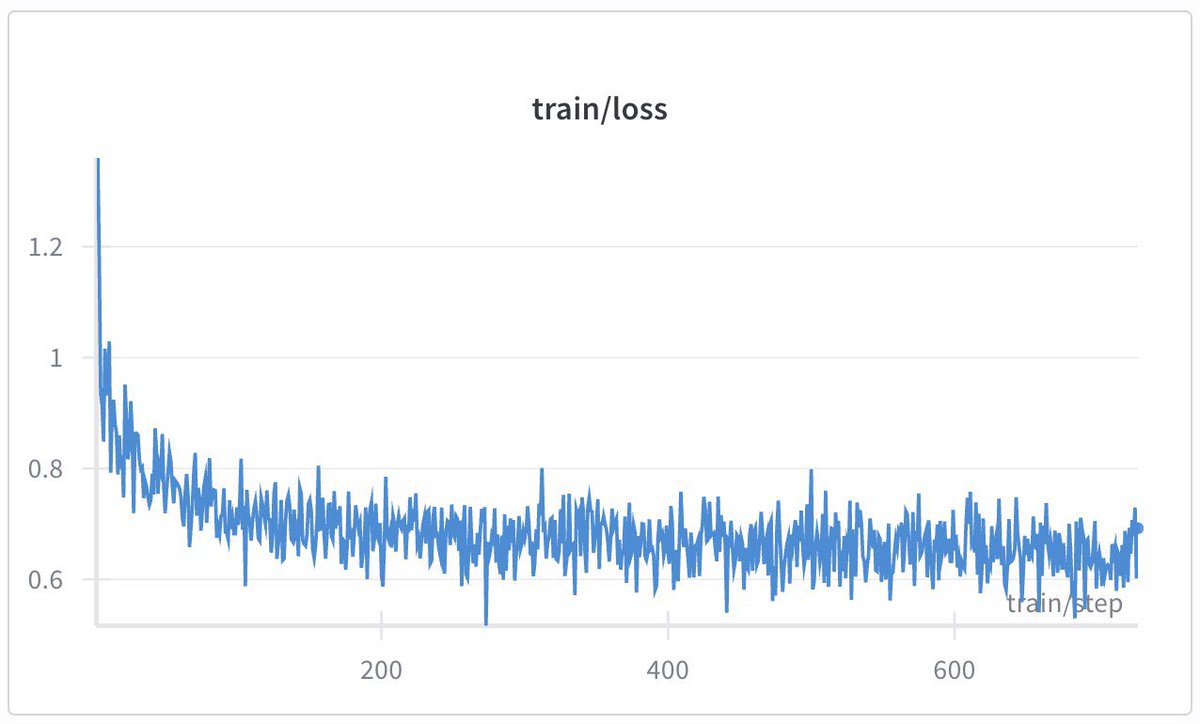

If you've ever wondered how diffusion models can be used for text generation (like in those blazing-fast coding demos), check out Julia Turc's latest tutorial. In 24 minutes you'll get the main strategies behind D3PM/LLaDA, their inference/training tradeoffs, and the math intuition behind them.