Neeraj Kumar Pandit

@neeraj_compchem

PhD Student, University of Göttingen. Interested in computational chemistry and machine learning

ID: 1270629660150452225

10-06-2020 08:12:12

63 Tweets

168 Followers

266 Following

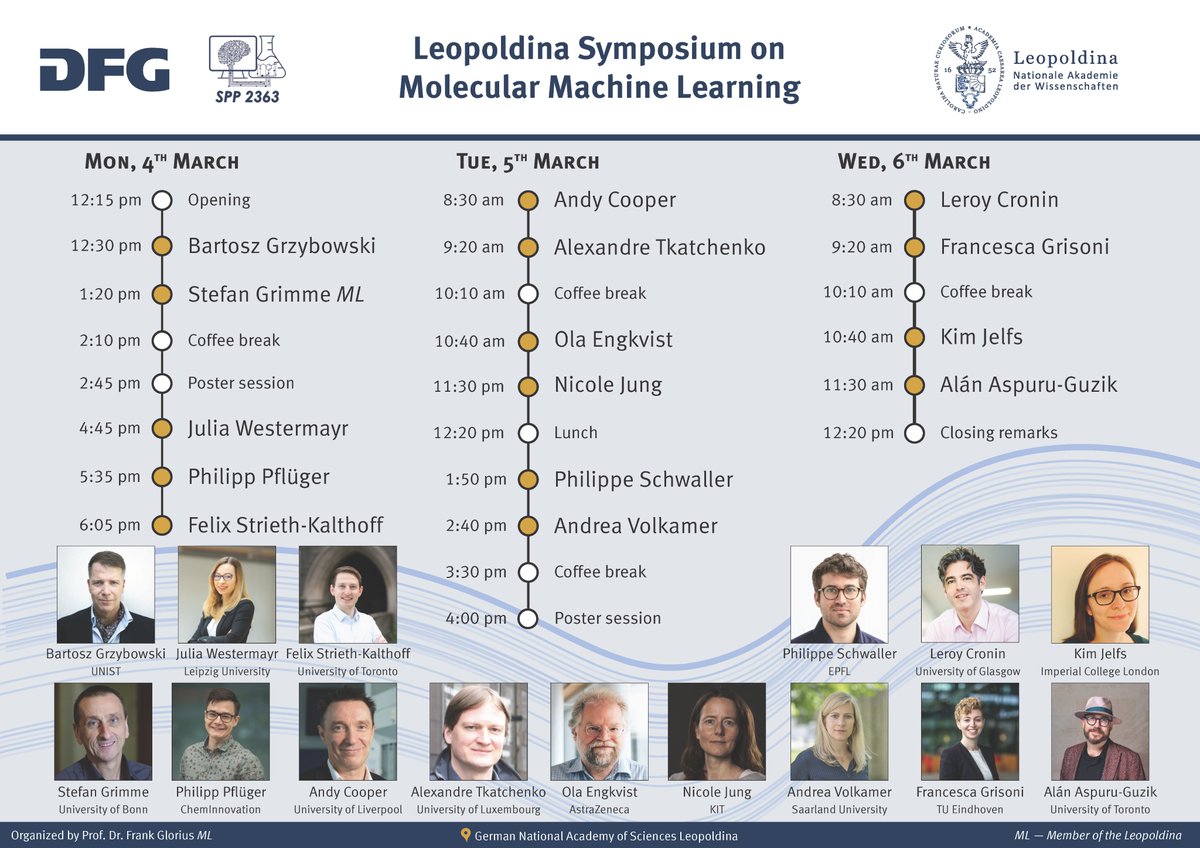

Leopoldina Symposium on Molecular Machine Learning: 4 to 6 March in Halle/Saale (National Academy). Amazing line-up👍, free registration!😀 Nationale Akademie der Wissenschaften Leopoldina SPP 2363 DFG public | @[email protected]

Great demonstration of why handling conformers correctly matters in predicting reaction selectivity, from Rubén Laplaza and Clemence Corminboeuf - in computational chemistry it's easy to be right, or wrong, for bad reasons! (dx.doi.org/10.26434/chemr…)

Peter Shor wins the 2025 Shannon Award. Well deserved! Read more here: itsoc.org/news/shannon-a…

This is Sayash Kapoor calmly dismantling the hype around AI scaling laws during our discussion of the article he published with Arvind Narayanan earlier today. This interview slapped. #ICML2024 is a wrap!

Our work applying Marcus theory to estimate energy-transfer kinetics is now available online in Chemical Science! Check it out here: doi.org/10.1039/D4SC03… Maseras Group ICIQ

Chemists often combine many different techniques to elucidate structures. Adrian Mirza has been building a system that mimics this using machine-learning models and genetic algorithms.