Markus Hofmarcher

@mrkhof

PhD student @ JKU Linz, Institute for Machine Learning

ID: 1070444434083459073

05-12-2018 22:26:55

24 Tweets

96 Followers

18 Following

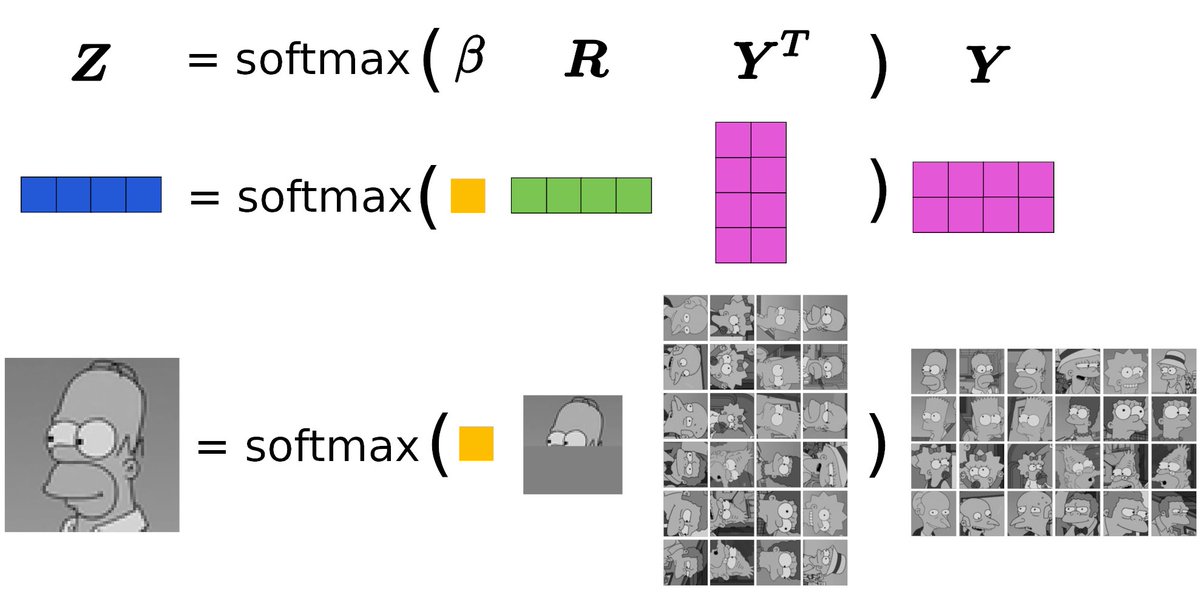

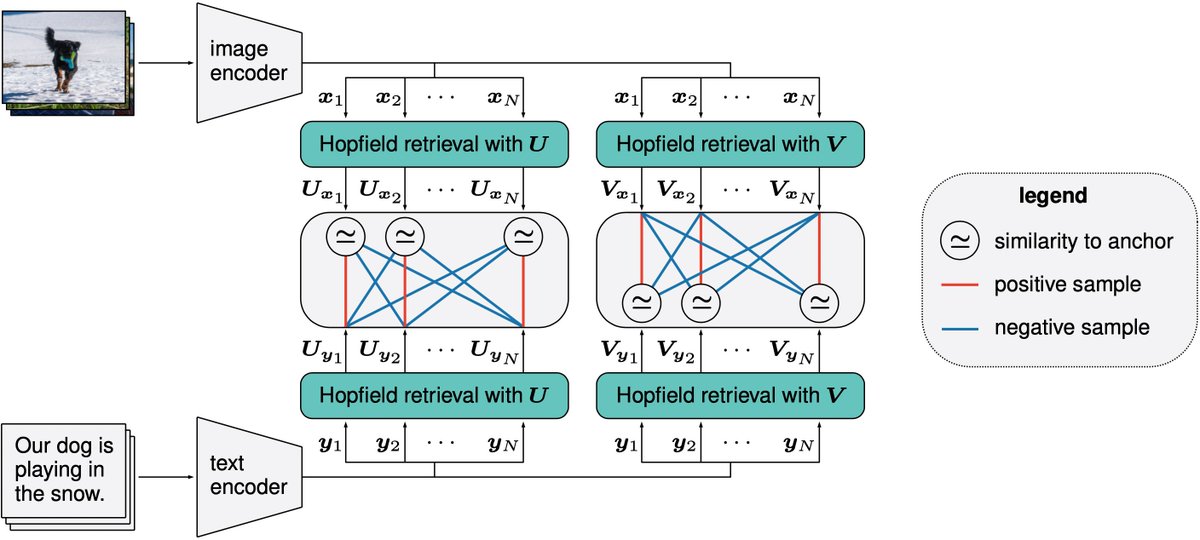

Our paper "Hopfield Networks is All You Need" is accepted at #ICLR2021. Time to give some talks :) I am very honored to present our research today at the great platform of ML Collective Rosanne Liu (mlcollective.org/dlct/).

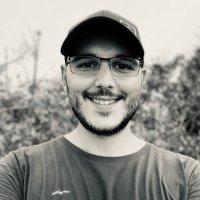

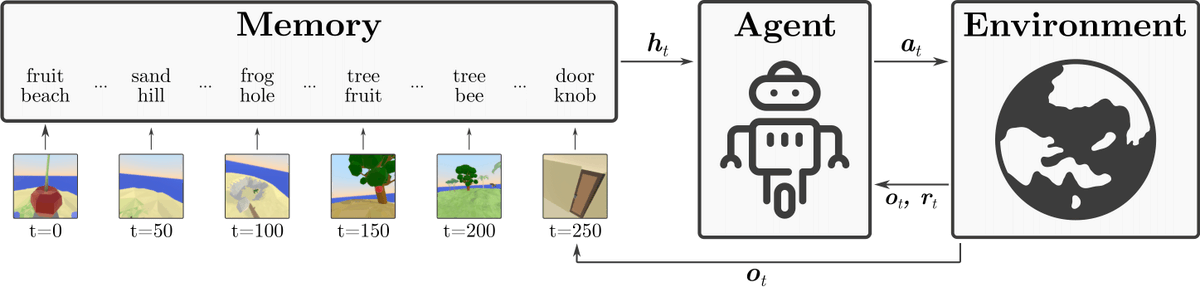

Excited to share our recent work on parameter-efficient fine-tuning in RL. We pre-train a Decision Transformer (DT) on 50 tasks from two domains, and subsequently fine-tune on various downstream tasks. Joint work with Markus Hofmarcher, Fabian Paischer, Razvan, and Sepp Hochreiter. 1/n

Personal update: last month, I re-joined the group of my mentor Sepp Hochreiter and my amazing colleague Günter Klambauer in Linz, opening my own group "AI for data-driven simulations". We all share the vision to create a large-scale AI ecosystem in Linz. Big news to come soon 🚀

We are excited to present our work, combining the power of a symbolic approach and Large Language Models (LLMs). Our Symbolic API bridges the gap between classical programming (Software 1.0) and differentiable programming (Software 2.0). GitHub: github.com/Xpitfire/symbo… [1/n]

![Marius-Constantin Dinu (@dinumariusc) on Twitter photo](https://pbs.twimg.com/media/Fm6dwWwaMAAVKye.jpg)

Excited to present our work “Large Language Models Can Self-Improve At Web Agent Tasks”. We show that synthetic data self-improvement boosts task completion by 31% on WebArena and introduce quality metrics for measuring autonomous agent workflows. #AI #MachineLearning #LLMs [1/n]

![Marius-Constantin Dinu (@dinumariusc) on Twitter photo](https://pbs.twimg.com/media/GO6KikDaoAEisGP.png)