Mojan Javaheripi

@mojan_jp

Senior Researcher @MSFTResearch working on physics of LLMs. Phi pretraining. CE PhD from @UCSanDiego

ID: 1199219580998045696

http://acsweb.ucsd.edu/~mojavahe 26-11-2019 06:53:34

28 Tweets

279 Followers

125 Following

I'm excited for our NeurIPS LLM Efficiency Competition workshop tomorrow: 1LLM + 1GPU + 1Day! Stop by at 1:30 CT to see Weiwei Yang (MSR), Mark Saroufim, Jeremy Howard, Sebastian Raschka, Ao Liu, Tim Dettmers, @sourab_m, Keming (Luke) Lu, Mojan Javaheripi, Leshem (Legend) Choshen 🤖🤗 @ACL, Vicki Boykis, and Christian Puhrsch.

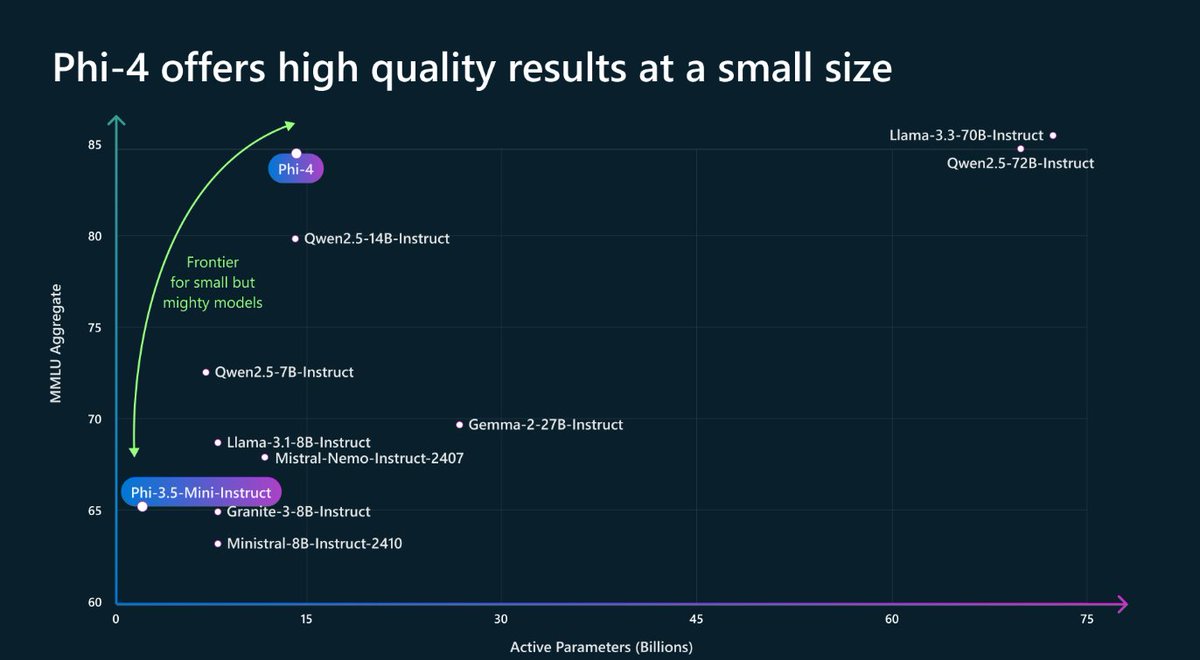

🚀 Phi-4 is here! A small language model that performs as well as (and often better than) large models on certain types of complex reasoning tasks such as math. It has been useful for us in Microsoft Research, and it is available now for all researchers on Azure AI Foundry! aka.ms/phi4blog

Nice summary of more cool results for Phi-4-Reasoning by Dimitris Papailiopoulos