Armin Ronacher ⇌

@mitsuhiko

Creator of Flask; A decade at @getsentry; Exploring — love API design, Python and Rust. Excited about AI. Husband and father of three — “more nuanced in person”

ID: 12963432

https://mitsuhiko.at 01-02-2008 23:12:59

54,54K Tweets

53,53K Followers

764 Following

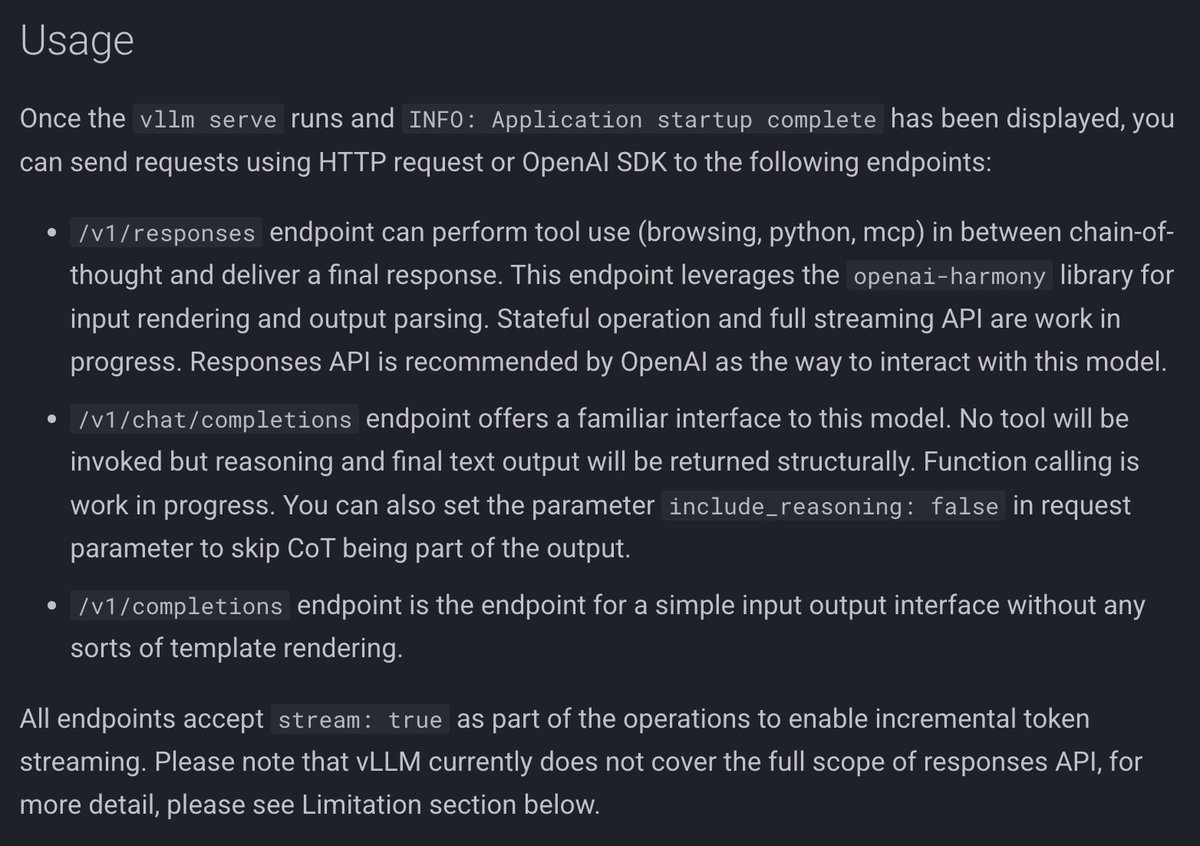

vLLM has put up a recipe for gpt-oss. It details all the limitations. My investigation from yesterday was correct. `chat/completions` doesn't support tool calls at all. That means tools like opencode, qwen-cli, crush, etc. won't work. docs.vllm.ai/projects/recip…