Miranda Bogen

@mbogen

Director of the AI Governance Lab @CenDemTech / responsible AI + policy

ID: 16679560

https://www.mirandabogen.com/ 10-10-2008 04:48:54

2.2K Tweets

2.2K Followers

1.1K Following

Center for Democracy & Technology is excited to see National Institute of Standards and Technology continue its important work to understand the societal impacts of AI, as well as continued momentum from government agencies in response to the President’s Executive Order on AI. x.com/NIST/status/17…

We're excited to announce our detailed schedule featuring amazing speakers (Besmira Nushi 💙💛 Miranda Bogen @Björn Ommer) and panelists (Alex Beutel Milagros Miceli Cristian Canton Chengzhi Mao David Bau ). Huge thanks to Google for their sponsorship! See you #CVPR2025 in Summit Room 433 on 18th!

📢 Timely tech policy job opportunity: work with Princeton SPIA-DC and faculty at Princeton CITP and across the university (including me) to develop programming that helps bridge AI expertise with policy making. Pay: $105-115,000 Details and application: spia.princeton.edu/sites/default/…

Looking forward to a great conversation -- National Academies is hosting a public panel on "AI Risk Management: Evaluation, Testing, and Oversight" with rishi Hanna Wallach (@hannawallach.bsky.social) Miranda Bogen and myself, moderated by William Isaac - register and come along on 20th June! nationalacademies.org/event/42732_06…

TODAY: Center for Democracy & Technology’s #AI Governance Lab Director @MBogen spoke before the @PCLOB_Gov on the challenges that come with the use of #ArtificialIntelligence (AI) in counterterrorism and other national security programs. cdt.org/insights/cdts-…

Join Center for Democracy & Technology’s @MBogen on July 31st alongside @ChiraagBains, @DBrody_, & Prof Spencer Overton for an insightful panel on "what you need to know about #AI," moderated by Jon Greenbaum, Founder of Justice Legal Strategies. RSVP Today: cdt.org/event/what-you…

so proud to release this report with Center for Democracy & Technology alongside coauthors Bonnielin Swenor PhD & Miranda Bogen, in advance of the #ADA anniversary. minimizing the risks of #AlgorithmicBias for #disabled people starts with creating more equitable datasets -- here's how: cdt.org/wp-content/upl…

Call for Tiny Papers! Community Research! Studying Social/Broader Impact! Making Evaluations Better! Submit your 2 pager by Sept 20 on eval perspectives, challenges, validity And come to our #NeurIPS2024 workshop to work together on this part of safety evaleval.github.io/call-for-paper…

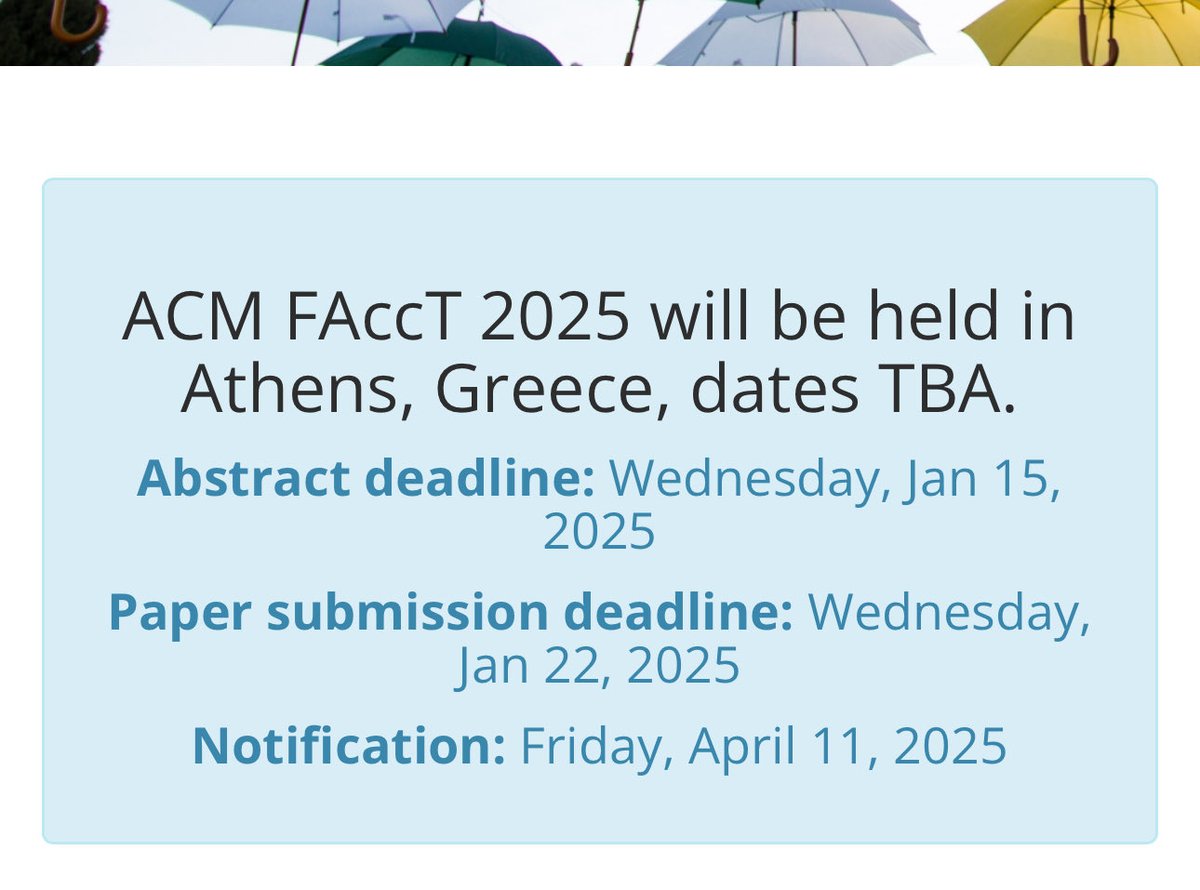

I’m honoured to be one of the ACM FAccT Programme Chairs this year, alongside the amazing Jenn Wortman Vaughan Sina Fazelpour Talia Gillis 🤩 and we’ve been hard at work already. The CFP is coming soon, but the key dates everyone are now set. Happy paper planning 🚀

Companies often attribute their focus on English AI to the lack of resources in non-English langs. New brief written by Evani Radiya-Dixit and Miranda Bogen highlights the incredible work researchers are doing on multilingual AI if only companies wanted to work with them cdt.org/insights/beyon…

If we want to understand and shape how advanced AI behaves, we need to know the rules it’s supposed to follow. This requires a type of transparency that’s different from what most policymakers focus on today. Amy Winecoff, Miranda Bogen, and I explain in a new report for Center for Democracy & Technology:

AI companies are starting to build more and more personalization into their products, but there's a huge personalization-sized hole in conversations about AI safety/trust/impacts. Delighted to feature Miranda Bogen on Rising Tide today, on what's being built and why we should care: