Maximilian Beck

@maxmbeck

PhD Student @ JKU Linz Institute for Machine Learning.

ID:1401163561322389508

http://maxbeck.ai 05-06-2021 13:06:56

110 Tweets

379 Followers

510 Following

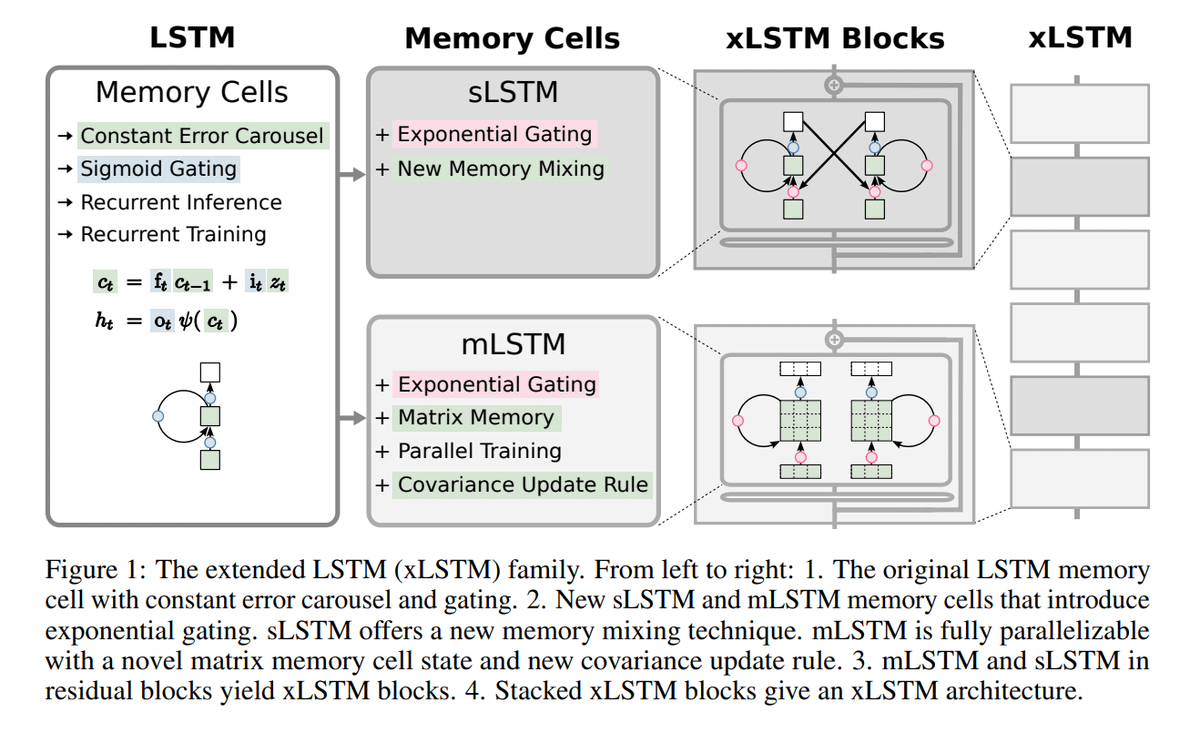

'xLSTM: Extended Long Short-Term Memory' by Maximilian Beck, Korbinian Poeppel, Markus Spanring, Andreas Auer, Günter Klambauer, Johannes Brandstetter, Sepp Hochreiter and co-authors signals a potential shift in the landscape of natural language processing technologies.


Meet DBRX, a new SOTA open LLM from Databricks. It's a 132B-parameter MoE with 36B active params, trained from scratch on 12T tokens. It sets a new bar on all the standard benchmarks, and, as an MoE, its inference is blazingly fast. Simply put, it's the model your data has been waiting for.