Marco Virgolin 🇺🇦

@marcovirgolin

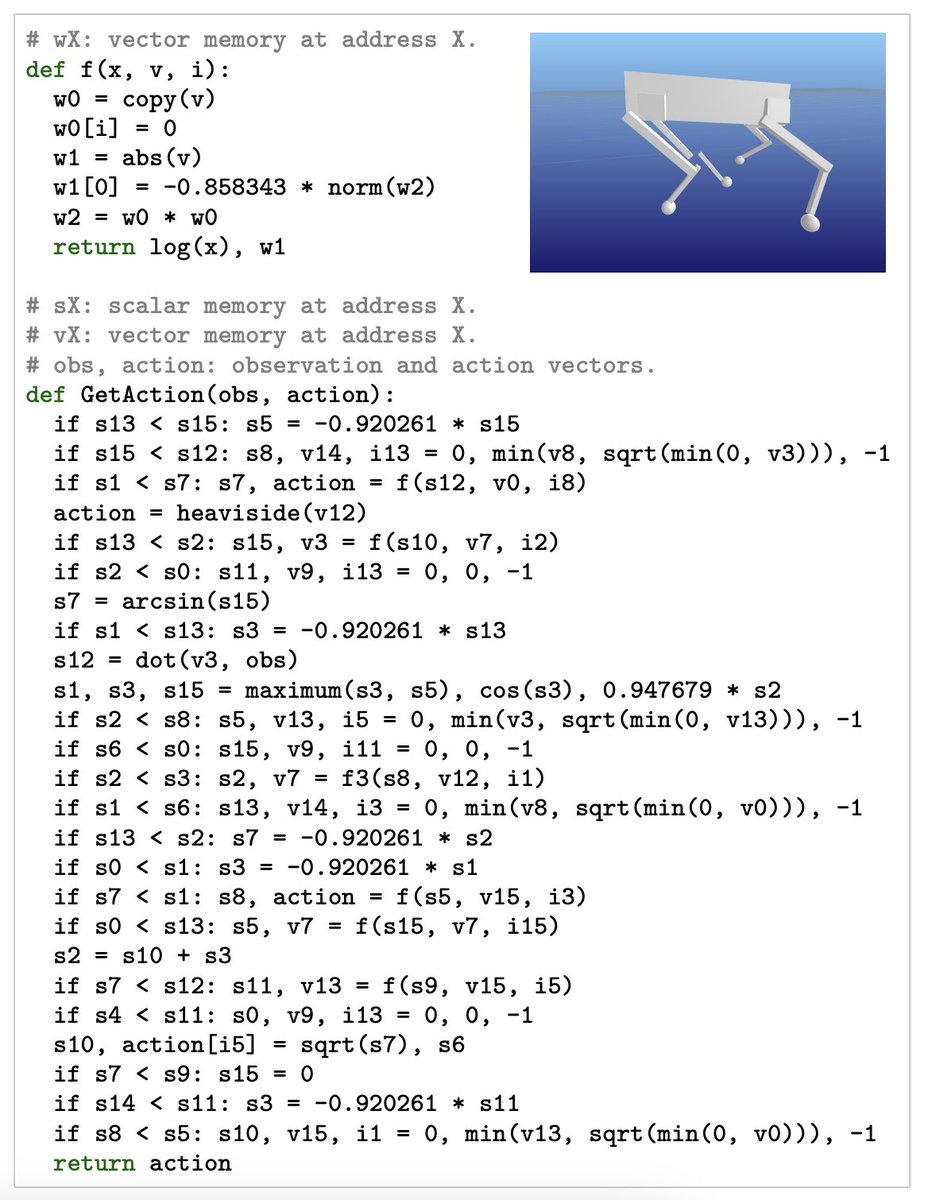

❤️ Machine Learning 🤖, LLMs 🦙, Evolutionary Computation 🧬, eXplainable AI 🔍, Bouldering 🧗

Opinions are my own

ID: 580688158

https://marcovirgolin.github.io

15-05-2012 08:28:35

715 Tweets

309 Followers

335 Following

Do we need RL to align LLMs with human feedback? 🔍👀 Last week, Stanford University researchers unveiled a paper introducing Direct Preference Optimization (DPO), a new algorithm that could change the way we align LLMs with human feedback: arxiv.org/abs/2305.18290 🧵 1/3
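The tweet only names DPO, so here is a rough illustration of the idea behind it: a minimal sketch of the per-pair DPO objective from the linked paper, which replaces the reward model + RL loop with a plain classification-style loss on preference pairs. It assumes the summed log-probabilities of the preferred ("chosen") and dispreferred ("rejected") responses have already been computed under both the policy being trained and a frozen reference model. The function names and the `beta=0.1` default are illustrative assumptions, not code from the paper.

```python
import math

def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))

def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """Per-pair DPO loss: -log sigmoid(beta * (chosen log-ratio - rejected log-ratio)).

    Inputs are summed log-probabilities of whole responses (assumed precomputed).
    beta controls how far the policy may drift from the reference model.
    """
    chosen_ratio = policy_chosen_logp - ref_chosen_logp        # log pi(y_w|x) / pi_ref(y_w|x)
    rejected_ratio = policy_rejected_logp - ref_rejected_logp  # log pi(y_l|x) / pi_ref(y_l|x)
    return -log_sigmoid(beta * (chosen_ratio - rejected_ratio))

def dpo_batch_loss(batch, beta: float = 0.1) -> float:
    """Mean DPO loss over (policy_chosen, policy_rejected, ref_chosen, ref_rejected) tuples."""
    return sum(dpo_loss(*pair, beta=beta) for pair in batch) / len(batch)
```

When the policy still equals the reference model, the margin is zero and the loss is log 2; raising the policy's probability of the chosen response (relative to the reference) pushes the loss below that, with no sampling or reward model involved.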

![Amirhossein Kazemnejad (@a_kazemnejad) on Twitter photo: When we plot the attentions we find the PEs exhibit different patterns. NoPE & T5's Relative PE show both short-range and long-range attention, ALiBi favors short-range, while Rotary & APE distribute attention more uniformly. 🤯 [10/n]](https://pbs.twimg.com/media/FxiyoL-agAMQqqu.jpg)