leander

@leanderkur

PhD in probabilistic ML @ APRIL-lab at the University of Edinburgh. Tractable probabilistic ML, closed-form statistical quantities, and probabilistic ML under hard constraints.

ID: 95281114

07-12-2009 21:20:15

124 Tweets

44 Followers

202 Following

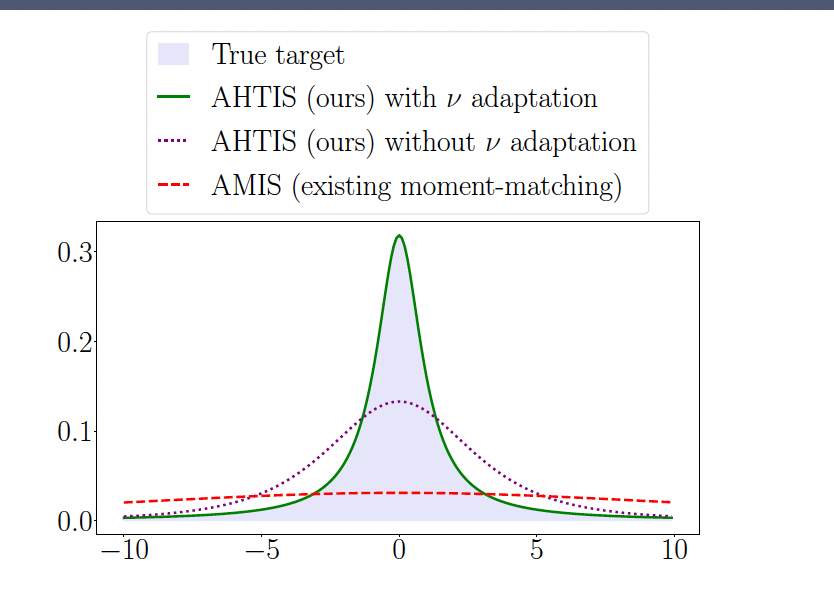

Classical mixture models are limited to positive weights, and this requires learning very large mixtures! Can we learn (deep) mixtures with negative weights? Find the answer in our #ICLR2024 spotlight by Lorenzo Loconte, Aleks, Martin, Stefan, Nicolas, and Arno Solin 📜openreview.net/forum?id=xIHi5…

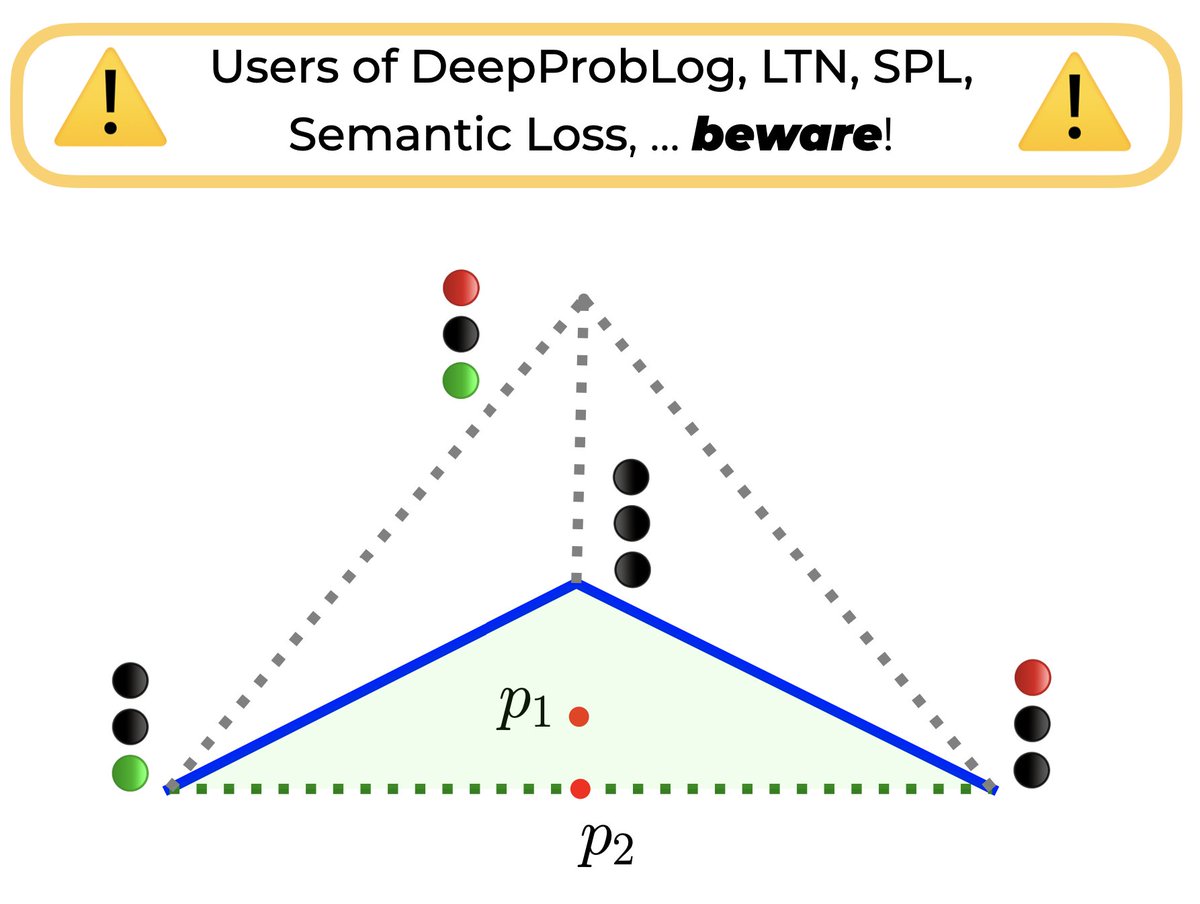

In our ICML 2024 paper, we study neurosymbolic methods under the very common independence assumption… and find many problems: 1️⃣ non-convexity, 2️⃣ disconnected minima, 3️⃣ inability to represent uncertainty. These make optimisation very challenging 😱 Let's explore 👇