Lili

@lchen915

Ph.D. student @mldcmu. Previously undergrad @berkeley_ai

ID: 1361818686512766976

http://lilichen.me 16-02-2021 23:24:18

56 Tweets

831 Followers

334 Following

Dhruv Batra The story is not as black and white as the tweet claims: should one use the same prompts for all models (what the papers did) or tune the prompt separately for each model (what the blog says; much harder to reproduce)? We already highlighted this in the paper and have a baseline to address

1/ Maximizing confidence indeed improves reasoning. We worked with Shashwat Goel, Nikhil Chandak, and Ameya P. for the past 3 weeks (over a Zoom call and many emails!) and revised our evaluations to align with their suggested prompts/parsers/sampling params. This includes changing

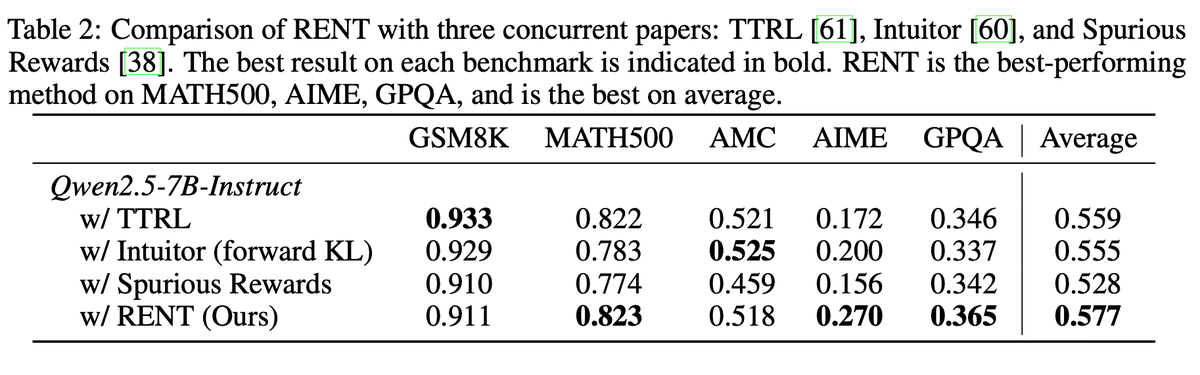

2/ We also tried our best to compare against concurrent works in the unsupervised RL space. We find that using confidence as a reward performs better than using random rewards (although spurious rewards do indeed improve performance). We also find entropy minimization (reverse KL)