Karthik A Sankararaman 🇮🇳🇺🇸

@karthikabinav

Research/Engineering in #Algorithms, #machinelearning, #generativeAI; Long-term Affiliations: #iitm, @UMDCS, @facebook, @meta

ID: 85788359

http://karthikabinavs.xyz 28-10-2009 10:34:40

1.1K Tweets

1.1K Followers

2.2K Following

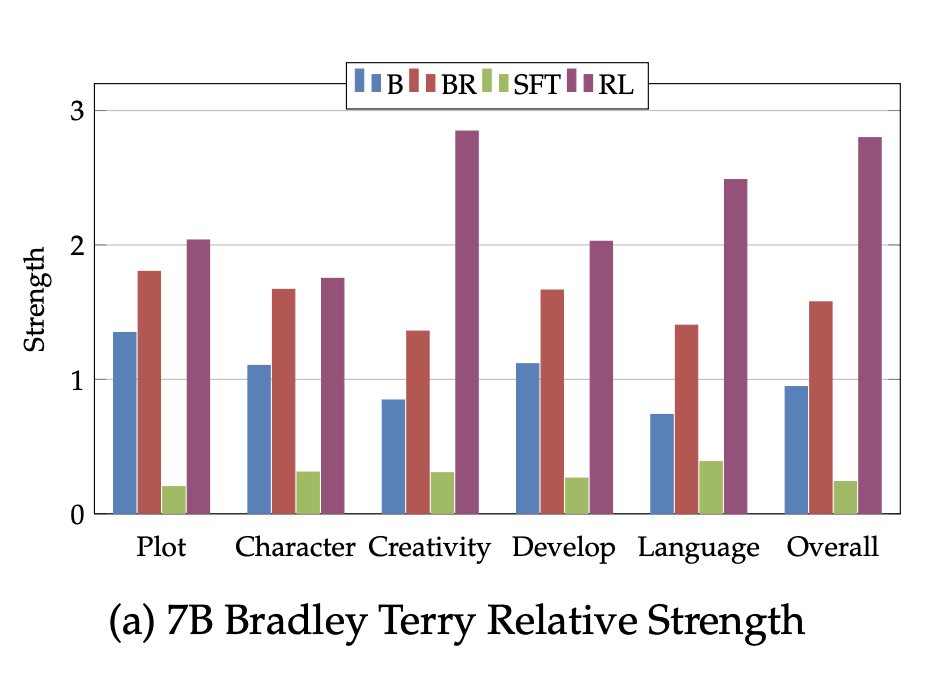

![fly51fly (@fly51fly) on Twitter photo](https://pbs.twimg.com/media/GrlZqstWwAIS2kd.jpg)

[LG] Reinforcement Learning from User Feedback

E Han, J Chen, K A Sankararaman, X Peng... [Meta GenAI] (2025)

arxiv.org/abs/2505.14946