Yixing Jiang

@jyx_su

PhD student at Stanford | Stanford Machine Learning Group, HealthRex Lab | National Science Scholar | Previously Student Researcher at Google DeepMind

ID: 1539636575474069505

https://www.linkedin.com/in/jiangyx/

22-06-2022 15:49:47

15 Tweets

275 Followers

11 Following

Congrats Google DeepMind on the Gemma-2-2B release! Gemma-2-2B has been tested in the Arena under "guava-chatbot". With just 2B parameters, it achieves an impressive score of 1130, on par with models 10x its size! (For reference: GPT-3.5-Turbo-0613: 1117, Mixtral-8x7b: 1114). This

We highlight the fundamental shift from AI as a tool to AI as a teammate in our recent multi-agent benchmarking study that measures leading large language models in their ability to carry out tasks in medicine. Full study: bit.ly/41kdgFs (Stanford AI Lab, Stanford Medicine)

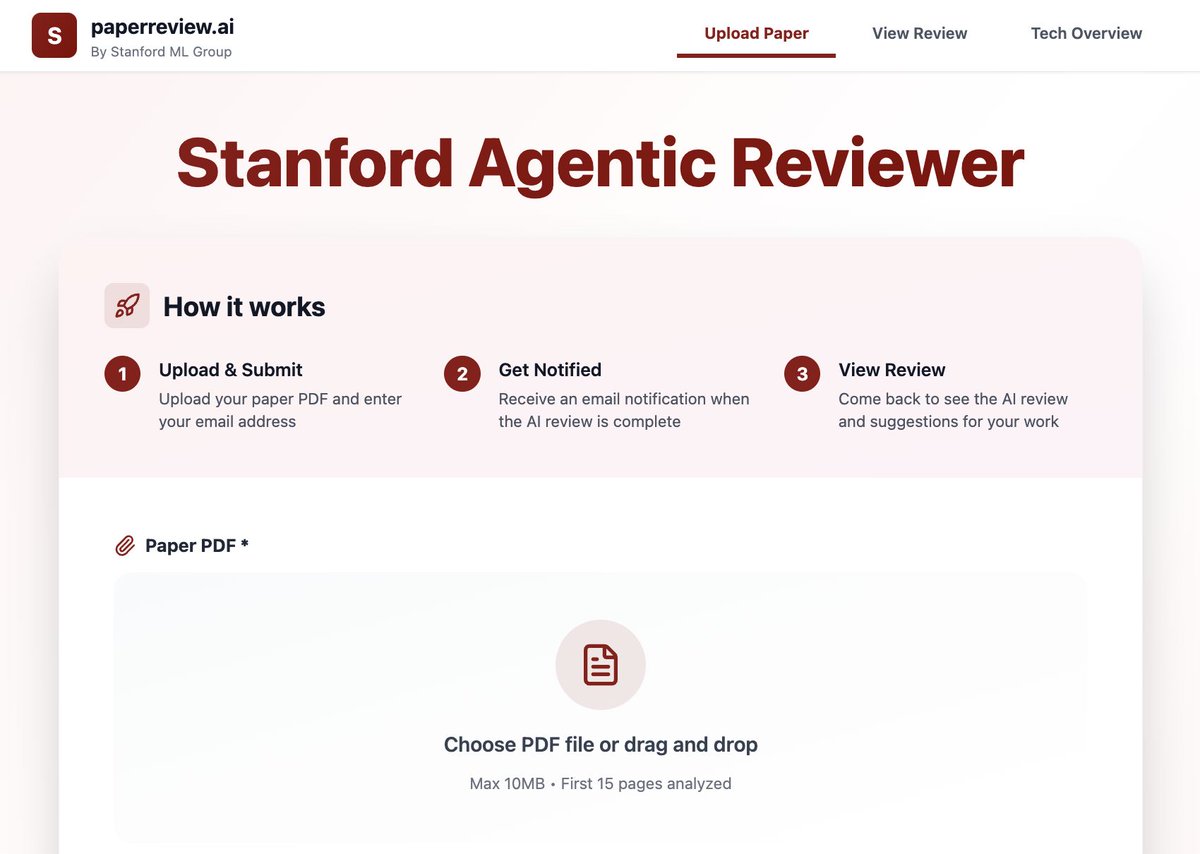

Releasing a new "Agentic Reviewer" for research papers. I started coding this as a weekend project, and Yixing Jiang made it much better. I was inspired by a student who had a paper rejected 6 times over 3 years. Their feedback loop -- waiting ~6 months for feedback each time -- was