Jeremy Bernstein

@jxbz

☎️ silicon valley tech support @ modula.systems

✍️ anon feedback @ admonymous.co/jxbz

ID: 103996493

http://jeremybernste.in 11-01-2010 23:05:07

947 Tweets

4.4K Followers

561 Following

Pretty wild to see work that I contributed to (e.g., AlgoPerf, and Crowded Valley with Robin M. Schmidt) included in a university course. I feel very honored.

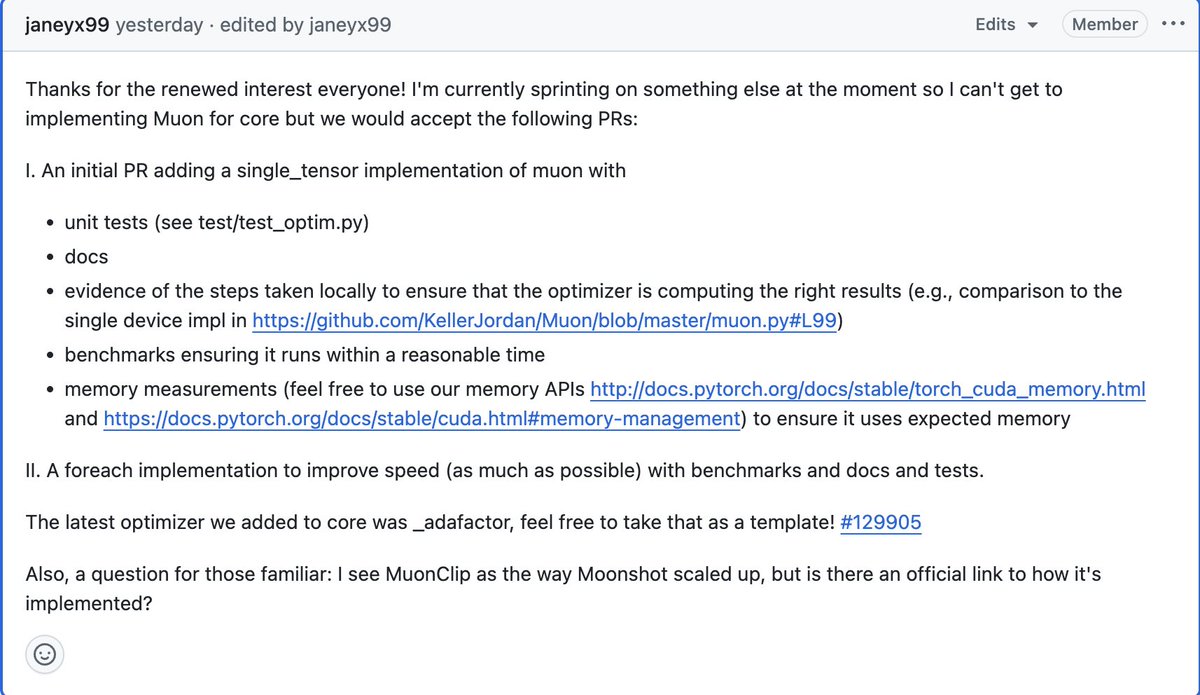

Holy shit. Kimi K2 was pre-trained on 15.5T tokens using MuonClip with zero training spikes. Muon has officially scaled to the 1-trillion-parameter LLM level. Many doubted it could scale, but here we are. So proud of the Muon team: Keller Jordan, Vlado Boza, You Jiacheng,